Generating high-fidelity videos with diffusion models has long been bottlenecked by computational inefficiency. Even on powerful GPUs, producing just a…

Diffusion Models

Sparse VideoGen2: Accelerate Video Diffusion Models 2.3x Without Retraining or Quality Loss 596

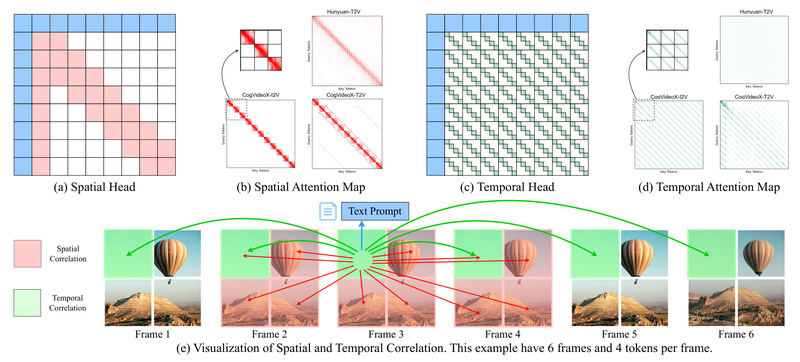

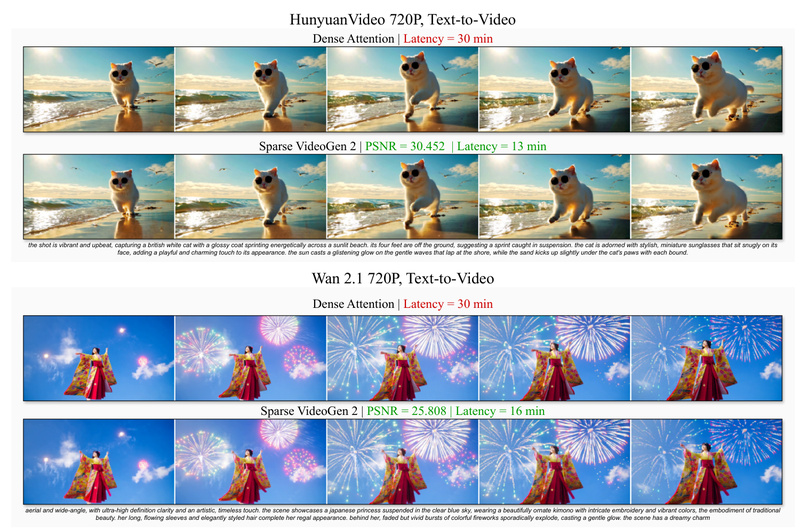

Video generation using diffusion transformers (DiTs) has reached remarkable visual fidelity—but at a steep computational cost. The quadratic complexity of…

RCG: High-Fidelity Unconditional Image Generation Without Labels 929

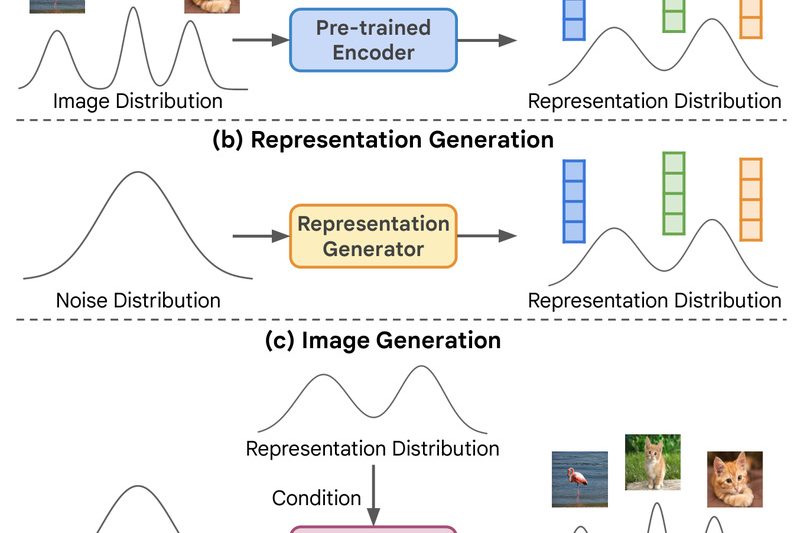

For years, unconditional image generation—creating realistic images without relying on human-provided class labels—has lagged significantly behind its class-conditional counterpart in…

FramePack: Generate Long, High-Quality Videos on a Laptop—Without Cloud Costs or Drifting Artifacts 16308

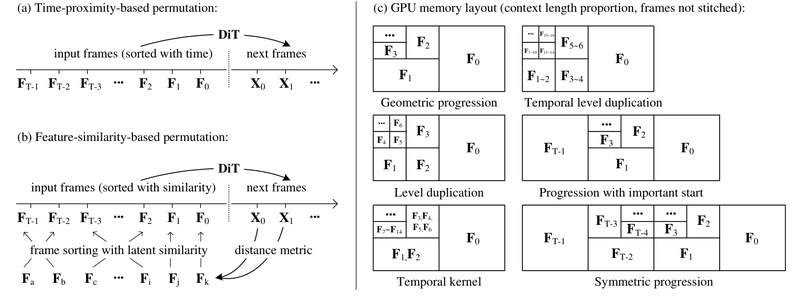

Creating long, coherent, and visually rich videos with AI has long been bottlenecked by computational complexity, memory constraints, and error…

InvSR: High-Quality Image Super-Resolution in 1–5 Steps Using Diffusion Inversion 1341

Image super-resolution (SR) remains a critical capability across computer vision applications—from upscaling smartphone photos to enhancing AI-generated content (AIGC). However,…

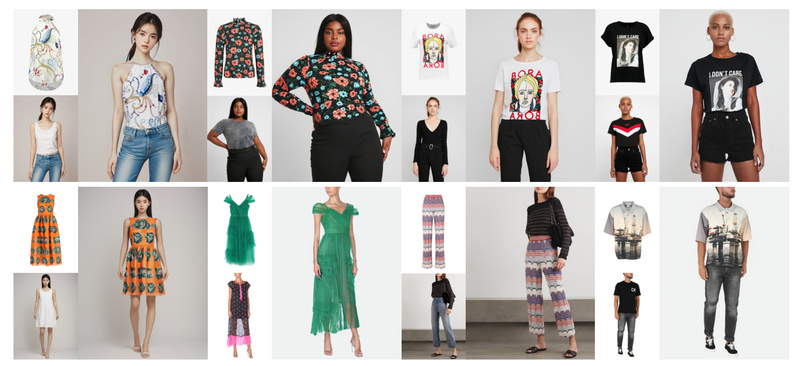

OOTDiffusion: High-Fidelity, Controllable Virtual Try-On Without Garment Warping 6482

OOTDiffusion represents a significant leap forward in image-based virtual try-on (VTON) technology. Built on the foundation of pretrained latent diffusion…

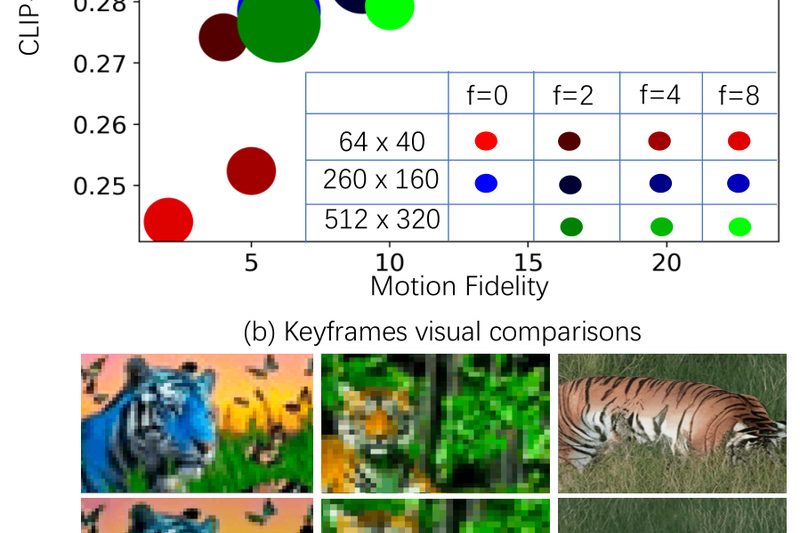

Show-1: High-Quality, Efficient Text-to-Video Generation with Precise Prompt Alignment 1133

Text-to-video generation has rapidly evolved, yet technical teams still face a persistent trade-off: high-quality outputs often come at prohibitive computational…

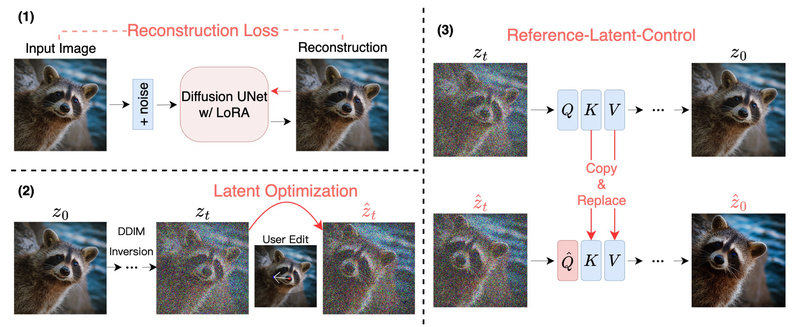

DragDiffusion: Precise, Interactive Image Editing for Real and AI-Generated Photos Using Diffusion Models 1234

DragDiffusion is an open-source framework that brings pixel-precise, point-based image manipulation to both real-world photographs and AI-generated images—without requiring users…

VSA: Accelerate Video Diffusion Models by 2.5× with Trainable Sparse Attention—No Quality Tradeoff 2780

Video generation using diffusion transformers (DiTs) is rapidly advancing—but at a steep computational cost. Full 3D attention in these models…

DreamCraft3D: Generate Photorealistic, View-Consistent 3D Assets from a Single Image 2989

Creating high-quality 3D assets has traditionally required expert modeling skills, extensive manual labor, or expensive capture setups—barriers that limit accessibility…