Training large language models (LLMs) has traditionally been the domain of well-resourced AI labs with access to massive GPU clusters…

Distributed Deep Learning

Megatron-LM: Train Billion-Parameter Transformer Models Efficiently on NVIDIA GPUs at Scale

If you’re building or scaling large language models (LLMs) and have access to NVIDIA GPU clusters, Megatron-LM—developed by NVIDIA—is one…

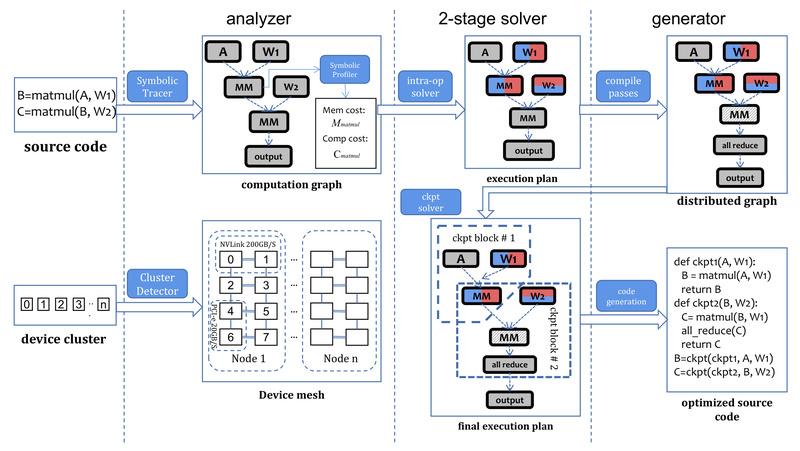

Colossal-Auto: Automate Large Model Training with Zero Expertise in Parallelization or Checkpointing

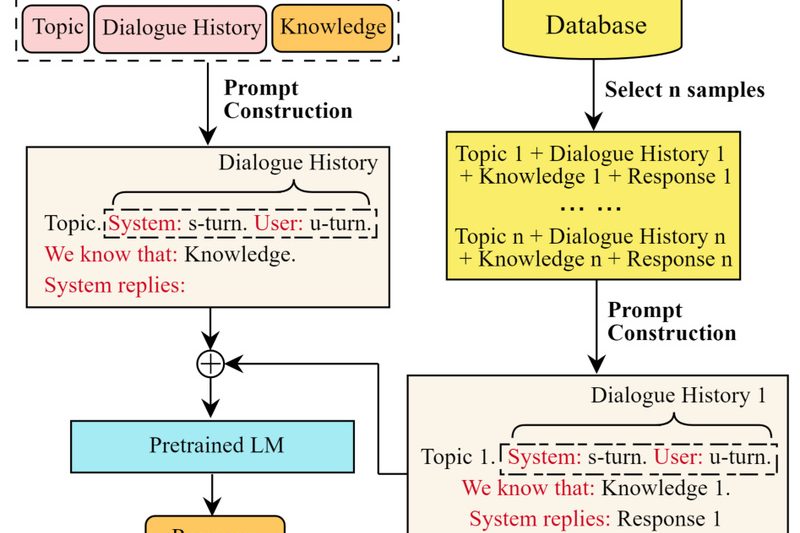

Training large-scale AI models—whether language models like LLaMA or video generators like Open-Sora—has become increasingly common, yet remains bottlenecked by…