Deploying large language models (LLMs) in real-world applications remains a major engineering challenge. While models like LLaMA-2, Falcon, and Mixtral…

Efficient LLM Deployment

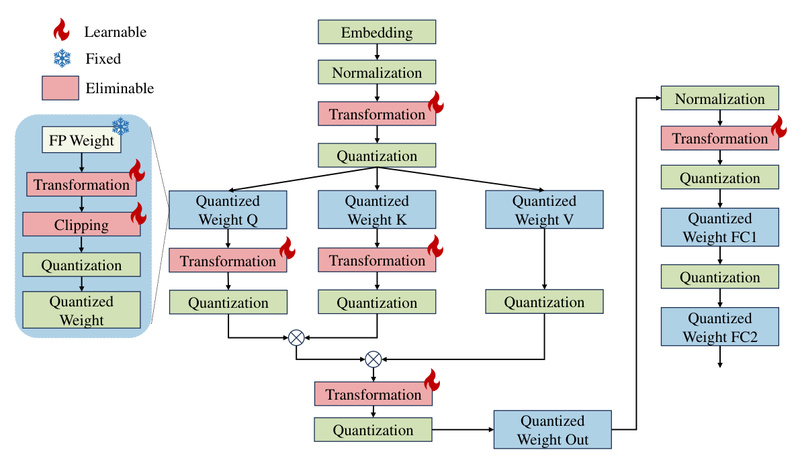

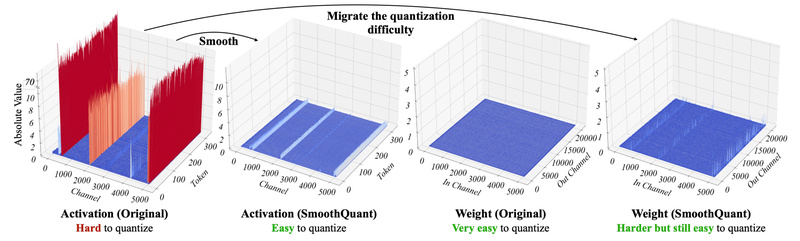

SmoothQuant: Accurate 8-Bit LLM Inference Without Retraining – Slash Memory and Boost Speed

Deploying large language models (LLMs) in production is expensive—not just in dollars, but in compute and memory. While models like…

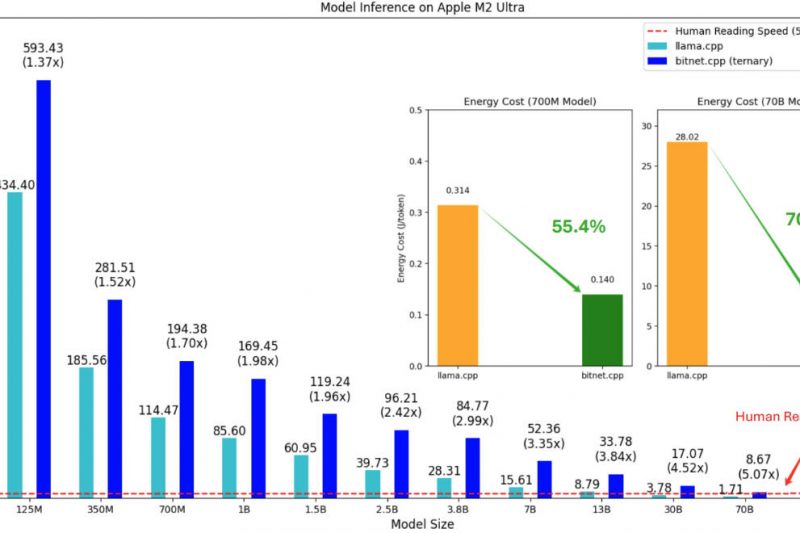

BitNet: Run 1.58-Bit LLMs Locally on CPUs with 6x Speedup and 82% Less Energy

Running large language models (LLMs) used to require powerful GPUs, expensive cloud infrastructure, or specialized hardware—until BitNet changed the game.…