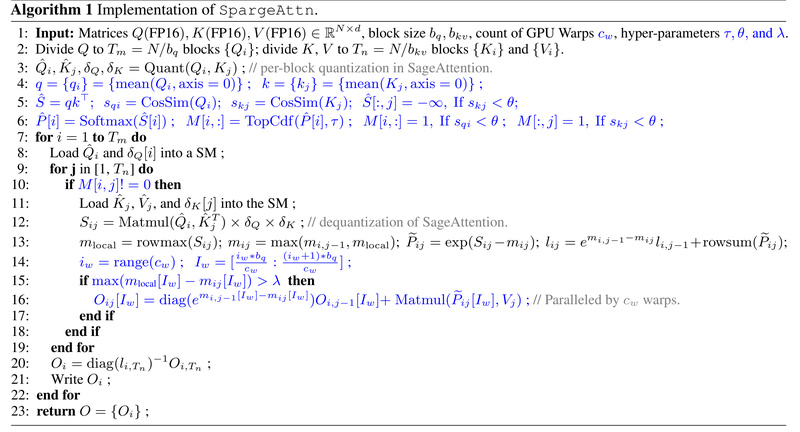

Large AI models—from language generators to video diffusion systems—are bottlenecked by the attention mechanism, whose computational cost scales quadratically with…

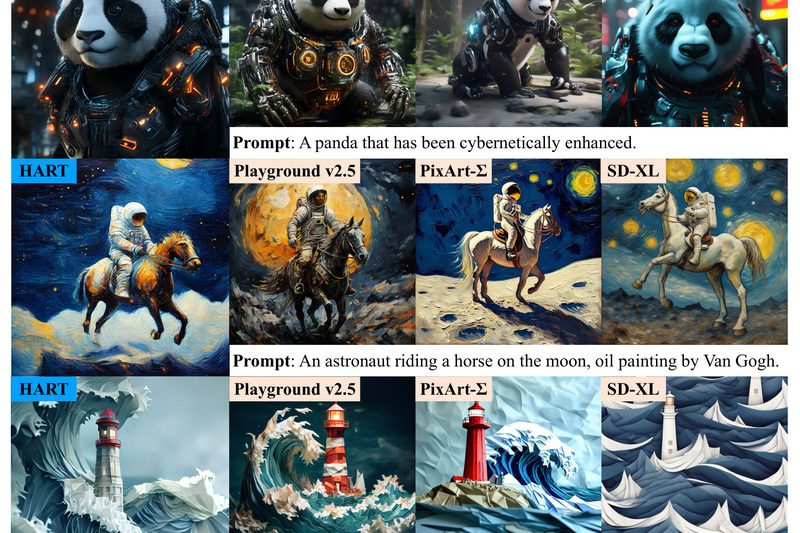

Image Generation

HART: Generate 1024×1024 Images Faster and More Efficiently Than Diffusion Models

For teams building AI-powered visual applications—whether in creative tools, digital content platforms, or rapid prototyping—the trade-off between image quality, speed,…

LightningDiT: Break the Reconstruction-Generation Trade-Off with 21.8x Faster, SOTA Image Diffusion

Latent diffusion models (LDMs) have become a cornerstone of modern high-fidelity image generation. However, a persistent challenge has limited their…

GANformer: Compositional, Controllable Image Generation with Fewer Training Steps

Traditional generative adversarial networks (GANs) often act as “black boxes”—they produce compelling images but offer little insight into how those…

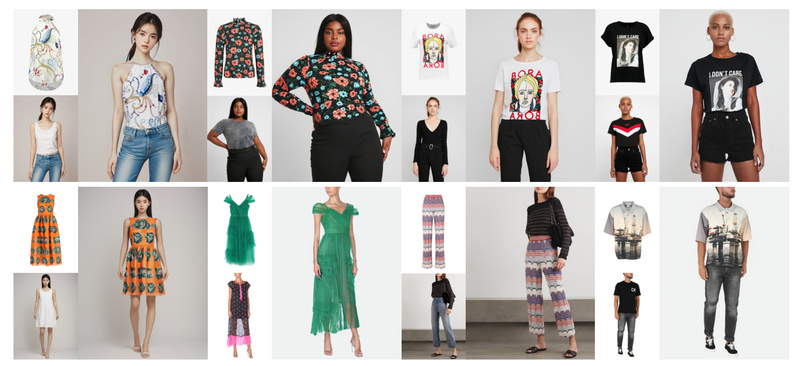

OOTDiffusion: High-Fidelity, Controllable Virtual Try-On Without Garment Warping

OOTDiffusion represents a significant leap forward in image-based virtual try-on (VTON) technology. Built on the foundation of pretrained latent diffusion…

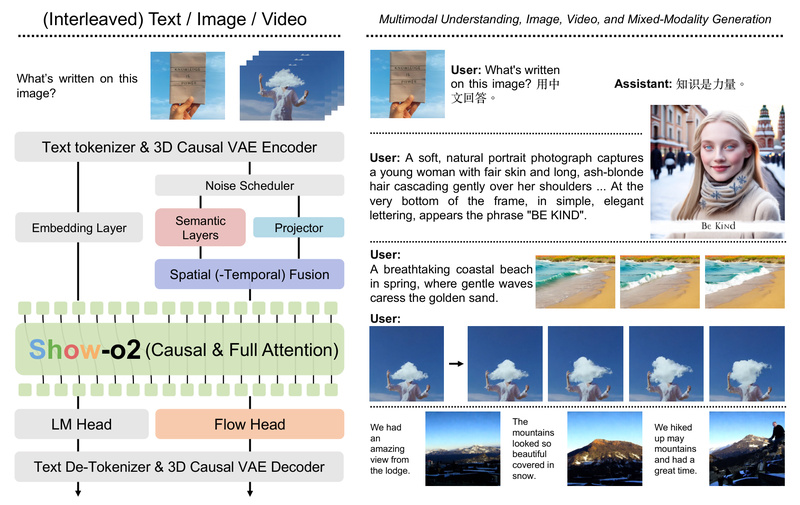

Show-o: One Unified Transformer for Multimodal Understanding and Generation Across Text, Images, and Videos

In today’s AI landscape, developers and researchers often juggle separate models for vision, language, and video—each with its own architecture,…