Building powerful language models used to be the exclusive domain of well-funded tech giants. But JetMoE is changing that narrative.…

Language Modeling

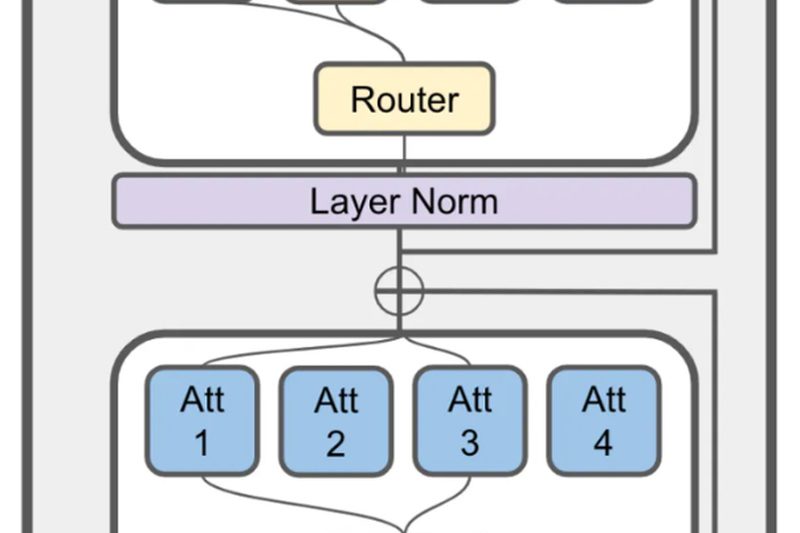

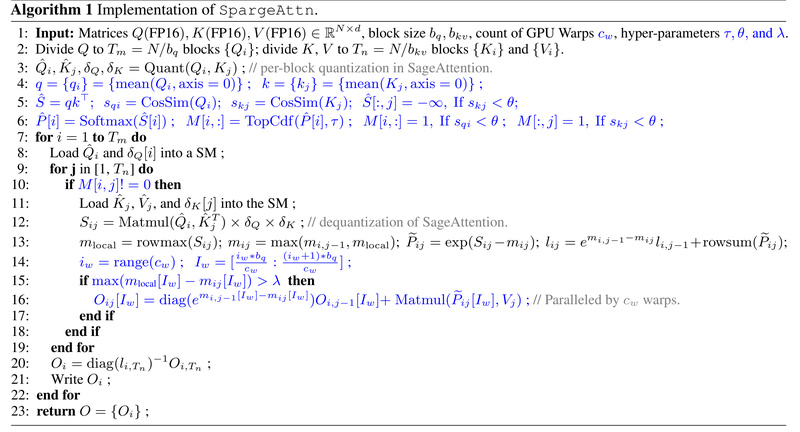

SpargeAttention: Universal, Training-Free Sparse Attention for Faster LLM, Image & Video Inference Without Retraining

Large AI models—from language generators to video diffusion systems—are bottlenecked by the attention mechanism, whose computational cost scales quadratically with…

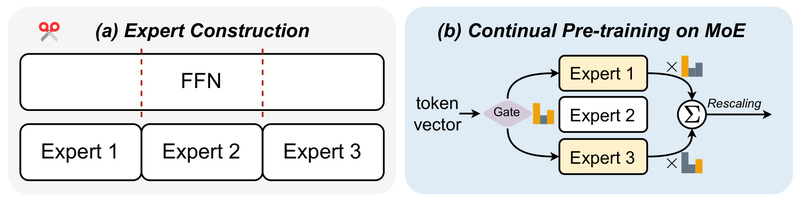

LLaMA-MoE: High-Performance Mixture-of-Experts LLM with Only 3.5B Active Parameters

If you’re a developer, researcher, or technical decision-maker working with large language models (LLMs), you’ve likely faced a tough trade-off:…