Deploying large language models (LLMs) in production is expensive—not just in dollars, but in compute and memory. While models like…

Large Language Model Inference

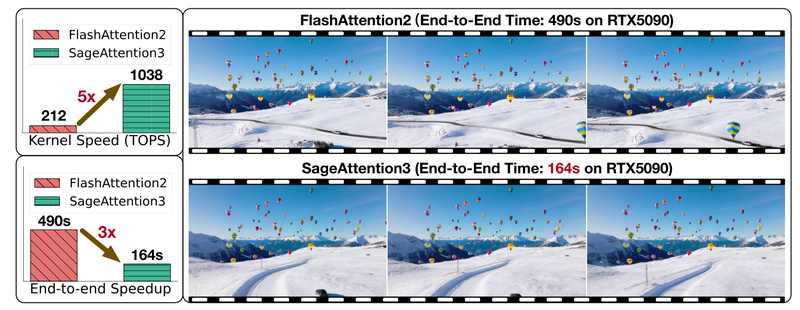

SageAttention3: 5x Faster LLM Inference on Blackwell GPUs with Plug-and-Play FP4 Attention and First-Ever 8-Bit Training Support

Attention mechanisms lie at the heart of modern large language models (LLMs) and multimodal architectures—but their quadratic computational complexity remains…