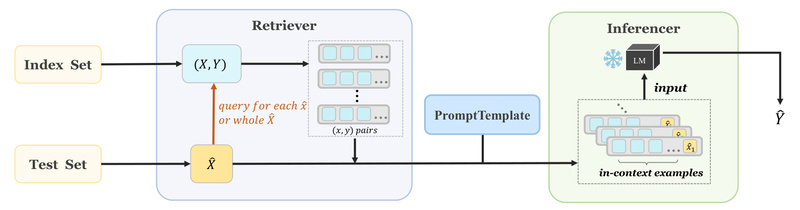

Evaluating large language models (LLMs) on new tasks traditionally requires fine-tuning—a process that’s time-consuming, resource-intensive, and often impractical when labeled…

LLM Evaluation

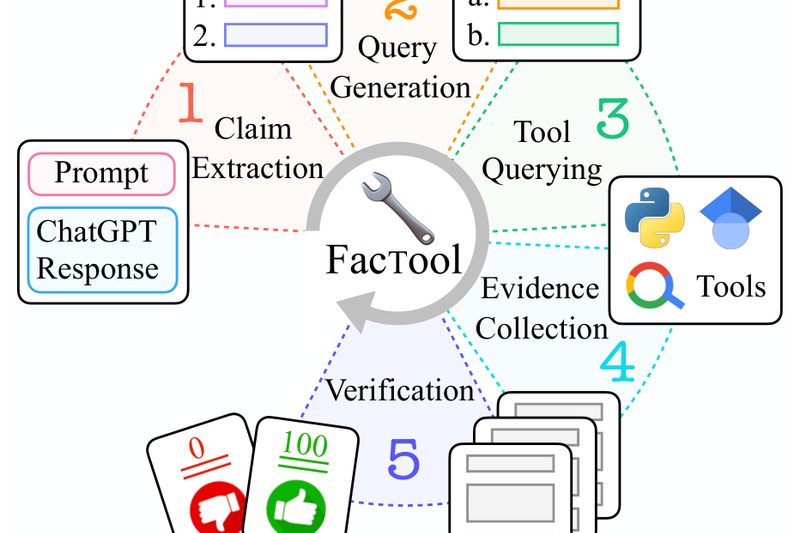

FacTool: Automatically Detect Factual Errors in LLM Outputs Across Code, Math, QA, and Scientific Writing

Large language models (LLMs) like ChatGPT and GPT-4 have transformed how we generate text, write code, solve math problems, and…