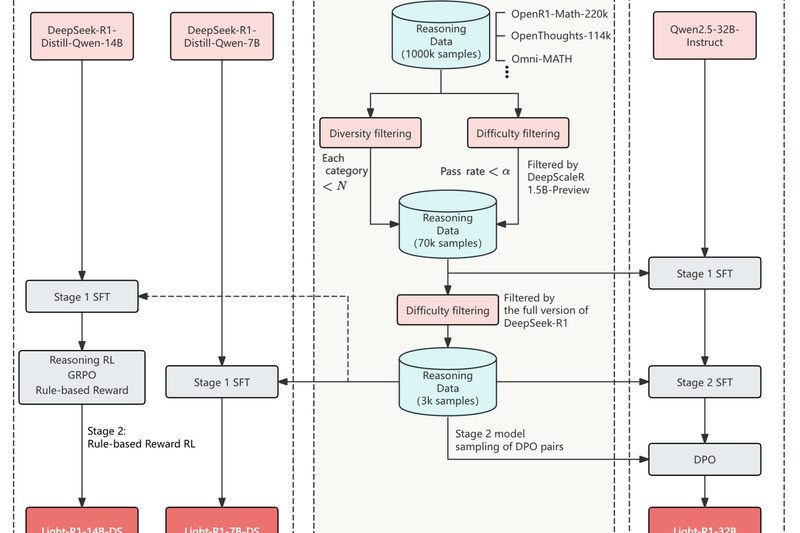

If you’re building AI systems that require reliable, step-by-step mathematical reasoning—but don’t have access to proprietary datasets, massive compute budgets,…

Mathematical Reasoning

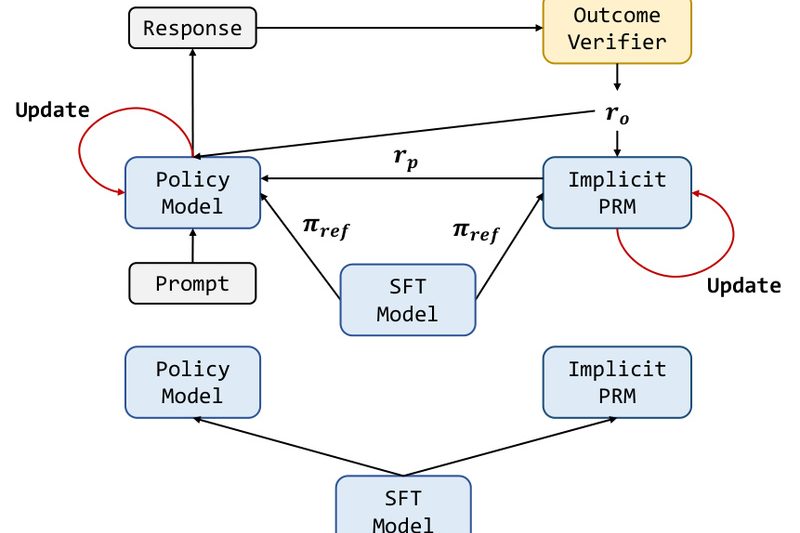

PRIME: Boost LLM Reasoning with Token-Level Rewards—No Step-by-Step Labels Needed

If you’re working to improve large language models (LLMs) on hard reasoning tasks—like math problem solving or competitive programming—you’ve likely…

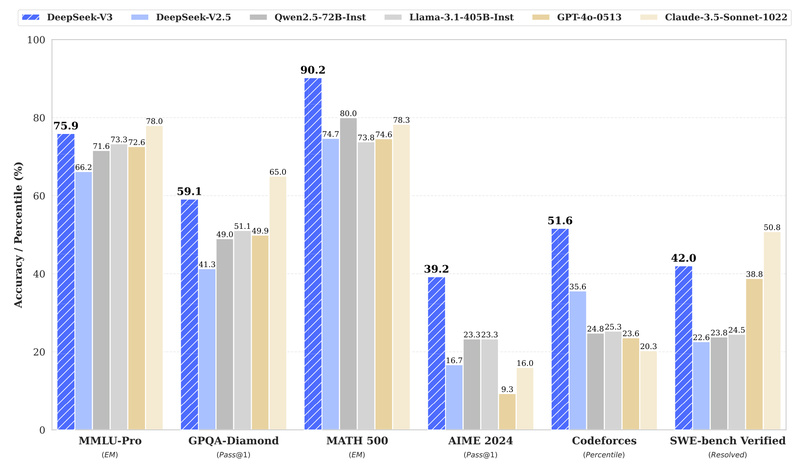

DeepSeek-V3: A High-Performance, Cost-Efficient MoE Language Model That Delivers Closed-Source Power with Open-Source Flexibility

For technical decision-makers evaluating large language models (LLMs) for real-world applications, balancing raw capability, inference cost, training efficiency, and deployment…

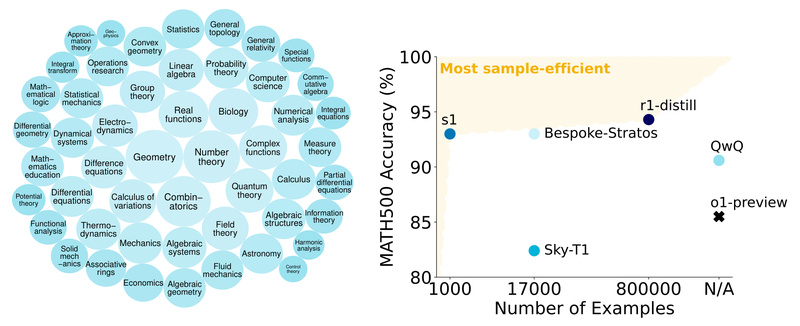

S1: Boost Reasoning Performance with Just 1,000 Examples and Smart Test-Time Scaling

In the rapidly evolving landscape of large language models (LLMs), achieving strong reasoning capabilities often comes at the cost of…

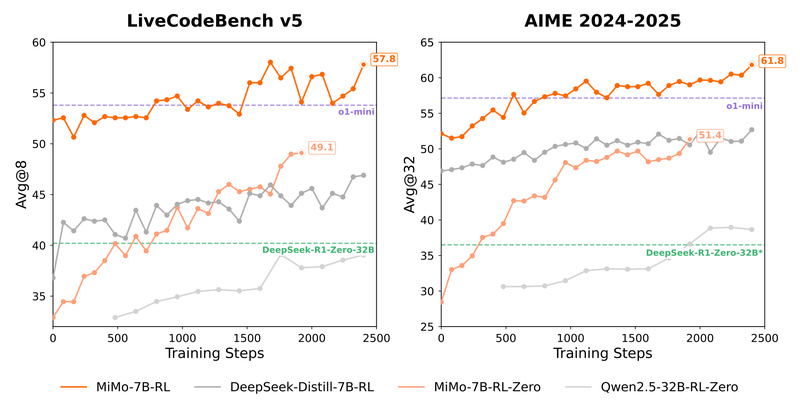

MiMo: High-Performance Reasoning in a 7B Model—Outperforming 32B Models and Matching o1-mini

MiMo is a 7-billion-parameter language model purpose-built for reasoning-intensive tasks—spanning mathematics, code generation, and STEM problem solving—without the computational overhead…

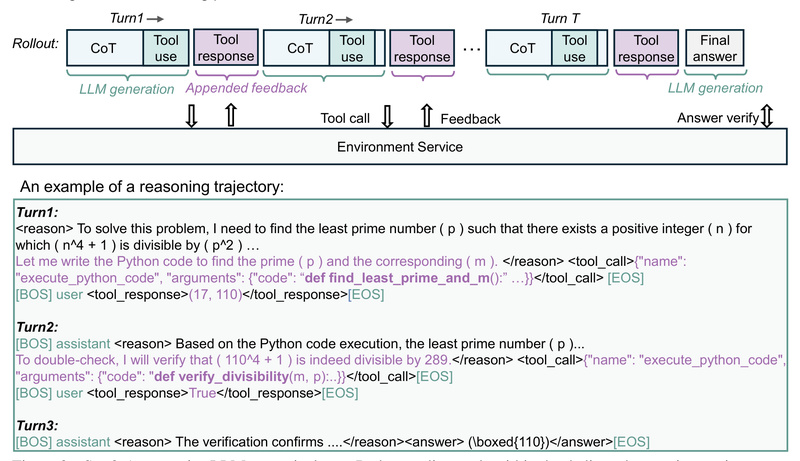

rStar2-Agent: A 14B Math Reasoning Model That Outsmarts 671B Models with Smarter, Tool-Aware Agentic Reasoning

In the rapidly evolving landscape of large language models (LLMs), bigger isn’t always better—smarter is. Enter rStar2-Agent, a 14-billion-parameter reasoning…