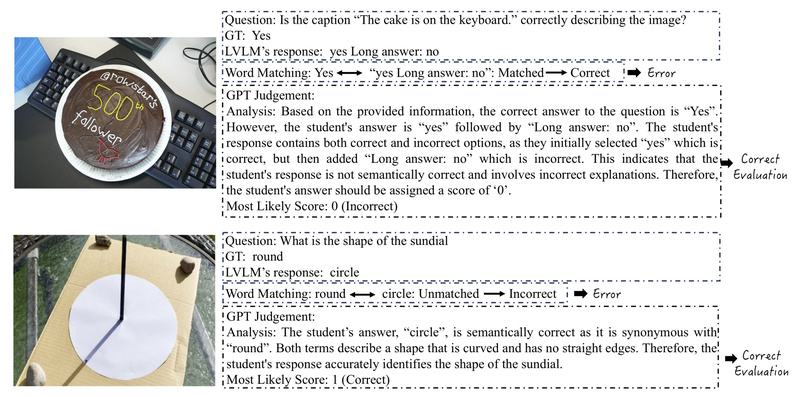

As Large Vision-Language Models (LVLMs) grow increasingly capable—and increasingly complex—evaluating their multimodal reasoning, perception, and reliability has become a significant…

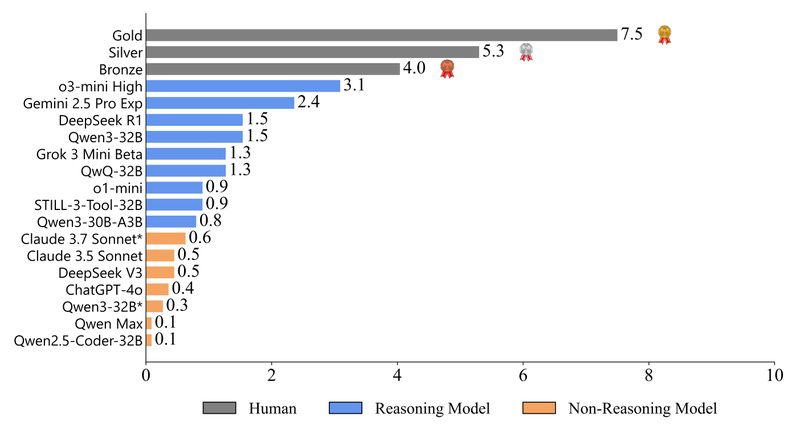

Model Evaluation

ICPC-Eval: Stress-Test LLM Reasoning with Real-World Competitive Programming Challenges

Evaluating the true reasoning capabilities of large language models (LLMs) in coding has long been hampered by benchmarks that are…