Deploying large language models (LLMs) like LLaMA, Mistral, or Vicuna often demands multiple high-end GPUs, complex inference pipelines, and substantial…

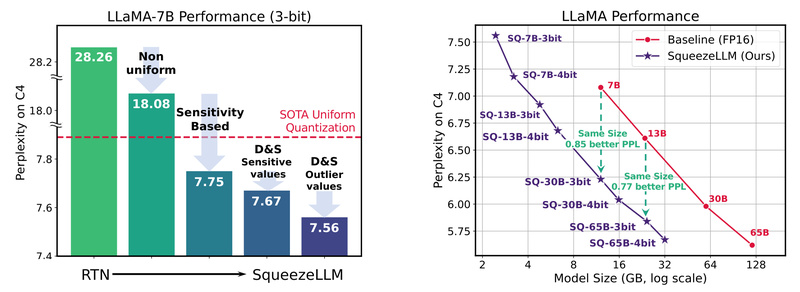

Model Quantization

TorchAO: Unified PyTorch-Native Optimization for Faster Training and Efficient LLM Inference

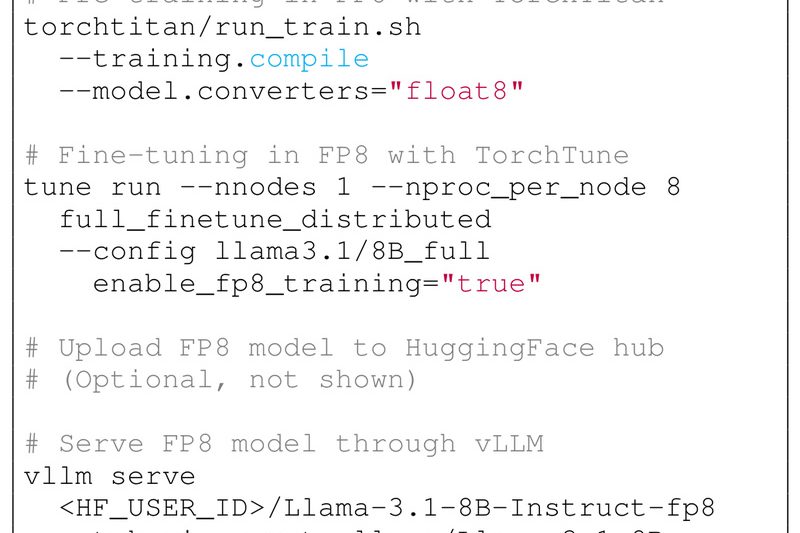

Deploying large AI models in production often involves a fragmented toolchain: one set of libraries for training, another for quantization,…