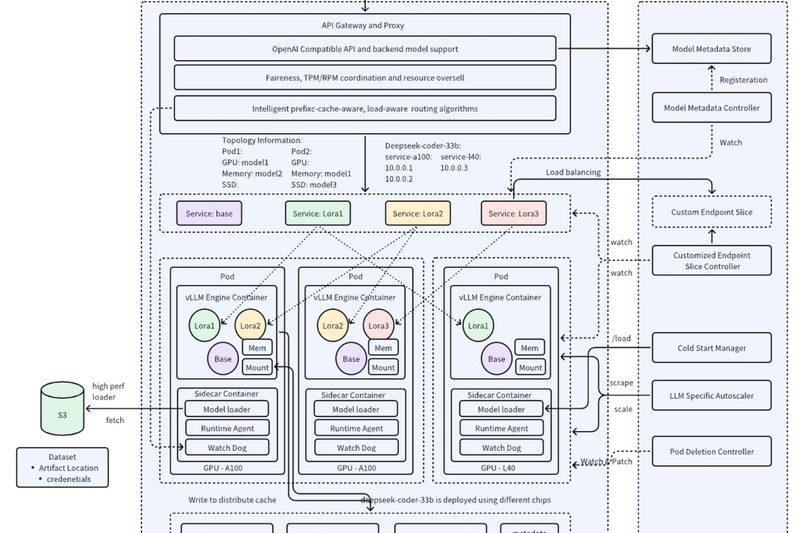

If you’re building or scaling a system that relies on large language models (LLMs)—whether for chatbots, embeddings, multimodal reasoning, or…

Model Serving

AIBrix: Scalable, Cost-Effective LLM Inference Infrastructure for Enterprise-Grade GenAI Deployment

Deploying large language models (LLMs) at scale in production environments remains a significant challenge for engineering teams. High inference costs,…