In today’s AI landscape, multimodal systems that understand both images and videos are increasingly essential—but most solutions force you to…

Multimodal Understanding

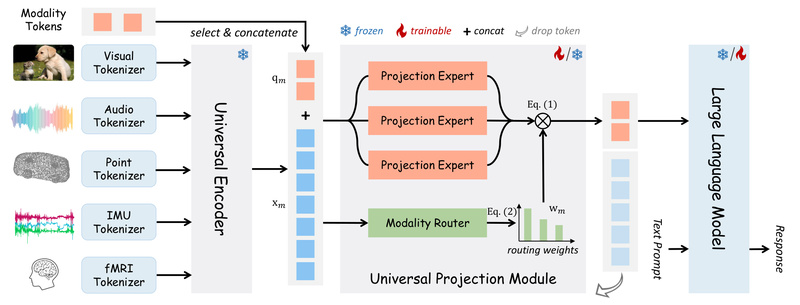

OneLLM: Unify Images, Audio, Video, Sensors, and Even Brain Signals into One Language Model 665

Multimodal AI is no longer just about images and text—it’s about seamlessly blending diverse data streams like audio, video, 3D…

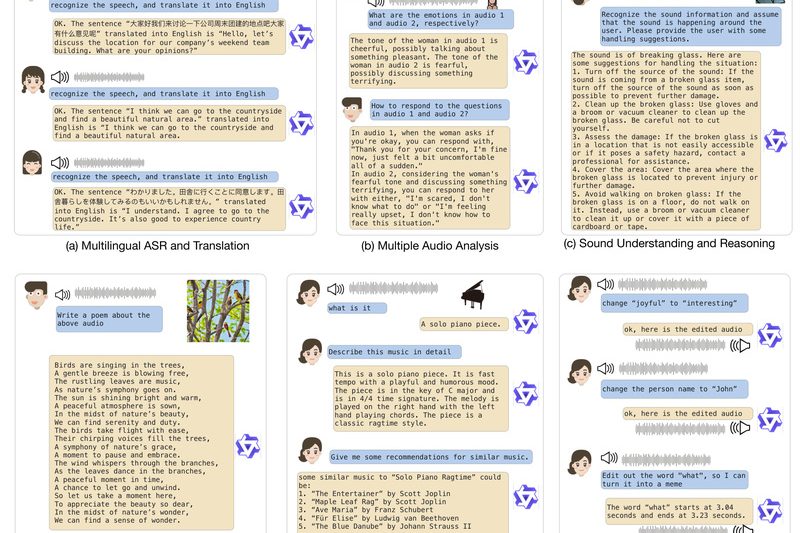

Qwen-Audio: Unified Audio-Language Understanding for Speech, Music, and Environmental Sounds Without Task-Specific Tuning 1848

Audio is one of the richest yet most fragmented modalities in artificial intelligence. Traditional systems often require separate models for…

Mini-Omni2: Unified Vision, Speech, and Text Interaction Without External ASR/TTS Pipelines 1847

In today’s open-source AI landscape, building truly multimodal applications often means stitching together separate models for vision, speech recognition (ASR),…

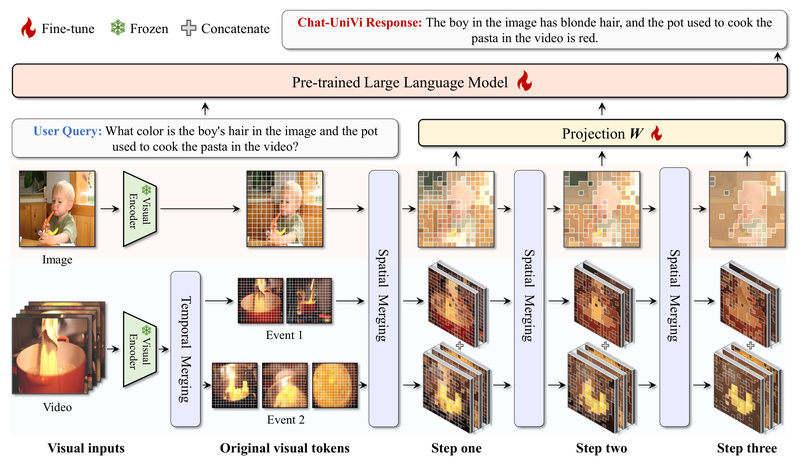

Video-LLaVA: One Unified Model for Both Image and Video Understanding—No More Modality Silos 3417

If you’re evaluating vision-language models for a project that involves both images and videos, you’ve probably faced a frustrating trade-off:…

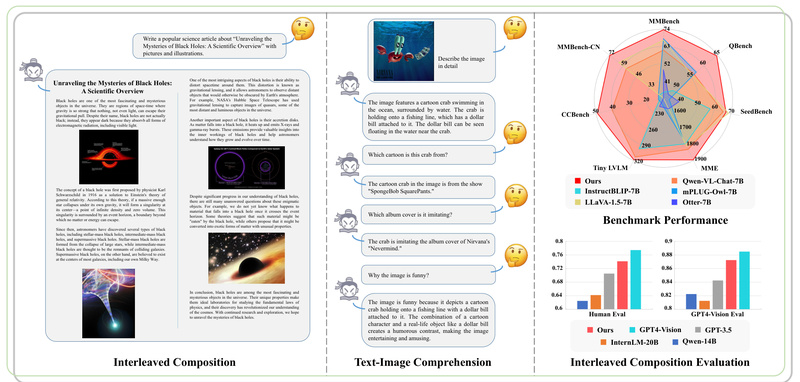

InternLM-XComposer: Generate Rich Text-Image Content and Understand High-Res Visuals with Open, Commercially Free AI 2909

For technical decision makers evaluating multimodal AI, choosing between closed-source APIs and open alternatives often means trading off control,…

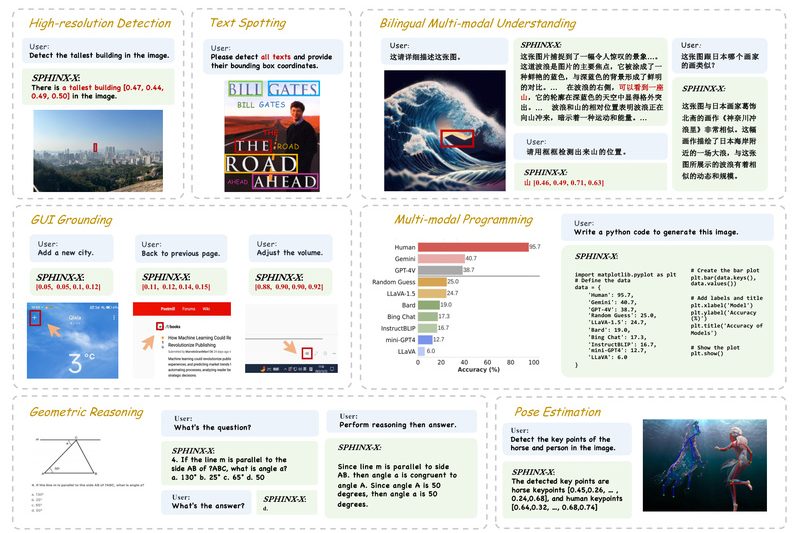

SPHINX-X: Build Scalable Multimodal AI Faster with Unified Training, Diverse Data, and Flexible Model Sizes 2794

SPHINX-X is a next-generation family of Multimodal Large Language Models (MLLMs) designed to streamline the development, training, and deployment of…

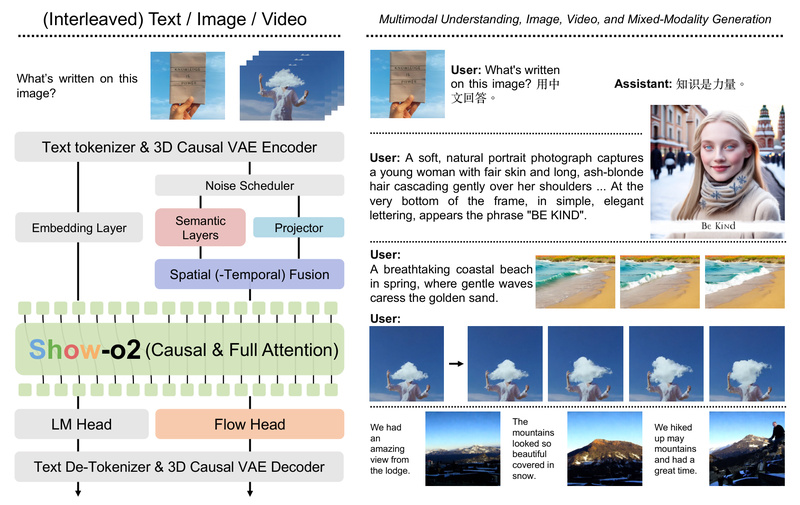

Show-o: One Unified Transformer for Multimodal Understanding and Generation Across Text, Images, and Videos 1809

In today’s AI landscape, developers and researchers often juggle separate models for vision, language, and video—each with its own architecture,…

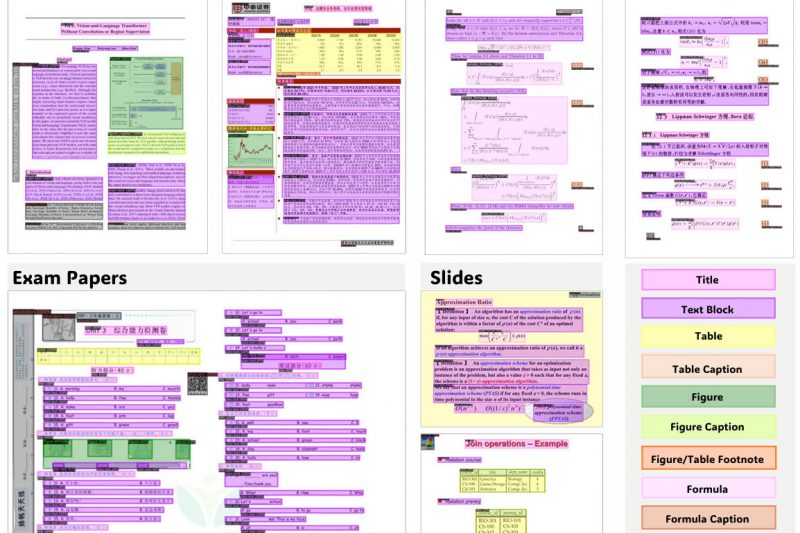

Dolphin: Lightweight, Accurate Document Image Parsing for Real-World Mixed-Content Pages 7904

Parsing complex document images—those containing intertwined text paragraphs, tables, mathematical formulas, figures, and code—is a persistent challenge in applied AI.…

MinerU: High-Precision Open-Source Document Parsing for Real-World PDFs, Tables, and Formulas 50296

Converting real-world documents—especially PDFs containing mixed content like equations, tables, multi-column layouts, and scanned text—into clean, structured, machine-readable formats remains…