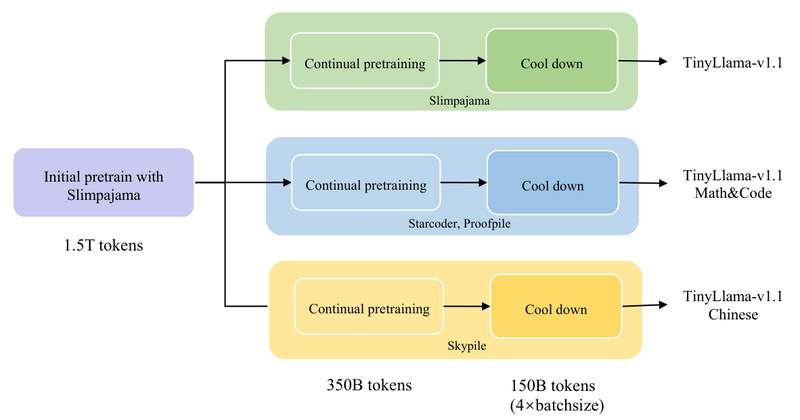

TinyLlama is a compact yet powerful open-source language model with just 1.1 billion parameters—but trained on an impressive 3 trillion…

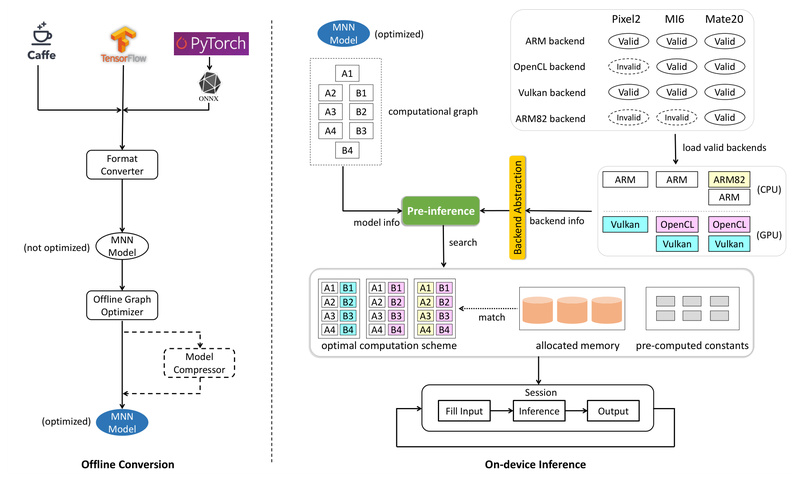

On-device Inference

MNN: Run Large Language Models and Vision AI Offline on Mobile with a Lightweight, High-Performance Inference Engine

Mobile Neural Network (MNN) is an open-source, lightweight deep learning inference engine developed by Alibaba Group to bring powerful AI…

AgentCPM-GUI: On-Device AI Agent for Bilingual Mobile Automation with Reinforcement Fine-Tuning

AgentCPM-GUI is an open-source, on-device large language model (LLM) agent designed to understand smartphone screenshots and autonomously perform user-specified tasks…

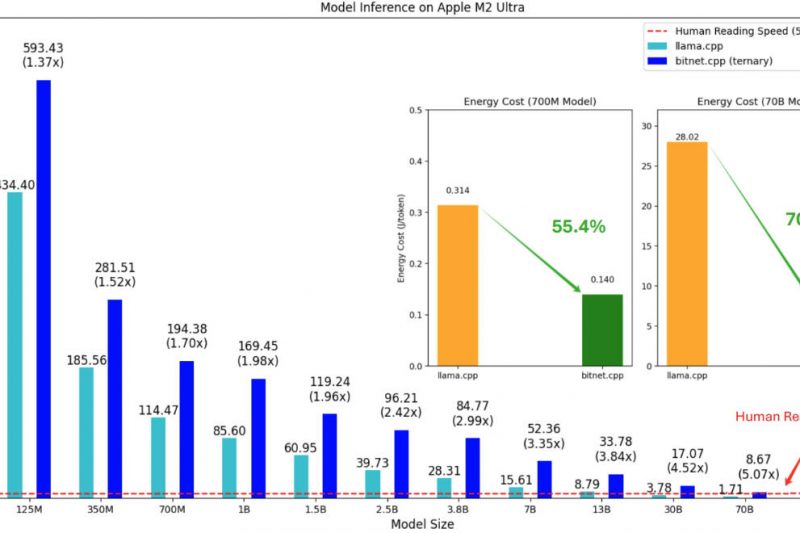

BitNet: Run 1.58-Bit LLMs Locally on CPUs with 6x Speedup and 82% Less Energy

Running large language models (LLMs) used to require powerful GPUs, expensive cloud infrastructure, or specialized hardware—until BitNet changed the game.…