If you’re building or fine-tuning large language models (LLMs) for reasoning—whether in math, coding, search, or agentic workflows—you’ve likely hit…

Reinforcement Learning For Reasoning

MiMo: High-Performance Reasoning in a 7B Model—Outperforming 32B Models and Matching o1-mini

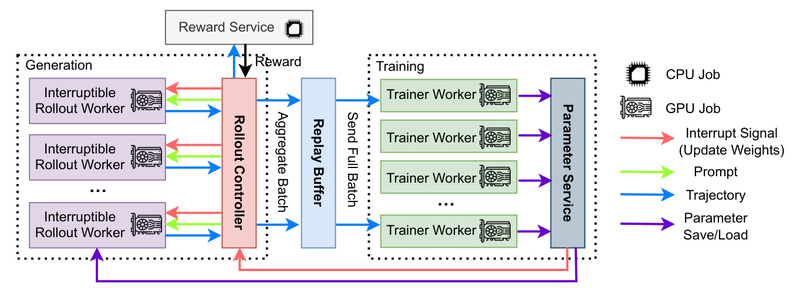

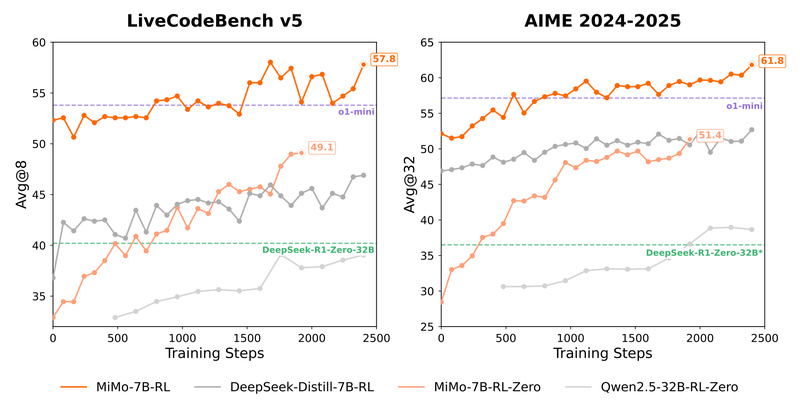

MiMo is a 7-billion-parameter language model purpose-built for reasoning-intensive tasks—spanning mathematics, code generation, and STEM problem solving—without the computational overhead…

rStar2-Agent: A 14B Math Reasoning Model That Outsmarts 671B Models with Smarter, Tool-Aware Agentic Reasoning

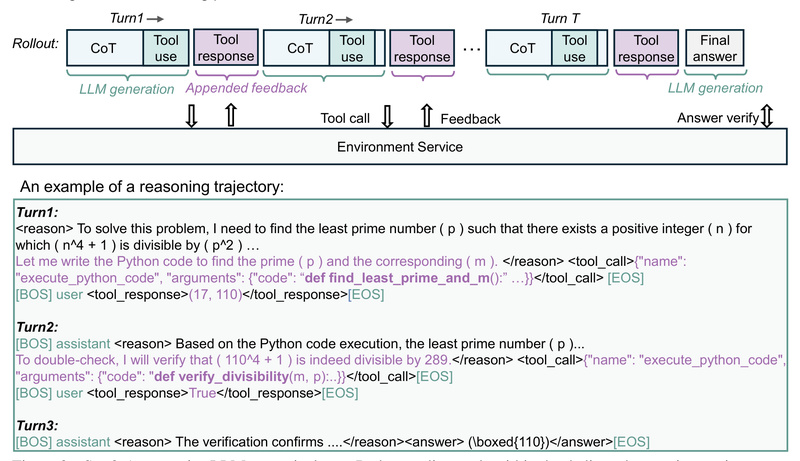

In the rapidly evolving landscape of large language models (LLMs), bigger isn’t always better—smarter is. Enter rStar2-Agent, a 14-billion-parameter reasoning…