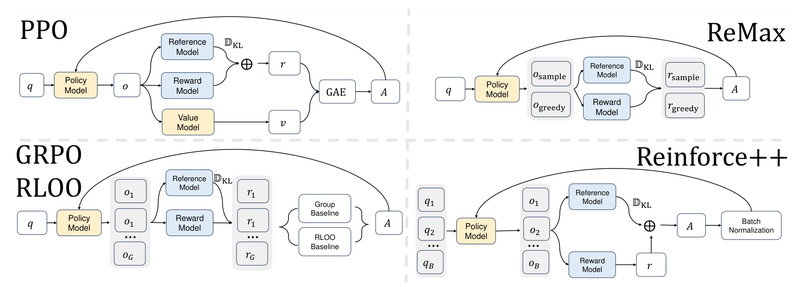

Aligning large language models (LLMs) with human preferences is essential for building safe, helpful, and reliable AI systems. Reinforcement Learning…

Reinforcement Learning From Human Feedback (RLHF)

Verl: A Flexible, High-Performance RLHF Framework for Aligning Large Language Models at Scale

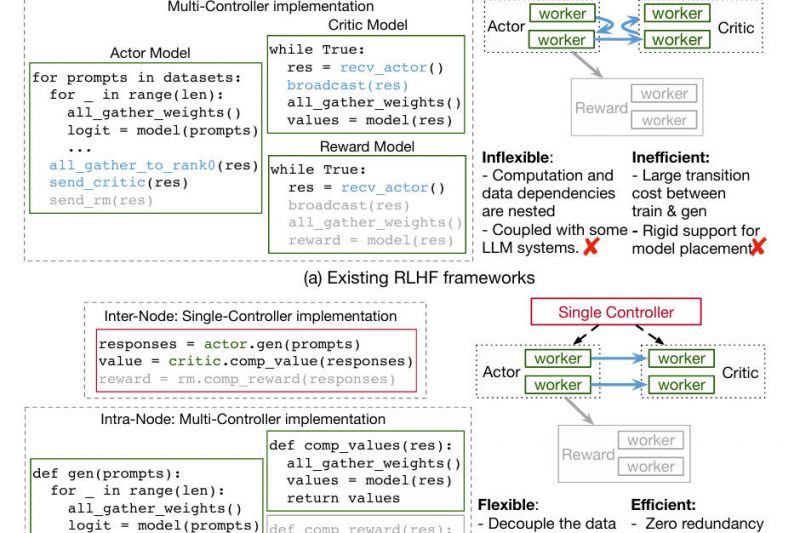

Verl (short for Volcano Engine Reinforcement Learning) is an open-source, production-ready framework designed specifically for Reinforcement Learning from Human Feedback…