In an era where deep learning models grow ever larger and more opaque, the demand for interpretable, efficient, and theoretically…

Representation Learning

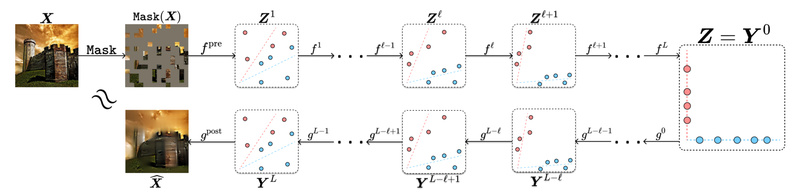

Meta-Transformer: One Unified Model for 12 Modalities—No Paired Data Needed

In today’s AI landscape, building systems that understand multiple types of data—text, images, audio, video, time series, and more—is increasingly…