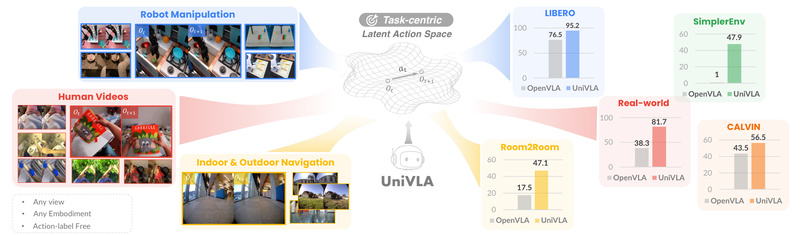

Imagine deploying a single robot policy that works across different hardware—robotic arms, mobile bases, or even human-inspired setups—without retraining from…

Robotic Manipulation

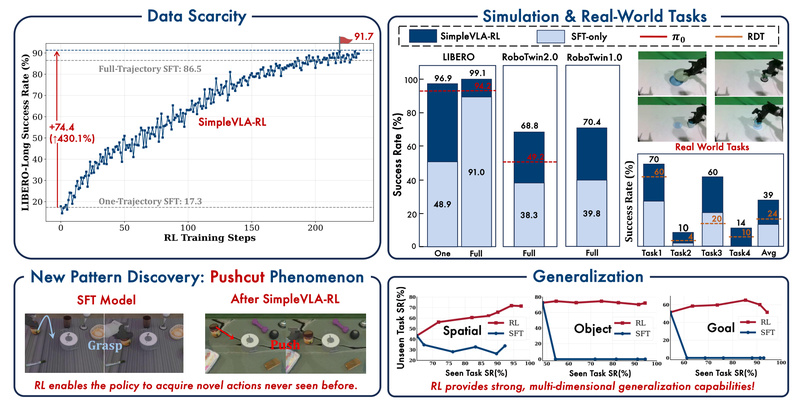

SimpleVLA-RL: Boost Robotic Task Performance with Minimal Data Using Reinforcement Learning

Building capable robotic systems that understand vision, language, and action—commonly referred to as Vision-Language-Action (VLA) models—has become a central goal…

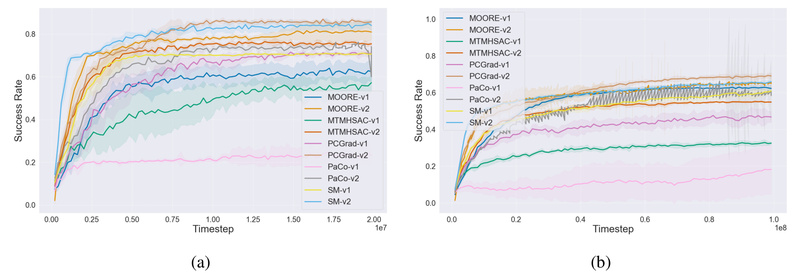

Meta-World+: A Reproducible, Standardized Benchmark for Multi-Task and Meta Reinforcement Learning in Robotic Control

Evaluating reinforcement learning (RL) agents—especially those designed for multi-task or meta-learning scenarios—requires benchmarks that are consistent, well-documented, and technically accessible.…

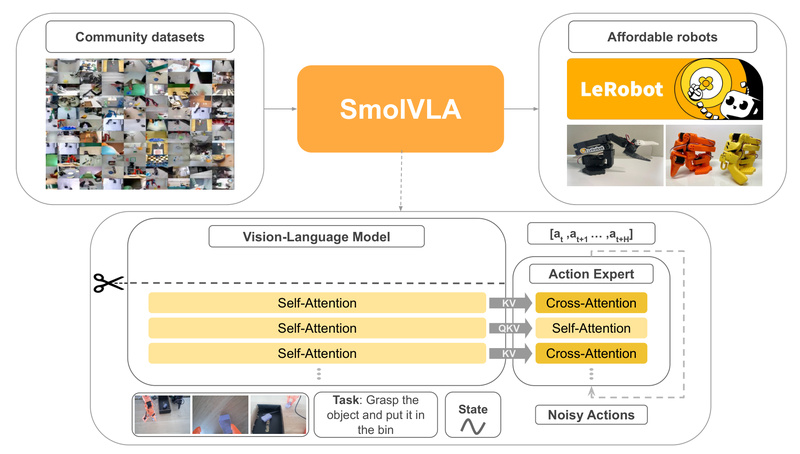

SmolVLA: High-Performance Vision-Language-Action Robotics on a Single GPU

SmolVLA is a compact yet capable Vision-Language-Action (VLA) model designed to bring state-of-the-art robot control within reach of researchers, educators,…