Deploying large language models (LLMs) to handle long documents, extensive chat histories, or detailed technical manuals remains a major bottleneck…

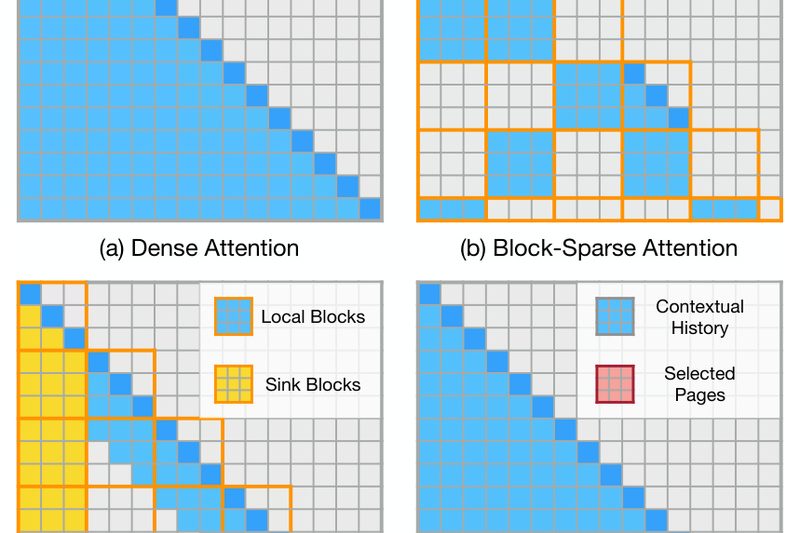

Sparse Attention

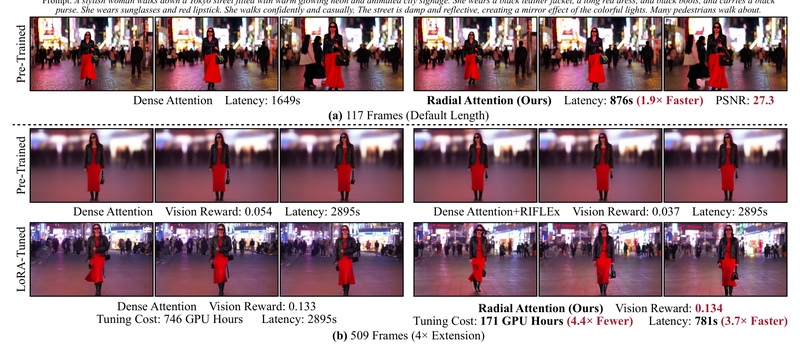

Radial Attention: Generate 4× Longer Videos 3.7× Faster with O(n log n) Sparse Attention

Generating high-quality, long-form videos with diffusion models remains one of the most computationally demanding tasks in generative AI. Standard attention…

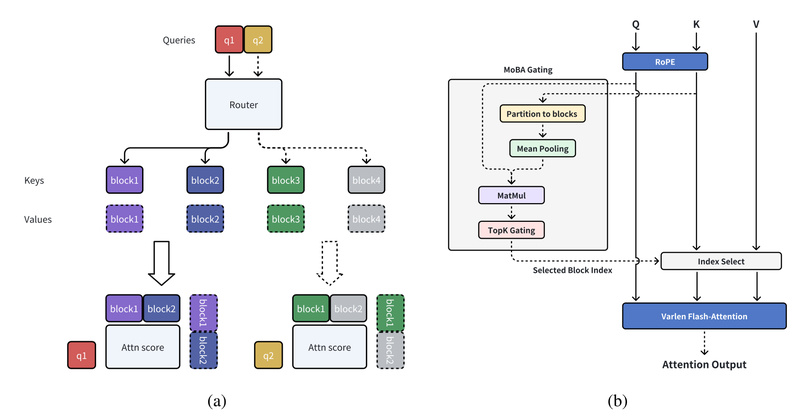

MoBA: Efficient Long-Context Attention for LLMs Without Compromising Reasoning Quality

Handling long input sequences—ranging from tens of thousands to over a million tokens—is no longer a theoretical benchmark but a…

VSA: Accelerate Video Diffusion Models by 2.5× with Trainable Sparse Attention—No Quality Tradeoff

Video generation using diffusion transformers (DiTs) is rapidly advancing—but at a steep computational cost. Full 3D attention in these models…