Training large language models (LLMs) on long sequences—whether for document-level instruction tuning, multi-modal reasoning, or complex alignment tasks—has long been…

Supervised Fine-tuning

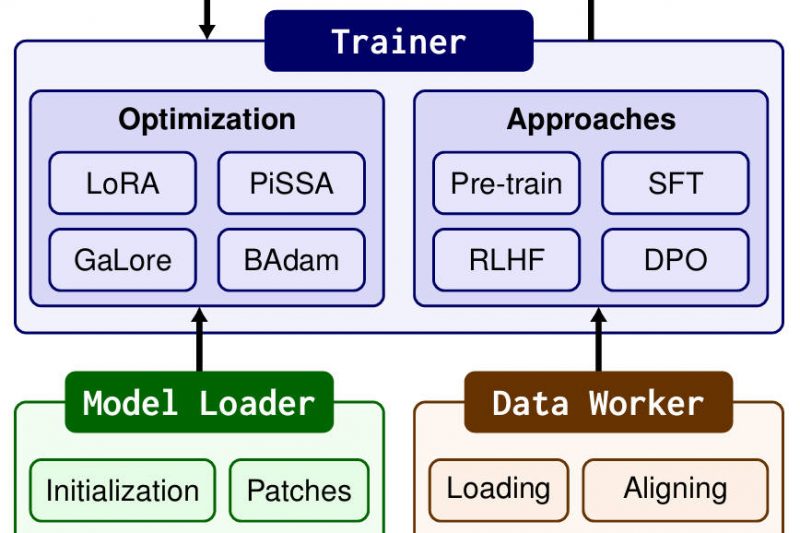

LlamaFactory: Fine-Tune 100+ Language Models Effortlessly—No Coding Required 63856

Fine-tuning large language models (LLMs) used to be a complex, time-consuming endeavor—requiring deep expertise in deep learning frameworks, custom code…