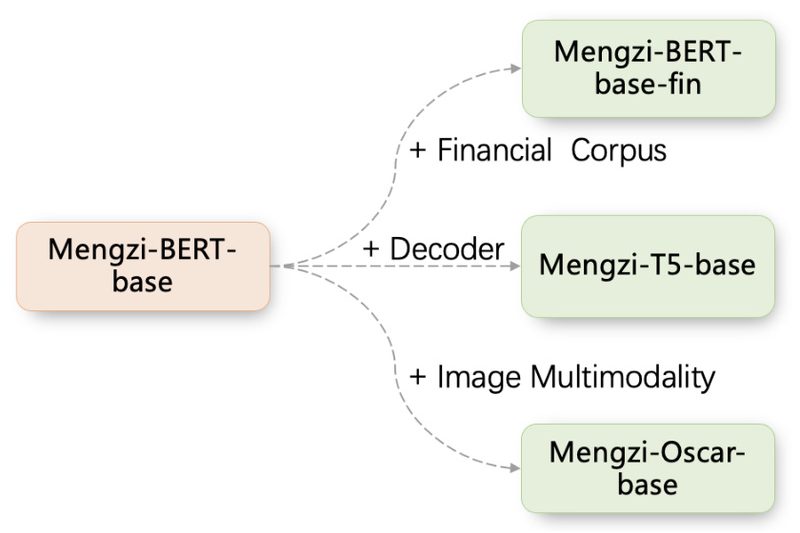

In recent years, pre-trained language models (PLMs) have revolutionized natural language processing (NLP), delivering state-of-the-art results across a wide spectrum…

Text Generation

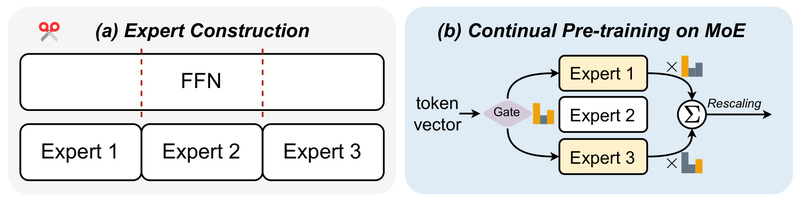

LLaMA-MoE: High-Performance Mixture-of-Experts LLM with Only 3.5B Active Parameters

If you’re a developer, researcher, or technical decision-maker working with large language models (LLMs), you’ve likely faced a tough trade-off:…
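The "only 3.5B active parameters" in the title comes from sparse routing: a router sends each token to a few experts, so only those experts' weights take part in that token's forward pass. Below is a minimal plain-Python sketch of generic top-k softmax gating. It is not LLaMA-MoE's code; the linear router, the toy experts, and the `top_k` value are hypothetical choices for illustration.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> Vector:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_moe(x: Vector, router: List[Vector], experts: List[Expert],
              top_k: int = 2) -> Tuple[Vector, List[int]]:
    """Route one token vector x to its top_k experts and mix their outputs."""
    # Router logits: one dot product per expert (a hypothetical linear router).
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router]
    # Indices of the top_k highest-scoring experts.
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Softmax over the chosen logits only (equivalent to renormalizing the
    # full softmax over the selected experts).
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for g, i in zip(gates, chosen):
        y = experts[i](x)  # only the selected experts run for this token
        out = [o + g * y_j for o, y_j in zip(out, y)]
    return out, chosen


# Toy setup: four "experts" that scale their input by 1, 2, 3 and 4.
experts = [lambda v, s=s: [s * t for t in v] for s in (1.0, 2.0, 3.0, 4.0)]
router = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.5, 0.5]]
out, chosen = top_k_moe([2.0, 1.0], router, experts, top_k=2)
print(chosen)  # [0, 3]
print(out)
```

In a hypothetical configuration with 16 experts and `top_k=4`, each token would touch only a quarter of the expert weights, which is how an MoE model's active parameter count can sit far below its total.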

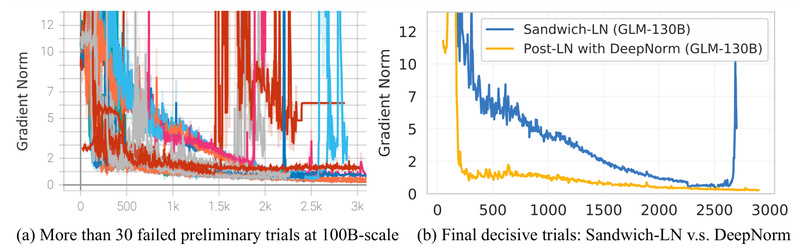

GLM-130B: A Truly Open, Bilingual 130B-Parameter Language Model That Runs on Consumer GPUs

If you’re evaluating large language models (LLMs) for real-world deployment—especially in multilingual settings—you’ve likely hit a wall: most top-performing models…

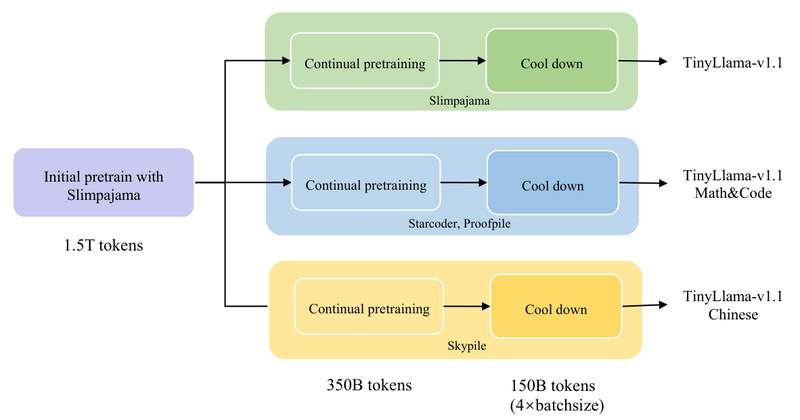

TinyLlama: A Fast, Efficient 1.1B Open Language Model for Edge Deployment and Speculative Decoding

TinyLlama is a compact yet powerful open-source language model with just 1.1 billion parameters—but trained on an impressive 3 trillion…
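Speculative decoding, mentioned in the TinyLlama title, pairs a small, fast draft model with a larger target model: the draft proposes several tokens, the target verifies them, and the longest agreeing run is kept plus one token from the target. The sketch below shows the greedy variant under loud assumptions. Both "models" are hypothetical plain functions that map a token prefix to the next token, not TinyLlama's or any library's API. With greedy decoding, the result is identical to running the target alone.

```python
from typing import Callable, List

# Hypothetical stand-in for a model: maps a token prefix to the greedy next token.
Model = Callable[[List[int]], int]


def greedy_decode(model: Model, prompt: List[int], n: int) -> List[int]:
    """Plain autoregressive decoding: one model call per new token."""
    seq = list(prompt)
    for _ in range(n):
        seq.append(model(seq))
    return seq


def speculative_decode(target: Model, draft: Model, prompt: List[int],
                       n: int, k: int = 4) -> List[int]:
    """Greedy speculative decoding: the draft proposes k tokens, the target verifies."""
    seq = list(prompt)
    goal = len(prompt) + n
    while len(seq) < goal:
        # 1. The cheap draft model proposes up to k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(min(k, goal - len(seq))):
            proposal.append(draft(seq + proposal))
        # 2. The target checks each proposed position. A real system scores all
        #    positions in one batched forward pass; this loop is for clarity.
        accepted: List[int] = []
        for tok in proposal:
            expected = target(seq + accepted)
            accepted.append(expected)
            if tok != expected:
                break  # first mismatch: keep the target's token, drop the rest
        else:
            # Every draft token matched, so the target's next token is a bonus.
            if len(seq) + len(accepted) < goal:
                accepted.append(target(seq + accepted))
        seq.extend(accepted)
    return seq


# Toy models: the draft agrees with the target except at every third position.
def target(prefix: List[int]) -> int:
    return (3 * prefix[-1] + len(prefix)) % 11


def draft(prefix: List[int]) -> int:
    return 0 if len(prefix) % 3 == 0 else target(prefix)


print(speculative_decode(target, draft, [1], n=12) == greedy_decode(target, [1], n=12))  # True
```

The speedup in practice comes from step 2: when the draft is usually right, one expensive target pass can confirm several tokens at once.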

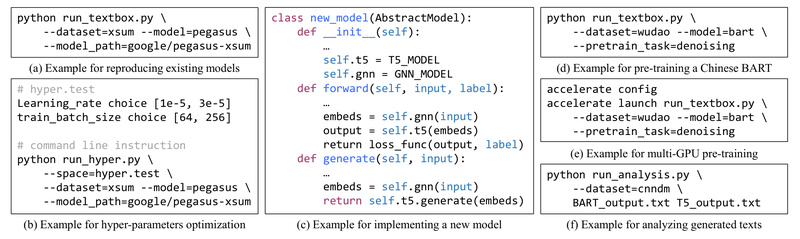

TextBox 2.0: A Unified Library for Rapid Text Generation with Pre-Trained Language Models

If you’ve ever struggled to compare BART, T5, and a custom Chinese language model on summarization, translation, or dialogue generation—only…

llama.cpp: Run Large Language Models Anywhere—Fast, Lightweight, and Offline

In an era where large language models (LLMs) power everything from chatbots to code assistants, deploying them outside of cloud…

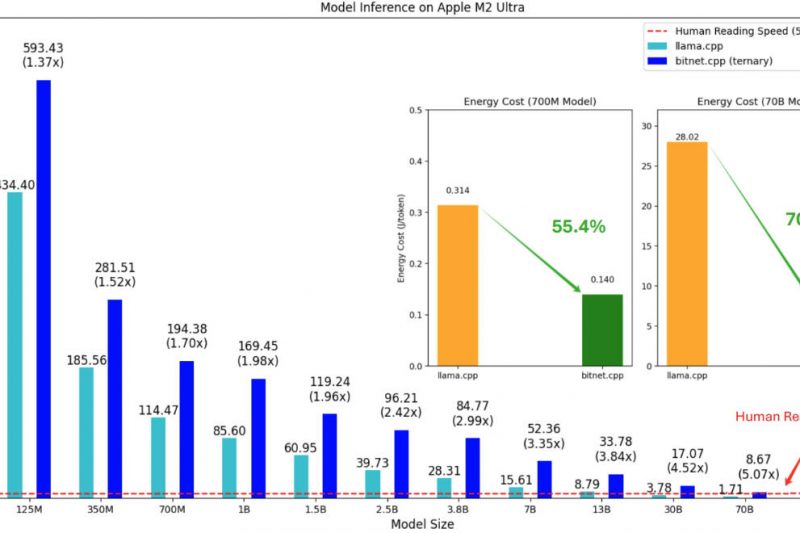

BitNet: Run 1.58-Bit LLMs Locally on CPUs with Up to 6x Speedup and 82% Less Energy

Running large language models (LLMs) used to require powerful GPUs, expensive cloud infrastructure, or specialized hardware—until BitNet changed the game.…
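The "1.58-bit" figure refers to ternary weights: each weight is -1, 0, or +1, and log2(3) ≈ 1.58 bits. The BitNet b1.58 paper describes an absmean quantizer that divides a weight matrix by its mean absolute value, then rounds and clips the result to [-1, 1]. Here is a minimal plain-Python sketch of that weight step. The names `absmean_ternary` and `ternary_matvec` and the `eps` default are illustrative assumptions, not bitnet.cpp's API, and activation quantization is omitted. The second function shows why ternary weights let a matrix-vector product skip multiplications in its inner loop.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def absmean_ternary(w: Matrix, eps: float = 1e-5) -> Tuple[List[List[int]], float]:
    """Quantize weights to {-1, 0, +1} with one per-matrix scale gamma."""
    abs_vals = [abs(v) for row in w for v in row]
    gamma = sum(abs_vals) / len(abs_vals)  # mean absolute value of W
    # RoundClip(W / (gamma + eps), -1, 1); Python's round() rounds half to even.
    q = [[max(-1, min(1, round(v / (gamma + eps)))) for v in row] for row in w]
    return q, gamma


def ternary_matvec(q: List[List[int]], gamma: float, x: List[float]) -> List[float]:
    """y = gamma * (Q @ x), using only additions and subtractions per row."""
    return [gamma * (sum(xj for qij, xj in zip(row, x) if qij == 1)
                     - sum(xj for qij, xj in zip(row, x) if qij == -1))
            for row in q]


w = [[0.4, -1.2], [0.05, 2.0]]
q, gamma = absmean_ternary(w)
print(q)  # [[0, -1], [0, 1]]
print(ternary_matvec(q, gamma, [1.0, 2.0]))
print(math.log2(3))  # about 1.585 bits per ternary weight
```

Production kernels pack ternary values densely and use optimized CPU routines; this sketch only illustrates the arithmetic.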