Building capable robotic systems that understand vision, language, and action—commonly referred to as Vision-Language-Action (VLA) models—has become a central goal…

Vision-Language-Action Modeling

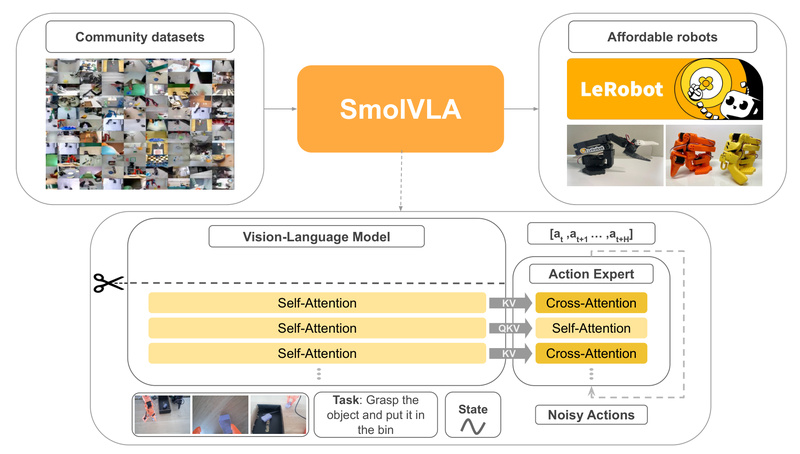

SmolVLA: High-Performance Vision-Language-Action Robotics on a Single GPU

SmolVLA is a compact yet capable Vision-Language-Action (VLA) model designed to bring state-of-the-art robot control within reach of researchers, educators,…

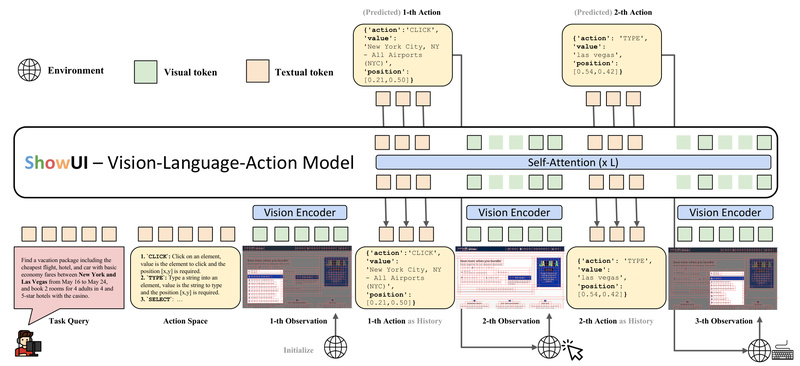

ShowUI: Open-Source Vision-Language-Action Model for Human-Like GUI Automation from Screenshots

In today’s digital workflows, automating interactions with graphical user interfaces (GUIs)—whether on websites, mobile apps, or desktop software—is a high-value…