In today’s AI-driven product landscape, the ability to understand both images and text isn’t just a research novelty—it’s a practical…

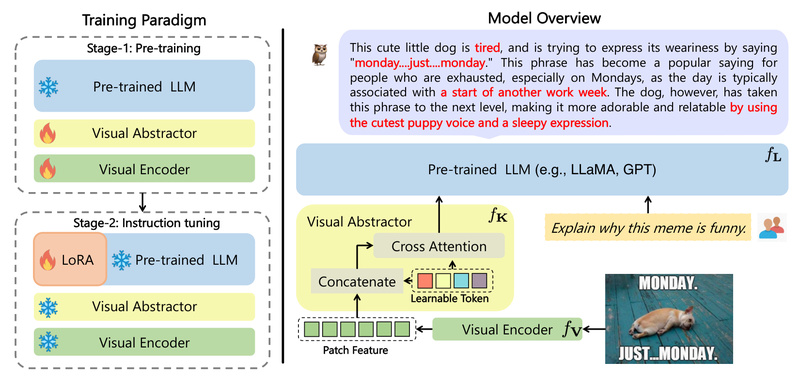

Vision-language Understanding

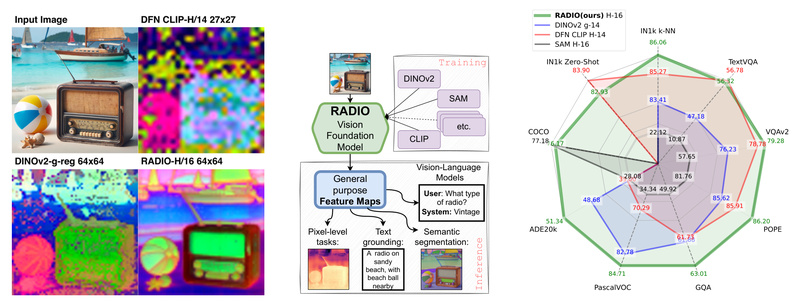

AM-RADIO: Unify Vision Foundation Models into One High-Performance Backbone for Multimodal, Segmentation, and Detection Tasks

In modern computer vision, practitioners often juggle multiple foundation models—CLIP for vision-language alignment, DINOv2 for dense feature extraction, and SAM…

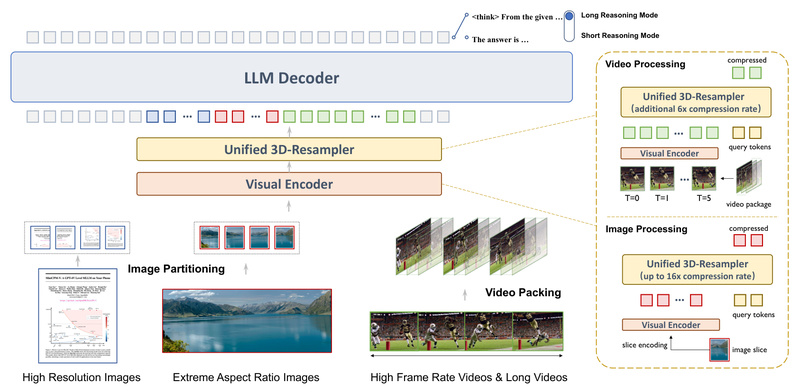

MiniCPM-V 4.5: GPT-4o-Level Vision Intelligence in an 8B Open-Source Model for Real-World Multimodal Tasks

Multimodal Large Language Models (MLLMs) promise to transform how machines understand images, videos, and text—but most top-performing models come with…