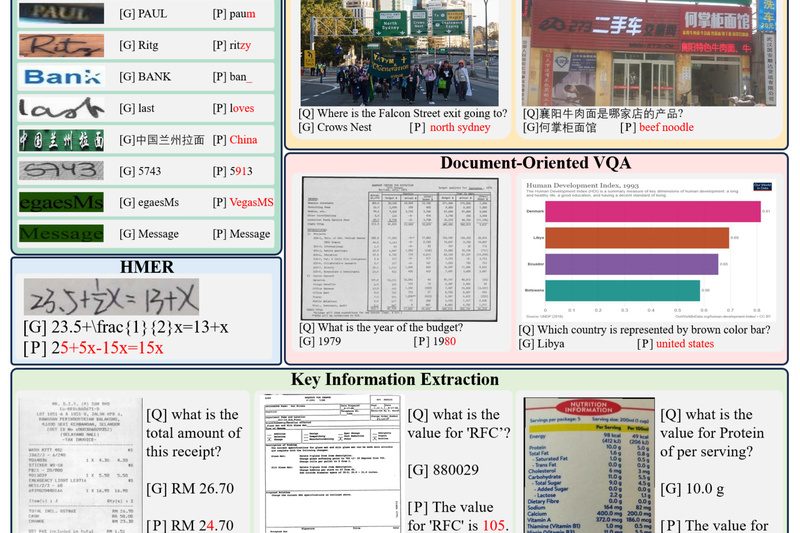

Large Multimodal Models (LMMs) like GPT-4V and Gemini promise powerful vision-language understanding—but how well do they actually read text in…

Visual Question Answering

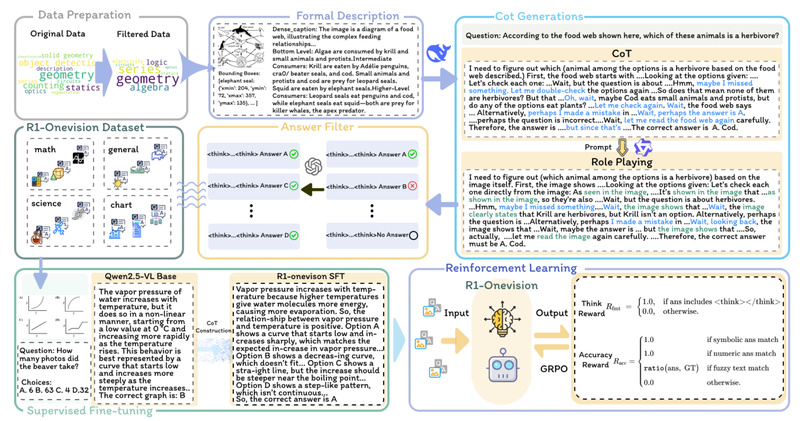

R1-Onevision: Solve Complex Visual Reasoning Problems with Step-by-Step Multimodal AI

In today’s AI landscape, most multimodal models can describe what’s in an image—but few can reason through it. If your…

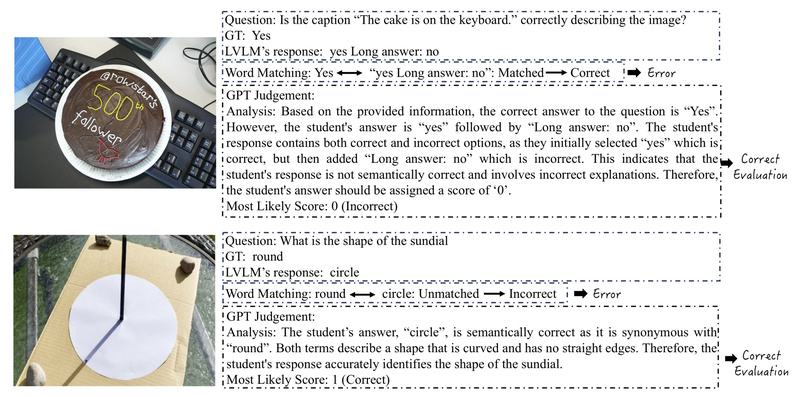

TinyLVLM-eHub: Fast, Lightweight Evaluation for Large Vision-Language Models Without Heavy Compute

As Large Vision-Language Models (LVLMs) grow increasingly capable—and increasingly complex—evaluating their multimodal reasoning, perception, and reliability has become a significant…

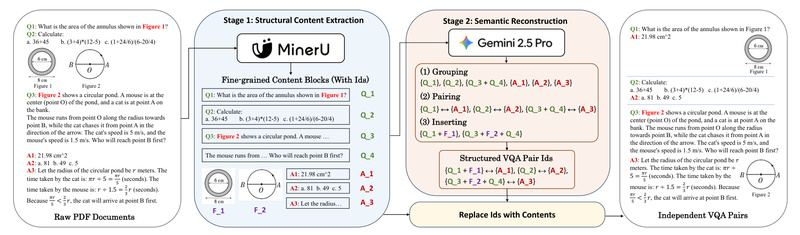

FlipVQA-Miner: Automatically Extract High-Quality Visual QA Pairs from Textbooks for Reliable LLM Training

Large Language Models (LLMs) and multimodal systems increasingly demand high-quality, human-authored supervision data—especially for tasks requiring reasoning, visual understanding, and…

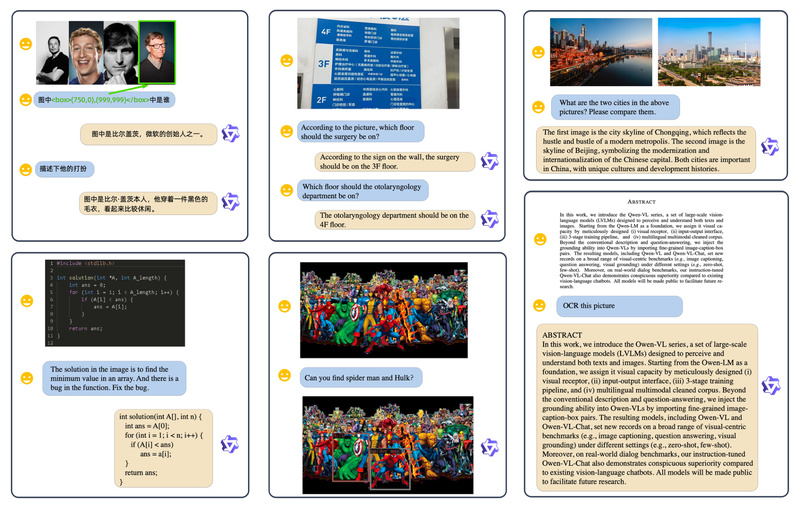

Qwen-VL: Open-Source Vision-Language AI for Text Reading, Object Grounding, and Multimodal Reasoning

In the rapidly evolving landscape of multimodal artificial intelligence, developers and technical decision-makers need models that go beyond basic image…

Video-LLaVA: One Unified Model for Both Image and Video Understanding, No More Modality Silos

If you’re evaluating vision-language models for a project that involves both images and videos, you’ve probably faced a frustrating trade-off:…

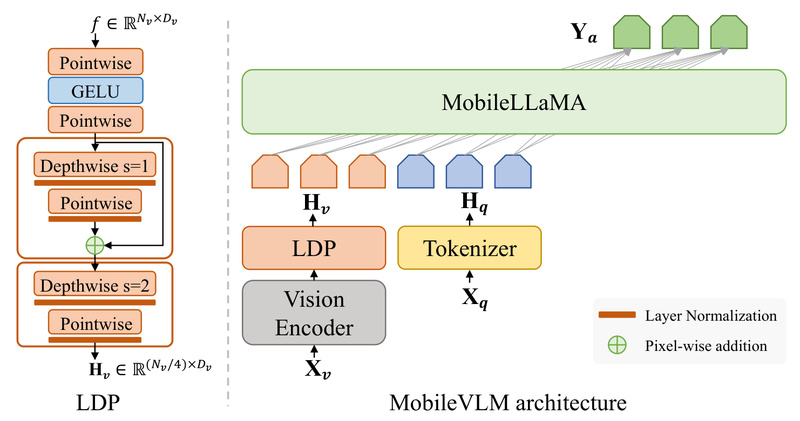

MobileVLM: High-Performance Vision-Language AI That Runs Fast and Privately on Mobile Devices

MobileVLM is a purpose-built vision-language model (VLM) engineered from the ground up for on-device deployment on smartphones and edge hardware.…

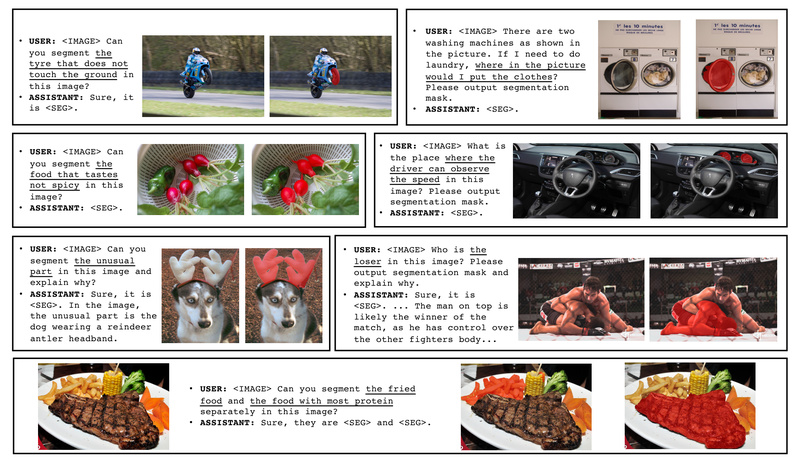

LISA: Segment Anything by Understanding What You *Really* Mean

Imagine asking a computer vision system to “segment the object that makes the woman stand higher” or “show me the…

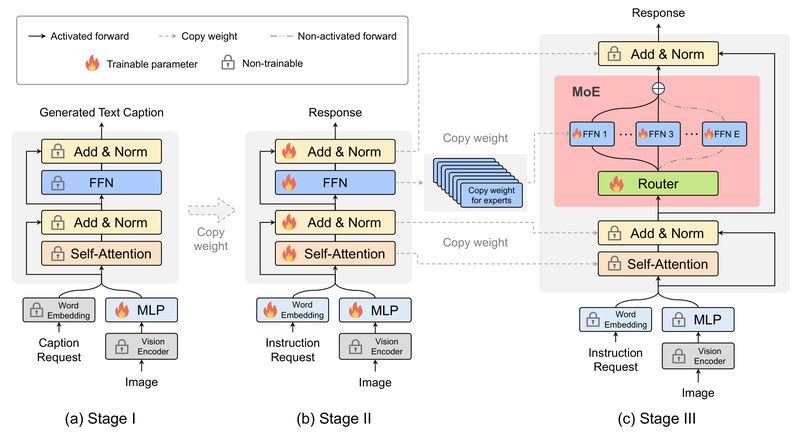

MoE-LLaVA: High-Performance Vision-Language Understanding with Sparse, Efficient Inference

MoE-LLaVA (Mixture of Experts for Large Vision-Language Models) redefines efficiency in multimodal AI by delivering performance that rivals much larger…

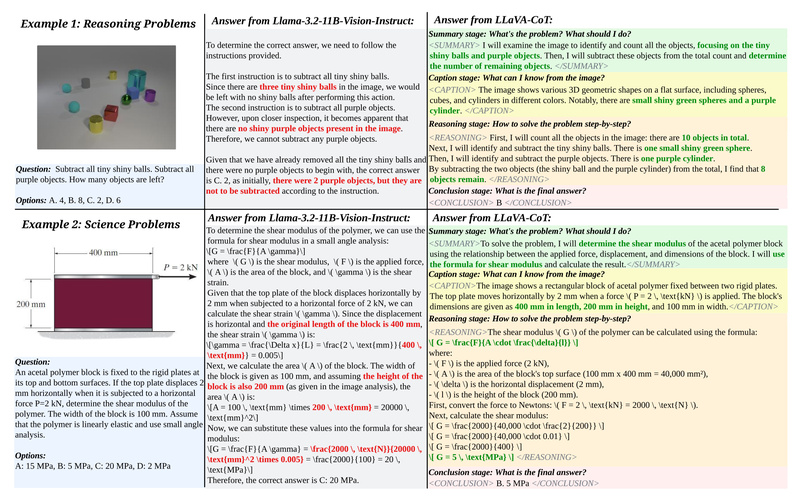

LLaVA-CoT: Step-by-Step Visual Reasoning for Reliable, Explainable Multimodal AI

Most vision-language models (VLMs) today can describe what’s in an image—but they often falter when asked to reason about it.…