Processing Thai text presents unique challenges for developers and data scientists. Unlike English and many other languages, Thai is written…

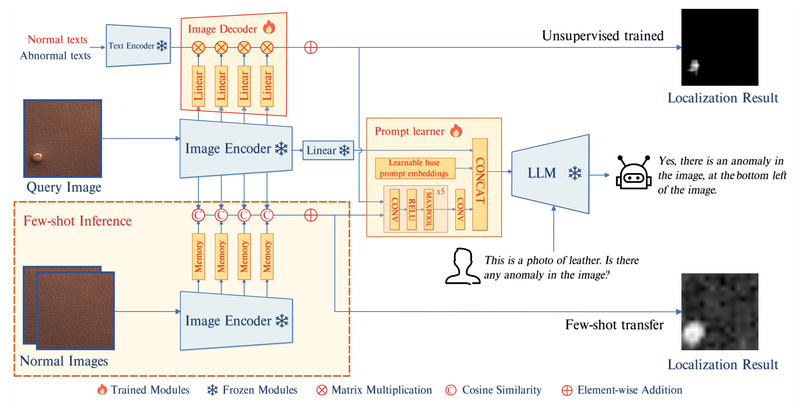

AnomalyGPT: Industrial Anomaly Detection Without Manual Thresholds or Labeled Anomalies

In industrial quality control, detecting defects—like cracks in concrete, scratches on metal, or deformities in packaged goods—is critical. Yet traditional…

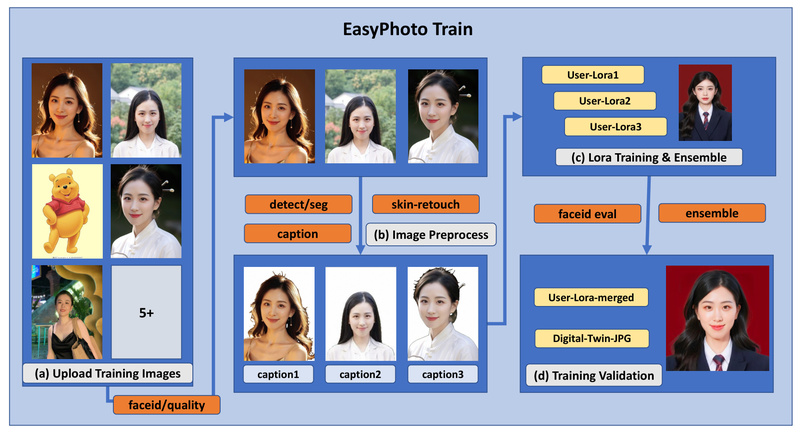

EasyPhoto: Generate Realistic, Identity-Preserving AI Portraits from Just 5–20 Photos

In today’s fast-paced digital world, creating high-quality, personalized photos—whether for professional headshots, marketing campaigns, or custom avatars—often requires photography sessions,…

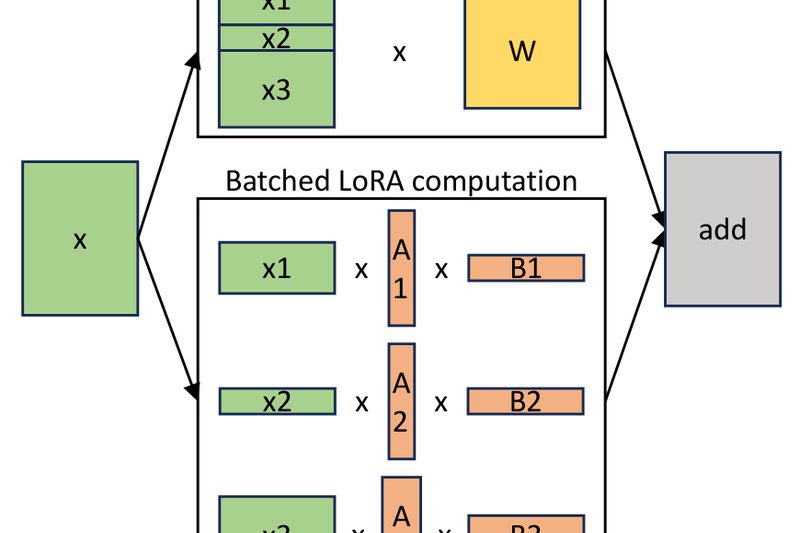

S-LoRA: Serve Thousands of Task-Specific LLMs Efficiently on a Single GPU

Deploying dozens—or even thousands—of fine-tuned large language models (LLMs) has traditionally been a costly and complex endeavor. Each adapter typically…

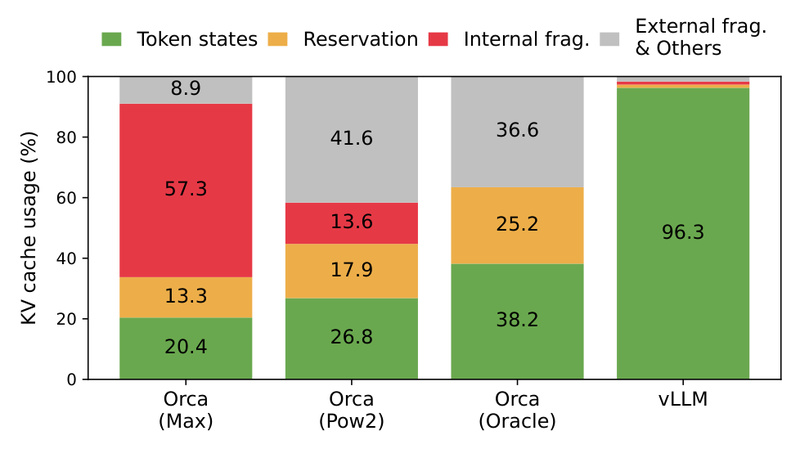

vLLM: High-Throughput, Memory-Efficient LLM Serving for Real-World Applications

If you’re building or scaling a system that relies on large language models (LLMs)—whether for chatbots, embeddings, multimodal reasoning, or…

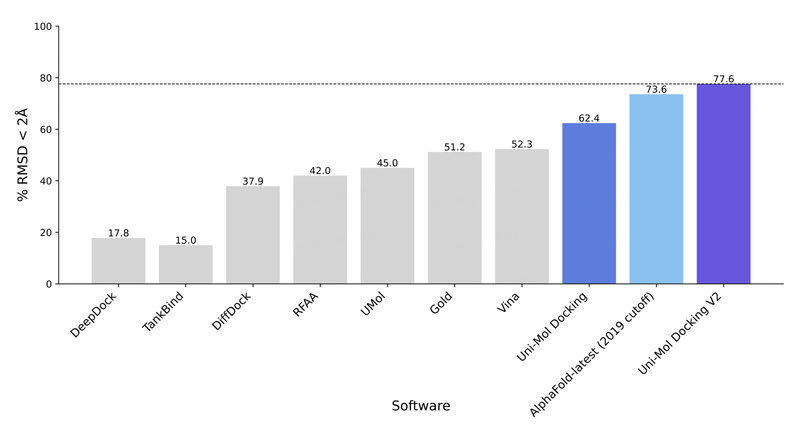

Uni-Mol: High-Accuracy 3D Molecular Modeling for Realistic Drug Discovery and Virtual Screening

In the rapidly evolving field of computational drug discovery, one of the most persistent challenges is accurately predicting how small…

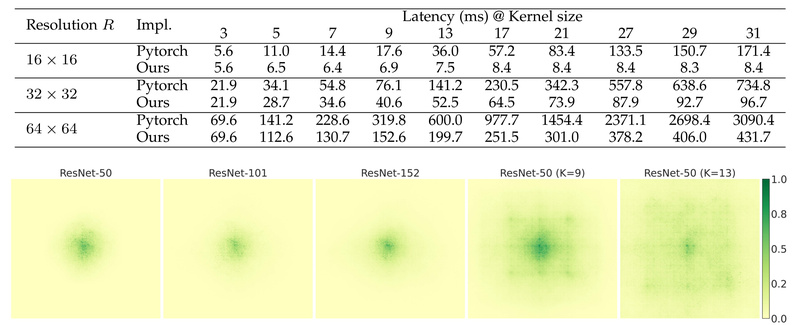

UniRepLKNet: A Universal Large-Kernel ConvNet for Faster, Stronger, and Truly Multimodal AI

In the era of Vision Transformers and increasingly complex multimodal architectures, convolutional neural networks (ConvNets) have often been written off…

FluxMusic: High-Quality Text-to-Music Generation with Faster, More Controllable Rectified Flow Transformers

FluxMusic represents a significant step forward in the field of AI-driven audio synthesis—specifically for generating music directly from natural language…

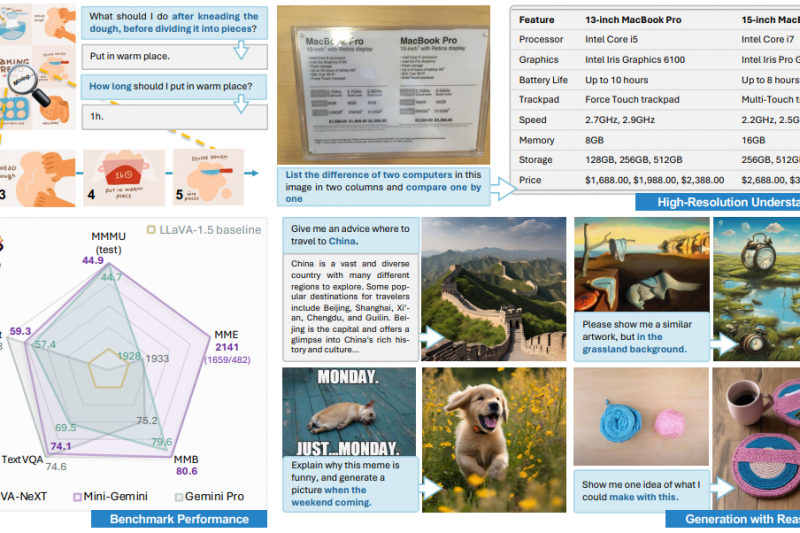

Mini-Gemini: Close the Gap with GPT-4V and Gemini Using Open, High-Performance Vision-Language Models

In today’s AI landscape, multimodal systems that understand both images and language are no longer a luxury—they’re a necessity. Yet,…

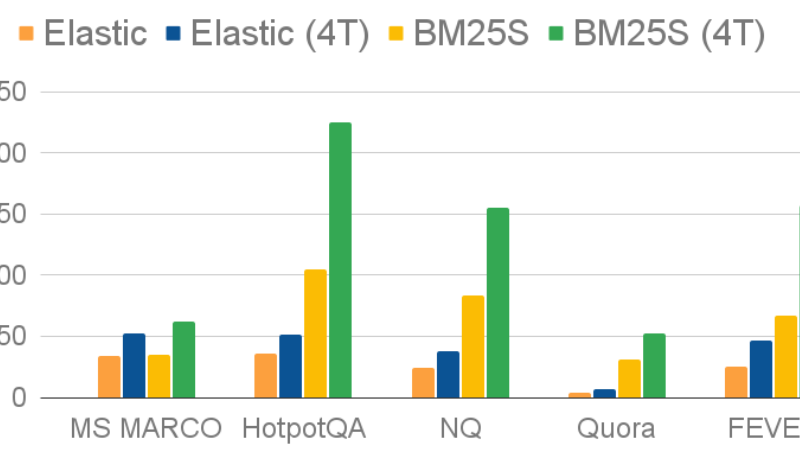

BM25S: Ultrafast Lexical Search in Pure Python—No Java, No PyTorch, Just Speed

In today’s world of AI-powered search and retrieval, speed, simplicity, and low resource usage are non-negotiable—especially during prototyping, research, or…