Training large language models (LLMs) on long sequences—whether for document-level instruction tuning, multi-modal reasoning, or complex alignment tasks—has long been bottlenecked by GPU memory limits. While parameter-efficient methods like LoRA help, they often fall short when full-parameter fine-tuning or alignment (e.g., Supervised Fine-Tuning or Direct Preference Optimization) is required for high-quality results.

Enter 360-LLaMA-Factory, an open-source, plug-and-play extension of the widely adopted LLaMA-Factory framework. It introduces sequence parallelism (SP)—a technique that splits long input sequences across multiple GPUs during training—without altering your existing data pipelines, training scripts, or model configurations. When SP is disabled, 360-LLaMA-Factory behaves identically to the original LLaMA-Factory; when enabled, it unlocks the ability to train on sequences of hundreds of thousands of tokens using the same familiar interface.

Developed by 360Zhinao and already adopted in production-grade models like Light-R1 and TinyR1, 360-LLaMA-Factory brings industrial-grade long-context post-training capabilities to researchers and engineers who want correctness, simplicity, and scalability—without the overhead of framework migration or custom engineering.

Why Sequence Parallelism Matters for Post-Training

Most LLM post-training workflows—SFT, DPO, and their variants—are not designed to handle sequences beyond ~32k tokens on standard GPU clusters. Attempts to scale further often hit hard memory walls, even with gradient checkpointing and ZeRO-3 offloading.

Sequence parallelism addresses this by distributing the sequence dimension (not just parameters or batches) across devices. This reduces per-GPU activation memory and enables training on much longer contexts. However, integrating SP correctly into post-training is non-trivial: it requires synchronized attention computation, proper loss aggregation across devices, and careful data preprocessing to align token splits with parallel groups.
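To see why loss aggregation needs care, here is a minimal pure-Python sketch. It is not code from 360-LLaMA-Factory: the helper names are hypothetical, the "ranks" are simulated with plain lists, and the two `sum` calls stand in for what would be an all-reduce on a real cluster. It shows that averaging each rank's local mean gives the wrong answer when shards hold different numbers of supervised tokens, while summing losses and token counts first reproduces the single-device loss.

```python
IGNORE_INDEX = -100  # label value marking tokens that are excluded from the loss


def shard(seq, sp_size):
    """Split a list into sp_size contiguous chunks of equal length."""
    assert len(seq) % sp_size == 0, "pad the sequence to a multiple of sp_size first"
    n = len(seq) // sp_size
    return [seq[i * n:(i + 1) * n] for i in range(sp_size)]


def local_sum_and_count(token_losses, labels):
    """Per-rank partial result: summed loss and number of supervised tokens."""
    kept = [loss for loss, y in zip(token_losses, labels) if y != IGNORE_INDEX]
    return sum(kept), len(kept)


def sp_mean_loss(token_losses, labels, sp_size):
    """Token-weighted mean over all ranks (sums simulate an all-reduce)."""
    parts = [
        local_sum_and_count(l, y)
        for l, y in zip(shard(token_losses, sp_size), shard(labels, sp_size))
    ]
    total = sum(p[0] for p in parts)
    count = sum(p[1] for p in parts)
    return total / max(count, 1)


def naive_mean_of_means(token_losses, labels, sp_size):
    """Incorrect aggregation: average the per-rank means, ignoring token counts."""
    parts = [
        local_sum_and_count(l, y)
        for l, y in zip(shard(token_losses, sp_size), shard(labels, sp_size))
    ]
    return sum(s / max(c, 1) for s, c in parts) / sp_size


# Toy example: the prompt (first three tokens) is masked out of the loss.
losses = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
labels = [IGNORE_INDEX] * 3 + [7] * 5

print(sp_mean_loss(losses, labels, sp_size=1))         # 6.0 (single device)
print(sp_mean_loss(losses, labels, sp_size=2))         # 6.0 (matches)
print(naive_mean_of_means(losses, labels, sp_size=2))  # 5.25 (does not match)
```

The mismatch grows with the imbalance between shards, which is common in SFT data where a long prompt is masked and only the response is supervised.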

360-LLaMA-Factory solves these challenges out of the box.

Key Features That Make It Stand Out

Seamless Plug-and-Play Integration

You don’t need to rewrite your training loop or data loader. Simply replace the original LLaMA-Factory codebase with 360-LLaMA-Factory, and your existing YAML configs or command-line scripts will work unchanged. By default, it operates exactly like LLaMA-Factory. To enable SP, just add two lines:

    sequence_parallel_size: 4
    sequence_parallel_mode: "zigzag-ring"  # or "ulysses"

That’s it—no model conversion, no pipeline overhaul.

Dual SP Backends: Zigzag-Ring and Ulysses

360-LLaMA-Factory supports two mature sequence parallelism strategies:

- Zigzag-ring attention (default): Built on `ring-flash-attn`, optimized for low communication overhead in ring-based GPU topologies.

- DeepSpeed Ulysses: An all-to-all approach that shards the input evenly along the sequence dimension, then redistributes it so that each GPU runs attention over the full sequence for a subset of attention heads.

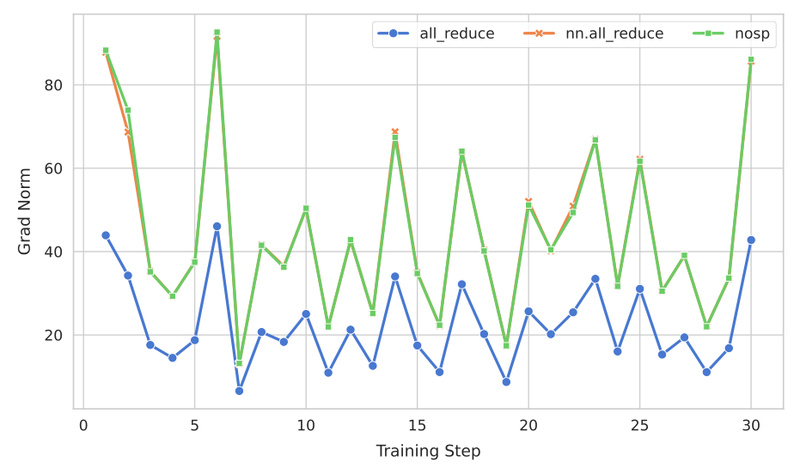

Both have been rigorously validated for correctness—loss curves with SP=1 vs. SP>1 overlap nearly perfectly—and benchmarked for speed parity (e.g., ~15 minutes for 64 steps on a 7B model with 28k tokens).
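The "zigzag" in zigzag-ring refers to how token positions are assigned to GPUs. The sketch below illustrates the general idea in pure Python; the function names are hypothetical, and it is an assumption that 360-LLaMA-Factory's preprocessing uses exactly this layout. Under a causal mask, later tokens attend to more keys, so a contiguous split leaves the last GPU with far more work than the first. Pairing an early block with a late block on each GPU evens this out.

```python
def contiguous_chunks(seq_len, sp_size):
    """Rank r gets one contiguous block of token positions."""
    assert seq_len % sp_size == 0
    n = seq_len // sp_size
    return [list(range(r * n, (r + 1) * n)) for r in range(sp_size)]


def zigzag_chunks(seq_len, sp_size):
    """Cut the sequence into 2*sp_size blocks; rank r gets block r
    and its mirror block 2*sp_size - 1 - r."""
    assert seq_len % (2 * sp_size) == 0
    n = seq_len // (2 * sp_size)
    blocks = [list(range(i * n, (i + 1) * n)) for i in range(2 * sp_size)]
    return [blocks[r] + blocks[2 * sp_size - 1 - r] for r in range(sp_size)]


def causal_work(positions):
    """Count (query, key) pairs under a causal mask:
    the token at position p attends to p + 1 keys."""
    return sum(p + 1 for p in positions)


seq_len, sp_size = 16, 4
print([causal_work(c) for c in contiguous_chunks(seq_len, sp_size)])  # [10, 26, 42, 58]
print([causal_work(c) for c in zigzag_chunks(seq_len, sp_size)])      # [34, 34, 34, 34]
print(zigzag_chunks(seq_len, sp_size)[0])                             # [0, 1, 14, 15]
```

With the contiguous split the slowest rank does almost six times the work of the fastest; with the zigzag split every rank does the same amount.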

Full-Parameter Training at Scale

Unlike many SP implementations limited to LoRA or pretraining, 360-LLaMA-Factory enables full-parameter SFT and DPO on long sequences. Benchmarks show:

- 210k tokens on Qwen2.5-7B with 8×A800 GPUs (SP=8)

- 84k tokens for full-parameter DPO on the same 7B model

- 46k–86k tokens for 72B models, depending on SP size

This makes it uniquely suited for tasks where parameter efficiency isn’t enough—e.g., when aligning frontier models where every weight matters.
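A quick back-of-the-envelope calculation shows what these numbers mean per device. The helper below is a hypothetical sketch, not part of the framework; it assumes that "210k" means 210,000 tokens and that GPUs not used for sequence parallelism form data-parallel replicas, which is the usual way the two are combined.

```python
def sp_layout(world_size, sp_size, cutoff_len):
    """Return (data-parallel group count, tokens held per GPU)."""
    if world_size % sp_size != 0:
        raise ValueError("sp_size must divide the total GPU count evenly")
    dp_size = world_size // sp_size
    tokens_per_gpu = -(-cutoff_len // sp_size)  # ceiling division
    return dp_size, tokens_per_gpu


print(sp_layout(world_size=8, sp_size=8, cutoff_len=210_000))  # (1, 26250)
print(sp_layout(world_size=8, sp_size=4, cutoff_len=128_000))  # (2, 32000)
```

In the 210k-token case each GPU holds activations for roughly 26k tokens, which is in the range that a single device can handle without SP.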

Minimal Code Changes, Maximum Modularity

The SP integration touches only three core components—model loading, data preprocessing, and loss computation—and does so through clean decorators and monkey-patching. This ensures maintainability and easy upstreaming (the team is actively working to merge SP into the main LLaMA-Factory repo).

Ideal Use Cases

360-LLaMA-Factory shines when you need:

- Long-document instruction tuning: Fine-tuning on legal contracts, scientific papers, or codebases exceeding 100k tokens.

- Multi-modal LLM alignment: Processing image-text sequences from models like Qwen-VL, where combined token lengths easily surpass 50k.

- High-fidelity DPO: Running full-parameter DPO on reasoning-heavy datasets (e.g., AIMO math benchmarks) without approximations.

- Rapid prototyping at scale: Testing long-context hypotheses without investing in new infrastructure or learning a new framework.

If your work involves models from 7B to 72B parameters and sequences beyond 32k tokens, this tool removes a major scalability barrier.

How It Solves Real-World Pain Points

Before 360-LLaMA-Factory, practitioners faced tough trade-offs:

- Switch frameworks (e.g., to Megatron-LM), requiring tedious model conversion and abandoning Hugging Face compatibility.

- Use buggy or unmaintained SP implementations (e.g., in XTuner or Swift), risking incorrect loss computation in DPO.

- Stick with short contexts, sacrificing task fidelity.

360-LLaMA-Factory eliminates these compromises. It runs natively in the Hugging Face ecosystem, inherits LLaMA-Factory’s rich support for SFT/DPO variants, and has been battle-tested in internal and external projects—including Kaggle competition models and enterprise training pipelines.

Getting Started in Practice

You can adopt 360-LLaMA-Factory in two ways:

Fresh Install

    conda create -n 360-llama-factory python=3.11 -y
    conda activate 360-llama-factory
    git clone https://github.com/Qihoo360/360-LLaMA-Factory.git
    cd 360-LLaMA-Factory
    pip install -e ".[torch,metrics,deepspeed]"

Incremental Upgrade

If you already use LLaMA-Factory:

    pip install --no-deps ring-flash-attn flash-attn
    git clone https://github.com/Qihoo360/360-LLaMA-Factory.git
    # Replace your llamafactory directory or launch directly from src/

Then, in your training config:

    cutoff_len: 128000          # your desired sequence length
    sequence_parallel_size: 4   # must divide total GPU count evenly

The framework automatically pads, shifts, and splits your data across the SP group. No changes to your dataset format are needed.
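The pad, shift, and split steps can be sketched in a few lines of pure Python. This is an illustration under stated assumptions, not the framework's actual preprocessing: the function names and `PAD_ID` value are hypothetical, and a plain contiguous split is used for readability (a zigzag layout would need padding to a multiple of `2 * sp_size`). The key point is that labels are shifted before splitting, so the target for the last token on one GPU, which is the first token on the next GPU, is already stored locally.

```python
IGNORE_INDEX = -100  # label value excluded from the loss
PAD_ID = 0           # hypothetical padding token id


def pad_to_multiple(values, multiple, fill):
    """Right-pad a list so its length is a multiple of `multiple`."""
    extra = (-len(values)) % multiple
    return values + [fill] * extra


def shift_labels(labels):
    """Label at position t becomes the token at t + 1;
    the final position has no target."""
    return labels[1:] + [IGNORE_INDEX]


def prepare_for_sp(input_ids, labels, sp_size):
    """Pad, pre-shift labels, then give each rank a contiguous slice."""
    ids = pad_to_multiple(input_ids, sp_size, PAD_ID)
    lab = shift_labels(pad_to_multiple(labels, sp_size, IGNORE_INDEX))
    n = len(ids) // sp_size
    return [(ids[r * n:(r + 1) * n], lab[r * n:(r + 1) * n]) for r in range(sp_size)]


# Two prompt tokens (masked) followed by four supervised response tokens.
input_ids = [11, 12, 13, 14, 15, 16]
labels = [IGNORE_INDEX, IGNORE_INDEX, 13, 14, 15, 16]

for rank, (ids, lab) in enumerate(prepare_for_sp(input_ids, labels, sp_size=4)):
    print(rank, ids, lab)
# 0 [11, 12] [-100, 13]
# 1 [13, 14] [14, 15]
# 2 [15, 16] [16, -100]
# 3 [0, 0] [-100, -100]
```

Rank 0 ends with token 12, whose target 13 lives on rank 1; because of the pre-shift, rank 0 can compute that loss term without any communication.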

Limitations and Considerations

While powerful, 360-LLaMA-Factory has current boundaries:

- `attention_dropout` is disabled during SP (due to `ring-flash-attn` limitations). If your model config includes it, the system resets it to zero.

- SP is validated only for SFT and DPO, not yet for reward modeling (RM), KTO, or pretraining.

- Peak performance may require complementary optimizations (e.g., Liger kernels, DPO logits precomputation, or removing `logits.float()` casts).

These are active areas of development, with community contributions welcome.

How It Compares to Alternatives

| Framework | SP in SFT | SP in DPO | Hugging Face Native | Ease of Use |
|---|---|---|---|---|
| 360-LLaMA-Factory | ✅ | ✅ | ✅ | ⭐⭐⭐⭐⭐ |
| OpenRLHF | ✅ | ✅ | Partial | ⭐⭐⭐ |
| XTuner / Swift | ❌/⚠️ | ❌/⚠️ | ✅ | ⭐⭐ |
| Megatron-LM | ✅ | ❌ | ❌ | ⭐ |
| EasyContext | ✅ | ❌ | ✅ | ⭐⭐ |

360-LLaMA-Factory stands out for correctness, ecosystem fit, and zero-friction adoption. For teams already using LLaMA-Factory, it’s the lowest-risk path to long-context post-training.

Summary

360-LLaMA-Factory delivers industrial-strength sequence parallelism as a drop-in upgrade to one of the most popular LLM post-training frameworks. It empowers practitioners to scale SFT and DPO to unprecedented sequence lengths—210k tokens and beyond—without rewriting code, switching ecosystems, or compromising on model quality. With verified correctness, dual SP backends, and seamless integration, it’s the pragmatic choice for anyone pushing the boundaries of long-context LLM alignment.

If your next project demands full-parameter training on ultra-long sequences, 360-LLaMA-Factory removes the infrastructure barrier—so you can focus on what matters: your data, your model, and your results.