If you’re building applications that require real-time 3D facial understanding—like video conferencing enhancements, augmented reality filters, biometric verification, or character animation—you know how hard it is to balance speed, accuracy, and stability. Most state-of-the-art 3D face alignment models demand powerful GPUs or sacrifice responsiveness for precision.

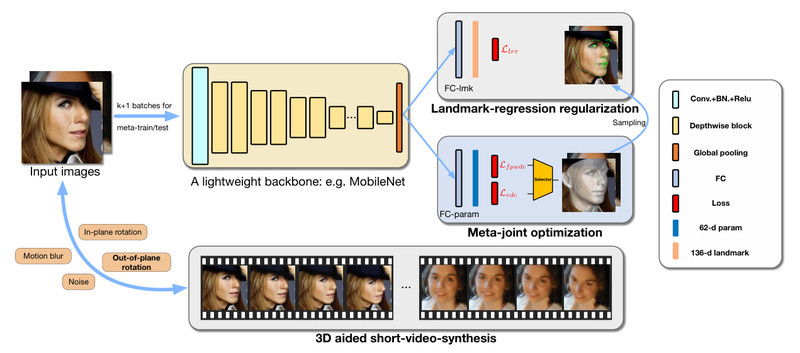

Enter 3DDFA_V2: a lightweight, CPU-friendly framework that delivers dense 3D face alignment at over 50 frames per second on a single CPU core, without compromising on accuracy or temporal stability in video sequences. Built on the ECCV 2020 paper "Towards Fast, Accurate and Stable 3D Dense Face Alignment", 3DDFA_V2 is designed explicitly for real-world deployment—especially in resource-constrained or latency-sensitive environments.

Unlike earlier approaches that prioritize benchmark scores over deployability, 3DDFA_V2 optimizes the entire pipeline: from face detection (using the fast FaceBoxes detector) to 3D Morphable Model (3DMM) parameter regression and dense mesh reconstruction. The result? A practical tool that developers and engineers can plug into live systems with minimal overhead.

Key Capabilities That Solve Real Engineering Problems

Blazing-Fast Inference with ONNX Acceleration

3DDFA_V2 leverages ONNX Runtime to minimize CPU latency. With the default MobileNet-V1 backbone, regressing full 3DMM parameters takes just 1.35ms per face on a modern laptop CPU when using 4 threads. Even the ultra-light MobileNet ×0.5 variant runs in under 0.5ms. This makes it feasible to integrate 3D face tracking into CPU-only pipelines—critical for edge devices, embedded systems, or cloud services where GPU costs are prohibitive.

Rich, Actionable Output Formats

The framework doesn’t just give you 3D coordinates. It supports a wide range of visual and geometric outputs, all controllable via simple command-line flags:

- 2D sparse & dense landmarks for facial feature localization

- Full 3D mesh with over 38,000 vertices

- Depth maps and PNCC (Projected Normalized Coordinate Code) for surface analysis

- Head pose estimation (yaw, pitch, roll)

- UV texture mapping for retexturing or avatar generation

- Export to standard .ply and .obj formats for 3D modeling pipelines

These features turn raw alignment into actionable data—whether you’re building an AR try-on app or analyzing facial micro-expressions in behavioral research.

Video-Aware Stability Through Temporal Smoothing

One of 3DDFA_V2’s standout innovations is its focus on stability across video frames. The authors introduce a virtual synthesis technique that simulates in-plane and out-of-plane head motion from a single image during training. This helps the model generalize better to natural head movement, reducing jitter and drift in live tracking.

For even smoother results, the repo includes demo_video_smooth.py, which uses look-ahead frames to stabilize alignment—ideal for video post-processing or interactive demos.

Ideal Use Cases

3DDFA_V2 shines in scenarios where real-time performance, low hardware requirements, and frame-to-frame consistency matter:

- Video conferencing: Add virtual backgrounds, gaze correction, or animated avatars without a GPU.

- Mobile and edge AR: Power face filters or makeup simulation on smartphones or IoT devices.

- Biometric analytics: Track subtle facial movements for fatigue detection, attention monitoring, or emotion inference.

- Content creation: Rapidly convert webcam footage into animatable 3D face meshes for game engines or VFX.

- Academic prototyping: Test 3D face alignment hypotheses with a stable, well-documented baseline that runs on a laptop.

Because 3DDFA_V2 avoids heavy dependencies and runs end-to-end on CPU, it’s also well-suited for privacy-sensitive applications where data can’t be sent to cloud GPUs.

Getting Started Is Deliberately Simple

The project lowers the barrier to entry:

- Clone the repo and run a single build script (

build.sh) to compile optimized Cython renderers. - Launch any demo with intuitive flags—e.g.,

python3 demo.py -f input.jpg -o 3d --onnxgenerates a 3D face mesh. - Experiment instantly via the provided Google Colab notebook, which requires zero local setup.

Pre-trained models (MobileNet and MobileNet ×0.5 variants) are included, and the ONNX export is ready to use—just add --onnx to any command for accelerated inference.

For developers integrating 3DDFA_V2 into larger systems, the modular codebase separates face detection, parameter regression, and rendering—making it easy to swap components or extend functionality.

Practical Limitations to Consider

While 3DDFA_V2 excels in many real-world settings, it’s important to understand its constraints:

- Extreme poses: Alignment may fail when the head yaw exceeds 90 degrees, as the model wasn’t trained on such profiles.

- Rapid motion: Very fast head movements can break the alignment-based tracking, since the system doesn’t re-detect faces every frame by default.

- Closed eyes: Training data (300W-LP) contains few closed-eye samples, so eye landmark accuracy drops in those cases.

- Windows support: Building the Cython components on Windows requires manual intervention, though community workarounds exist.

These aren’t dealbreakers—but they do mean 3DDFA_V2 is best suited for frontal-to-mid-profile use cases with moderate motion, which covers the majority of consumer and enterprise applications.

Summary

3DDFA_V2 isn’t just another academic face alignment model. It’s a production-ready toolkit engineered for speed, stability, and practicality. By delivering dense 3D face reconstruction at CPU-friendly speeds and supporting a rich set of outputs, it removes a major bottleneck for developers building real-time facial understanding systems. If your project demands low-latency 3D face analysis without GPU dependency, 3DDFA_V2 is one of the most efficient, well-maintained options available today.