Building reliable software from natural language prompts remains a major challenge—even for today’s most capable large language models (LLMs). While basic chat-based agents can handle simple tasks, they often fail on complex projects due to inconsistent logic, hallucinated specifications, or poorly structured code. This is where MetaGPT changes the game.

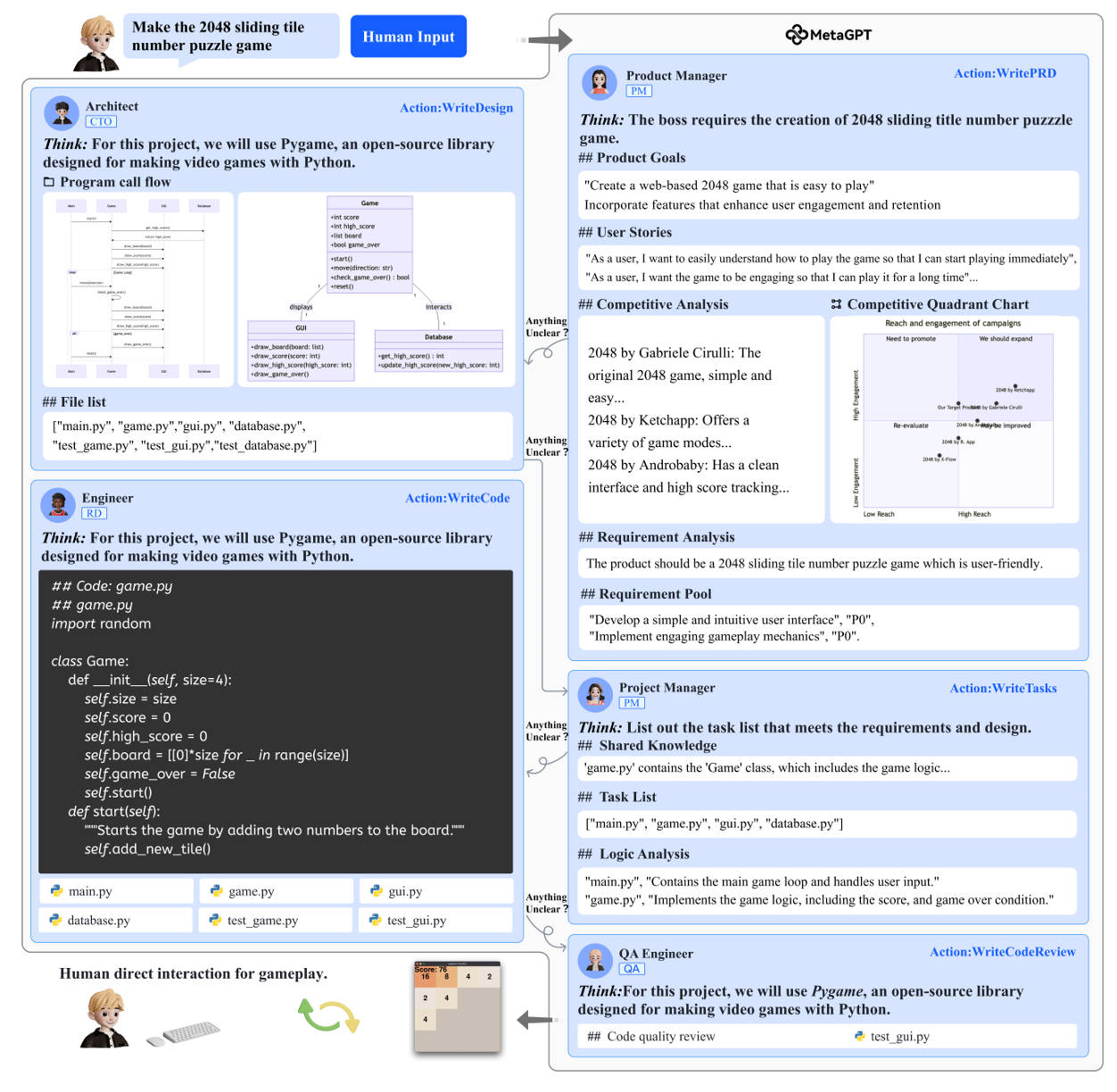

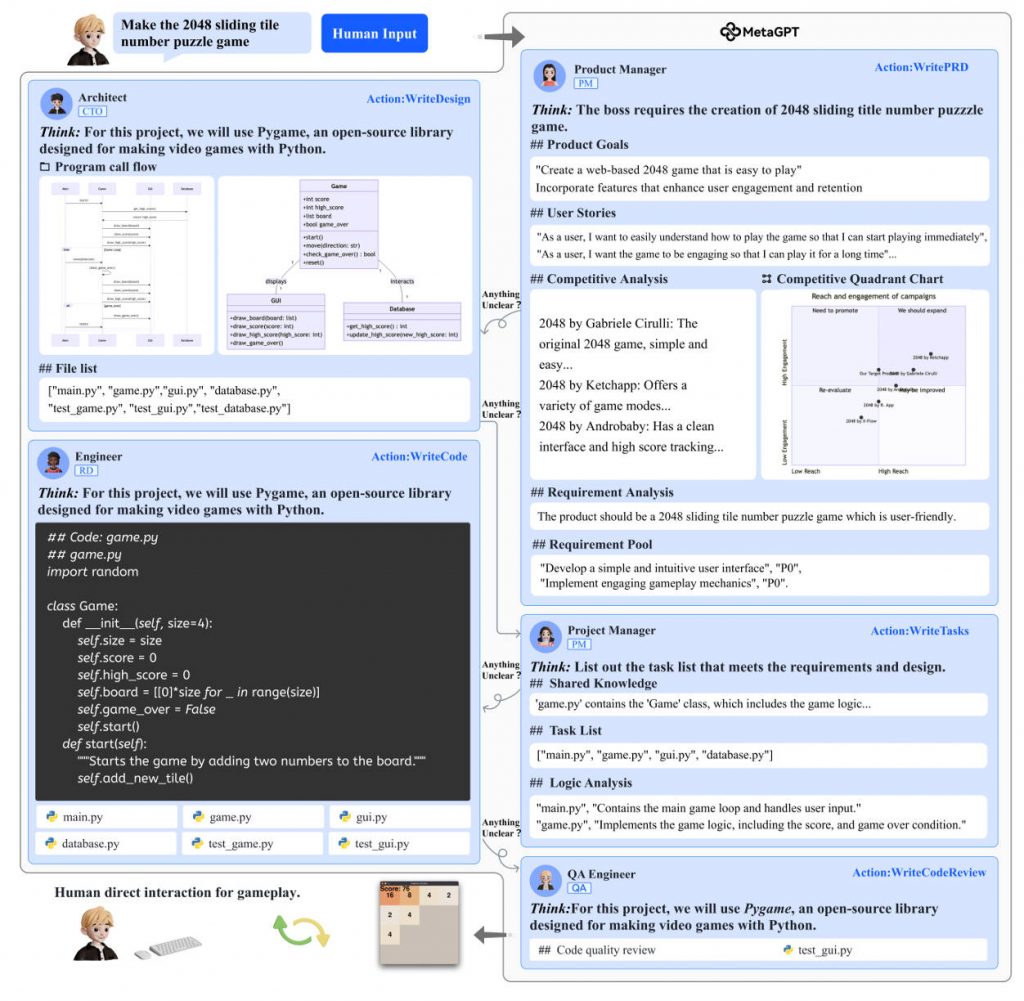

MetaGPT is an open-source, multi-agent framework that simulates an entire software company—complete with product managers, architects, engineers, and project managers—working together under standardized workflows. Instead of chaining generic LLM calls, MetaGPT embeds Standardized Operating Procedures (SOPs) into agent interactions, enabling structured collaboration that mimics real-world software development. The result? From a single-line requirement like “Create a 2048 game,” MetaGPT can generate coherent user stories, API designs, data structures, clean code, and technical documentation—dramatically reducing errors and fragmentation common in naive multi-agent systems.

Designed for developers, researchers, and technical teams, MetaGPT brings industrial-grade software engineering practices into the world of AI agents, making it uniquely suited for tasks that demand consistency, traceability, and role-based coordination.

Why MetaGPT Solves Real Problems in AI-Powered Development

Fragmentation and Hallucination in Existing Multi-Agent Systems

Most LLM-based agent frameworks treat problem-solving as a freeform dialogue. While this works for Q&A or brainstorming, it falls apart when building production-grade software. Without clear roles or verification steps, agents may contradict each other, skip critical design phases, or output code that doesn’t match requirements. These cascading hallucinations undermine trust and usability.

MetaGPT directly addresses this by enforcing structured workflows grounded in real software development SOPs. Each agent plays a well-defined role—e.g., the product manager defines user stories, the architect drafts system design, and engineers implement modules—while intermediate outputs are reviewed and validated before proceeding. This “assembly line” approach ensures logical continuity and reduces compounding errors.
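
The assembly-line idea can be pictured with a small, framework-independent sketch. The code below does not use MetaGPT's API: the `Stage` type, the stand-in role functions, and the validators are all hypothetical names invented for illustration. Each stage builds on the previous artifact, and the pipeline stops as soon as a validator rejects an output, so a faulty artifact cannot propagate downstream.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical types for illustration only; this is not MetaGPT's API.
Artifact = dict[str, object]


@dataclass
class Stage:
    role: str                                # e.g. "ProductManager"
    produce: Callable[[Artifact], Artifact]  # builds this role's artifact from the previous one
    validate: Callable[[Artifact], bool]     # gate that must pass before the next role starts


def run_pipeline(requirement: str, stages: list[Stage]) -> Artifact:
    """Run the stages in order, stopping at the first artifact that fails validation."""
    artifact: Artifact = {"requirement": requirement}
    for stage in stages:
        artifact = stage.produce(artifact)
        if not stage.validate(artifact):
            raise ValueError(f"{stage.role} produced an invalid artifact")
    return artifact


# Stand-in role functions; a real system would call an LLM here.
def write_user_stories(a: Artifact) -> Artifact:
    return {**a, "user_stories": [f"As a player, I can play: {a['requirement']}"]}


def draft_design(a: Artifact) -> Artifact:
    return {**a, "modules": ["board", "game_loop"]}


def implement(a: Artifact) -> Artifact:
    return {**a, "code": {m: f"# {m}.py" for m in a["modules"]}}


stages = [
    Stage("ProductManager", write_user_stories, lambda a: bool(a.get("user_stories"))),
    Stage("Architect", draft_design, lambda a: bool(a.get("modules"))),
    Stage("Engineer", implement, lambda a: set(a["code"]) == set(a["modules"])),
]

result = run_pipeline("Create a 2048 game", stages)
print(sorted(result["code"]))
```

The design choice worth noting is that validation lives on the stage rather than inside the producing function, so the check runs regardless of how the artifact was generated.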

Role-Based Collaboration with Human-Like Verification

Unlike generic chat agents, MetaGPT assigns domain-specific expertise to each role. The architect doesn’t just “write code”—it reasons about scalability and modularity. The engineer doesn’t just “implement”—it cross-checks against API contracts. This mirrors how human teams operate, but at AI speed. Crucially, agents verify intermediate artifacts, such as data schemas or interface definitions, before downstream tasks begin. This built-in quality control is absent in most open-source agent frameworks.
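
One way to picture the cross-check between generated code and an API contract is a static comparison of function signatures. The sketch below is an assumption about what such a check could look like, not MetaGPT's actual verification logic; the `contract` dictionary, the `generated` source string, and the `missing_or_mismatched` helper are hypothetical.

```python
import ast


def missing_or_mismatched(source: str, contract: dict[str, list[str]]) -> list[str]:
    """Return a problem description for every contract function that the
    source code does not define with exactly the expected parameter names."""
    tree = ast.parse(source)
    # Map each top-level function name to its positional parameter names.
    defined = {
        node.name: [arg.arg for arg in node.args.args]
        for node in tree.body
        if isinstance(node, ast.FunctionDef)
    }
    problems = []
    for name, params in contract.items():
        if name not in defined:
            problems.append(f"{name}: missing")
        elif defined[name] != params:
            problems.append(f"{name}: expected {params}, found {defined[name]}")
    return problems


# Hypothetical interface agreed on during the design phase.
contract = {"move": ["board", "direction"], "is_game_over": ["board"]}

# Hypothetical generated code with one wrong signature and one missing function.
generated = '''
def move(board):
    return board
'''

print(missing_or_mismatched(generated, contract))
```

An empty result means the code matches the contract; any entry in the list is a reason to send the artifact back before downstream work begins.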

Practical Use Cases for Engineers and Researchers

MetaGPT excels in scenarios where speed, coherence, and completeness matter more than one-off code snippets:

- Rapid Prototyping: Turn a product idea (“Build a todo app with user auth”) into a full-stack repo in minutes, complete with documentation and folder structure.

- Automated Technical Writing: Generate requirements documents, API specs, or architecture diagrams directly from high-level prompts.

- Data Analysis Automation: Use the built-in Data Interpreter role to run end-to-end data science workflows—load datasets, perform analysis, and render visualizations—via natural language commands.

- Educational & Research Exploration: Simulate team dynamics in software engineering studies or test workflow automation hypotheses without manual scripting.

These capabilities help teams bypass early-stage bottlenecks, especially in startups or research labs where resources are limited but velocity is critical.
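
The Data Interpreter use case listed above might be driven from Python roughly as follows. The import path and the `run` coroutine follow the example in the MetaGPT README and may differ between releases, so treat them as assumptions to verify; the `analyze` and `main` wrappers are hypothetical names. Running the analysis requires an installed `metagpt` package and a configured LLM provider.

```python
import asyncio


async def analyze(requirement: str) -> None:
    """Hand a natural-language data task to MetaGPT's Data Interpreter role."""
    # Imported inside the function so this module loads even without MetaGPT
    # installed. Assumption: import path as shown in the MetaGPT README.
    from metagpt.roles.di.data_interpreter import DataInterpreter

    interpreter = DataInterpreter()
    await interpreter.run(requirement)


def main() -> None:
    # Needs an installed metagpt package and a configured LLM provider.
    asyncio.run(analyze("Run data analysis on sklearn Iris dataset, include a plot"))
```

Calling `main()` starts the run; the request string is only an example and can be any data task phrased in natural language.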

Getting Started Is Simpler Than You Think

Despite its sophisticated architecture, MetaGPT is designed for easy adoption:

- Install with a single command (requires Python 3.9–3.11 and Node.js/pnpm):

pip install --upgrade metagpt

- Configure your LLM provider (OpenAI, Azure, Ollama, etc.) via ~/.metagpt/config2.yaml. Just add your API key and model choice (e.g., gpt-4-turbo).

- Run from the command line or as a Python library:

metagpt "Create a weather dashboard with real-time API"

Or programmatically:

# generate_repo lives in metagpt.software_company
from metagpt.software_company import generate_repo
repo = generate_repo("Build a snake game with Pygame")

The framework handles the rest—role assignment, task decomposition, and artifact generation—within a clean workspace directory.
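
As a sketch of the configuration step, the helper below writes a minimal `config2.yaml`. The key names under `llm` (`api_type`, `model`, `base_url`, `api_key`) are taken from the sample configuration in the MetaGPT README and should be checked against the installed version; `write_llm_config` is a hypothetical helper, and the API key shown in the usage note is a placeholder.

```python
from pathlib import Path

# Minimal config template. Assumption: key names follow the sample
# config2.yaml in the MetaGPT README; verify them for your version.
TEMPLATE = """\
llm:
  api_type: "{api_type}"
  model: "{model}"
  base_url: "{base_url}"
  api_key: "{api_key}"
"""


def write_llm_config(
    path: Path,
    api_key: str,
    model: str = "gpt-4-turbo",
    api_type: str = "openai",
    base_url: str = "https://api.openai.com/v1",
) -> Path:
    """Write a minimal LLM config file to `path`, creating parent directories."""
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(
        TEMPLATE.format(api_type=api_type, model=model, base_url=base_url, api_key=api_key),
        encoding="utf-8",
    )
    return path
```

For example, `write_llm_config(Path.home() / ".metagpt" / "config2.yaml", api_key="YOUR_API_KEY")` would target the location mentioned above. Note that the call overwrites any existing file at that path.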

Limitations and Realistic Expectations

MetaGPT is not a magic bullet. It depends on the quality of the underlying LLM, so performance varies with model choice (e.g., gpt-4-turbo yields better results than gpt-3.5-turbo). It also requires valid API keys and cannot function offline without a compatible local LLM setup (e.g., via Ollama).

While MetaGPT significantly improves output coherence, human review remains essential for production systems. Think of it as a “first draft” generator that accelerates ideation and scaffolding—not a replacement for QA, security audits, or deep architectural review.

Moreover, complex domain-specific logic (e.g., financial compliance or embedded systems) may still require manual refinement. But for standard web apps, data tools, or educational prototypes, MetaGPT delivers remarkable out-of-the-box value.

Summary

MetaGPT redefines what’s possible with multi-agent AI by embedding real-world software engineering discipline into LLM collaboration. By combining role-based agents, standardized workflows, and intermediate verification, it addresses the core pain points of fragmentation and inconsistency that plague simpler agent frameworks. For technical teams looking to prototype faster, automate documentation, or explore AI-powered development workflows, MetaGPT offers an open-source foundation that’s both powerful and surprisingly easy to adopt, with the caveat noted above that its output still needs human review before it reaches production.

Whether you’re a solo developer testing an idea or a research team studying agent coordination, MetaGPT turns natural language into structured, actionable software—with the rigor of a real engineering team.