Stereo matching—the task of finding corresponding pixels between left and right images to infer depth—is foundational to 3D vision systems used in autonomous vehicles, robotics, augmented reality, and industrial inspection. Yet despite years of algorithmic progress, practitioners face a persistent challenge: most stereo models are brittle outside the narrow environments they were trained on. Change the lighting, switch from urban streets to rural farmland, or move indoors, and performance often collapses.

Stereo Anything, introduced in the paper Stereo Anything: Unifying Zero-shot Stereo Matching with Large-Scale Mixed Data, directly addresses this generalization crisis. Instead of proposing another specialized architecture, it takes a bold, data-centric approach: unify diverse real-world labeled datasets with massive-scale synthetic stereo pairs generated from unlabeled monocular images. The result is a stereo model that works robustly across unseen domains—without any fine-tuning.

Built on the open-source OpenStereo framework (https://github.com/XiandaGuo/OpenStereo), Stereo Anything isn’t just a research concept—it’s a practical, well-engineered toolkit ready for real-world evaluation and deployment in academic settings.

Why Generalization in Stereo Matching Is So Hard

Traditional stereo models are typically trained on datasets like KITTI (urban driving) or Middlebury (indoor scenes). These datasets, while high-quality, cover limited visual conditions. A model trained solely on KITTI may fail catastrophically in foggy weather, at night, or in agricultural settings where texture, scale, and object categories differ drastically.

This domain gap arises because deep learning models latch onto dataset-specific cues—road layouts, car models, lighting patterns—rather than learning truly general correspondence principles. Without exposure to sufficient visual diversity during training, zero-shot transfer is unreliable.

Stereo Anything tackles this by rejecting the “one dataset, one model” paradigm. It asks: What if we train on everything available—and more?

The Data-Centric Breakthrough Behind Stereo Anything

Stereo Anything’s core innovation lies not in network design, but in training data composition. It combines two complementary sources:

- Curated real-world stereo datasets: Including KITTI, Middlebury, ETH3D, DrivingStereo, Argoverse, and more—covering urban, rural, indoor, aerial, and synthetic environments.

- Large-scale synthetic stereo pairs: Generated automatically from unlabeled monocular images (e.g., from web-scale image collections), using depth estimation and image warping techniques. This dramatically expands visual diversity without manual labeling.

By training on this heterogeneous mixture—over a dozen datasets and millions of synthetic pairs—Stereo Anything learns robust, domain-agnostic features for correspondence. The model sees so many variations in texture, geometry, lighting, and semantics that it stops overfitting to any single domain.

In extensive zero-shot evaluations across four public benchmarks (including KITTI, Middlebury, and ETH3D), Stereo Anything achieves state-of-the-art generalization, outperforming models trained on single datasets by significant margins—even without seeing target-domain data during training.

Practical Benefits for Engineers and Researchers

Stereo Anything isn’t delivered as a black-box model; it’s part of the OpenStereo codebase, which offers a mature, production-ready infrastructure for stereo research and development:

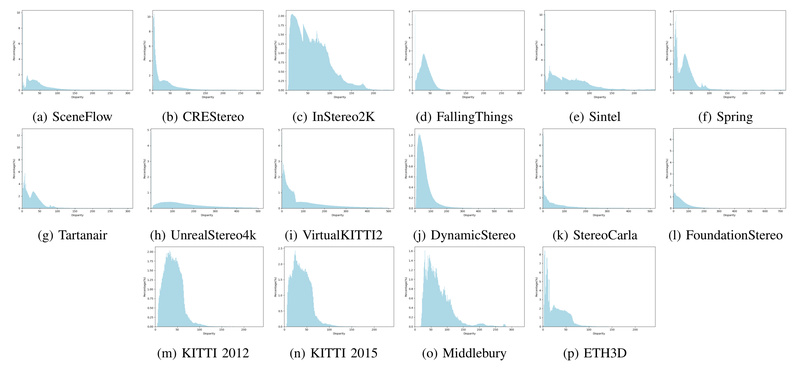

- Support for 15+ stereo datasets: From SceneFlow and Sintel to TartanAir and the newly released StereoCarla, making cross-dataset training and evaluation seamless.

- Reproduced SOTA models: Including PSMNet, GwcNet, RAFT-Stereo, and the project’s own FoundationStereo and LightStereo (ICRA 2025), all integrated with consistent APIs.

- Scalable training: Full support for Distributed Data Parallel (DDP) and Automatic Mixed Precision (AMP) to accelerate large-batch training on multi-GPU systems.

- Deployment-ready: TensorRT integration enables optimized inference for real-time applications.

- Clear diagnostics: Built-in TensorBoard logging and structured console output simplify debugging and performance tracking.

This ecosystem lowers the barrier to reproducing Stereo Anything’s results or adapting its methodology to new domains.

When Should You Use Stereo Anything?

Stereo Anything shines in scenarios where collecting labeled stereo data is expensive, slow, or impossible. Ideal use cases include:

- Deploying perception systems in new geographic regions (e.g., autonomous shuttles in Southeast Asia after training on European data).

- Operating under variable conditions: Rain, snow, dusk, or indoor-outdoor transitions where appearance shifts dramatically.

- Rapid prototyping in novel domains: Agricultural drones, warehouse robots, or underwater inspection—where no stereo benchmark exists yet.

- Academic research requiring strong zero-shot baselines: For evaluating domain adaptation, self-supervised learning, or cross-dataset generalization.

If your goal is a “one model fits most” stereo solution that avoids per-deployment retraining, Stereo Anything provides a compelling starting point.

How to Get Started

Getting started with Stereo Anything via OpenStereo is straightforward:

- Clone the repository: The codebase includes detailed setup instructions (

0.get_started.md). - Prepare datasets: Tutorials guide you through downloading and formatting supported datasets (15+ available).

- Configure training: Use YAML-based configs to mix real and synthetic data sources, choose a backbone (e.g., FoundationStereo), and enable DDP/AMP.

- Train or evaluate: Run zero-shot inference on unseen benchmarks without fine-tuning.

- Optimize for deployment: Export models to TensorRT for low-latency inference on edge devices.

The project is designed for extensibility—you can plug in your own data, models, or loss functions while leveraging the robust training pipeline.

Limitations and Considerations

While Stereo Anything significantly advances zero-shot stereo matching, users should note:

- Academic use only: The license explicitly prohibits commercial usage.

- Resource-intensive training: Scaling to mixed large-scale data requires substantial GPU memory and compute (though inference can be lightweight via TensorRT).

- Inherent stereo ambiguities remain: Like all stereo methods, it struggles in textureless regions (e.g., white walls), repetitive patterns, or highly reflective surfaces—though its diverse training helps mitigate these cases relative to narrow-domain models.

Summary

Stereo Anything redefines what’s possible in stereo matching by proving that data diversity—not just architectural novelty—drives real-world generalization. By unifying real and synthetic stereo data at scale, it delivers a model that works out-of-the-box across domains where traditional approaches fail.

For researchers and engineers in academia seeking a robust, flexible, and well-supported stereo solution that eliminates the need for per-scenario retraining, Stereo Anything—via the OpenStereo framework—offers a powerful, future-proof foundation.