In real-world computer vision systems—whether for autonomous vehicles, remote sensing, or robotic inspection—images rarely come from a single type of sensor. You might need to match a daytime RGB photo with a nighttime infrared image, align a depth map with a hand-drawn sketch, or fuse event camera data with conventional frames. Historically, this “modality gap” has broken standard image matching pipelines, which assume visually similar inputs. Most existing approaches either design bespoke models for specific modality pairs (e.g., RGB-to-infrared only) or train on small, real-world multimodal datasets that severely limit generalization.

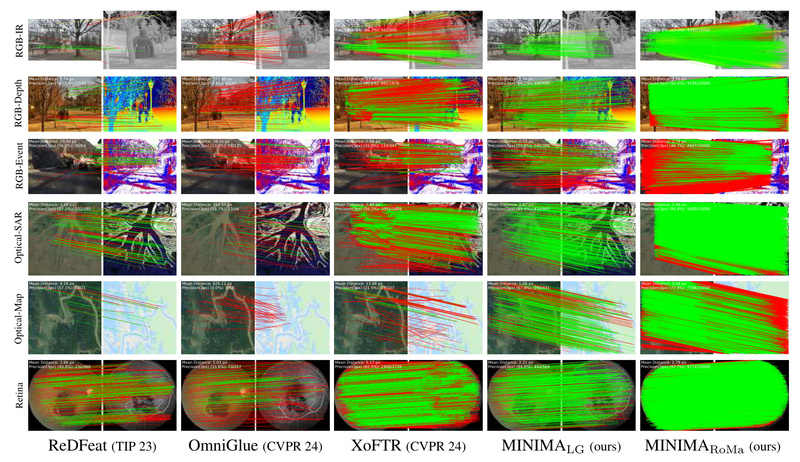

Enter MINIMA (Modality Invariant Image Matching): a unified framework that enables robust, zero-shot image matching across diverse imaging modalities—without requiring modality-specific architectures or hand-curated paired data. Instead of chasing architectural novelty, MINIMA tackles the root cause of poor cross-modality performance: data scarcity. By leveraging a generative data engine to synthesize a massive, labeled multimodal dataset called MD-syn, MINIMA trains a single matching pipeline that generalizes across 19 different modality combinations out of the box. For engineers and researchers building multimodal perception systems, this means one model, one training run, and reliable performance across sensors you may not have even seen during training.

Why Cross-Modality Matching Matters—and Why It’s Hard

Image matching is foundational to tasks like structure-from-motion, visual localization, and sensor fusion. But when inputs come from different physical sensing mechanisms—e.g., thermal vs. optical, or event-based vs. frame-based—their pixel-level statistics diverge dramatically. Traditional deep learning matchers (like SuperPoint or LoFTR) extract features assuming photometric consistency, causing them to fail catastrophically under modality shifts.

Prior solutions often fall into two camps:

- Modality-specific models: Require collecting and labeling paired data for each new sensor combination—a costly, non-scalable effort.

- Feature-level alignment: Attempt to force features into a shared space using complex adaptation modules, but these rarely generalize beyond narrow benchmarks.

MINIMA sidesteps both pitfalls by recognizing that the bottleneck isn’t the matcher—it’s the training data. If you could scale up diverse, accurately labeled multimodal pairs, even standard matchers would learn robust invariance.

How MINIMA Works: Data Scaling Over Architectural Complexity

At its core, MINIMA is deliberately simple in design but revolutionary in data strategy:

- Start with abundant RGB-only matching data: Datasets like MegaDepth provide millions of image pairs with precise geometric correspondences (e.g., via SfM).

- Use generative models to “translate” RGB into other modalities: Leveraging pre-trained vision models (e.g., Depth-Anything-V2 for depth, PaintTransformer for stylized outputs), MINIMA synthesizes matched pairs in modalities like infrared, depth, event, normal maps, sketches, and painted renditions—while preserving the original geometric labels.

- Build MD-syn: A comprehensive synthetic dataset spanning 6+ modalities, rich scene diversity, and pixel-perfect matching annotations inherited from the source RGB data.

- Train any off-the-shelf matcher on random modality pairs from MD-syn: No special layers or loss functions needed. The model learns modality-agnostic representations purely through exposure to varied inputs.

This data-centric approach yields surprising results: MINIMA-trained models outperform specialized baselines and even modality-tuned methods on real-world benchmarks, despite never seeing real multimodal data during training.

Real-World Applications Where MINIMA Delivers Immediate Value

MINIMA isn’t just a lab curiosity—it solves concrete problems in multimodal deployment:

- Autonomous Driving: Fuse RGB cameras with event cameras (for high-speed, low-latency scenes) or thermal sensors (for night/low-visibility conditions) using a single matching backbone.

- Remote Sensing: Align satellite imagery with artist-drawn sketches (e.g., historical maps) or synthetic aperture radar (SAR) outputs without retraining.

- Robotics & AR/VR: Match live RGB feeds with depth maps or surface normals from commodity sensors (e.g., Kinect, LiDAR) for robust 3D reconstruction.

- Industrial & Medical Inspection: Correlate visible-light photos with infrared thermography or X-ray images for defect detection, even when training data for that exact pairing is unavailable.

Critically, MINIMA operates in zero-shot mode: if your use case involves any of its supported modalities (infrared, depth, event, normal, sketch, paint), the pre-trained models likely work immediately—no fine-tuning required.

Getting Started: From Installation to Inference in Minutes

MINIMA is engineered for rapid adoption by technical teams:

-

Environment Setup:

git clone https://github.com/LSXI7/MINIMA.git cd MINIMA conda env create -f environment.yaml conda activate minima

-

Download Pre-trained Models:

Weights forminima_lightglue,minima_loftr,minima_roma, and other backbones are provided. Simply run:bash weights/download.sh

-

Run a Demo:

Test cross-modality matching (e.g., RGB vs. depth) with:python demo.py --method sp_lg --fig1 demo/vis_test.png --fig2 demo/depth_test.png --save_dir ./demo

-

Evaluate on Real Benchmarks:

Scripts are included for standard datasets like METU-VisTIR (RGB-infrared), DIODE (RGB-depth), and DSEC (RGB-event). For example:python test_relative_pose_infrared.py --method sp_lg

For large-scale synthetic evaluation, the full MD-syn dataset (based on MegaDepth) can be downloaded via OpenXLab or Hugging Face.

Limitations and Strategic Considerations

While MINIMA offers unprecedented flexibility, adopters should note:

- Synthetic Training Data: All models are trained on MD-syn. While experiments show strong zero-shot transfer to real data, performance on extremely domain-specific modalities (e.g., hyperspectral imaging) may require validation.

- Licensing Constraints: The

minima_lightgluevariant uses SuperPoint as a feature extractor, which is restricted to academic or non-commercial use under its original license. Commercial users should opt for alternatives likeminima_loftror verify compliance. - Modality Coverage: Currently supports 6 synthetic modalities. The team notes “More Modalities” as a future TODO—check the repository for updates if your sensor type isn’t listed.

Always evaluate MINIMA on a representative slice of your data before full integration.

Summary

MINIMA redefines cross-modality image matching by proving that data scale and diversity trump architectural complexity. By generating a massive, labeled multimodal dataset (MD-syn) and training standard matchers on it, MINIMA delivers a single, unified model that works across 19+ sensor combinations—zero-shot, out of the box. For teams building multimodal systems in robotics, autonomy, remote sensing, or industrial AI, it eliminates the need for custom pipelines per sensor pair, slashing development time and boosting robustness. With open-source code, pre-trained weights, and clear evaluation protocols, MINIMA is ready for real-world adoption today.