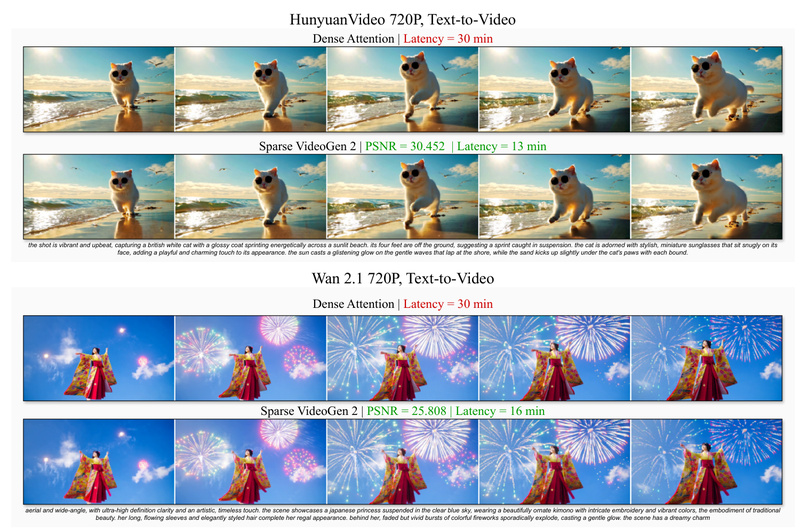

Video generation using diffusion transformers (DiTs) has reached remarkable visual fidelity—but at a steep computational cost. The quadratic complexity of full attention across space and time makes real-world deployment slow and expensive, especially for high-resolution outputs like 720p or beyond. Enter Sparse VideoGen2 (SVG2): a training-free, plug-and-play acceleration framework that slashes inference latency by up to 2.3× while preserving pixel-level quality (PSNR up to 30) on leading models like HunyuanVideo, Wan 2.1, and Cosmos.

Unlike methods that require model retraining, architectural changes, or quantization, SVG2 works out of the box with existing video diffusion pipelines. It rethinks how sparse attention is applied—not by position, but by semantic meaning—and reorganizes computation to align with GPU memory access patterns. The result? Faster video generation that doesn’t compromise on coherence, detail, or motion realism.

How SVG2 Solves Two Critical Bottlenecks in Video Diffusion

Traditional sparse attention methods in video generation suffer from two interrelated flaws that hurt either speed or quality:

-

Inaccurate critical token identification: Most approaches assume tokens close in space or time are equally important. But semantically, a moving car in one frame may matter far more than static background pixels—even if they’re neighbors. Clustering by position leads to blurry or incoherent outputs because key visual signals get averaged with irrelevant ones.

-

Wasted GPU computation: Even when critical tokens are identified, they’re often scattered across the attention map. GPUs excel at processing contiguous blocks of data, not sparse, interleaved patterns. Padding or irregular memory access cripples throughput, negating theoretical speedups.

SVG2 tackles both issues with a single elegant mechanism: semantic-aware permutation.

Using k-means clustering on token embeddings, SVG2 groups tokens by semantic similarity—not location. Then, it reorders these tokens so that all “important” ones are packed together in memory. This achieves two things simultaneously:

- Higher identification accuracy: Semantically coherent clusters yield more precise attention masks.

- GPU-friendly layout: Contiguous critical tokens eliminate padding and maximize memory bandwidth utilization.

The framework is training-free: it operates at inference time using the model’s own intermediate features, requiring zero fine-tuning or weight modifications.

Real-World Performance Gains You Can Trust

SVG2 delivers measurable, reproducible speedups without quality degradation:

- 2.30× faster on HunyuanVideo (PSNR ≈ 30)

- 1.89× faster on Wan 2.1 (PSNR ≈ 26)

Under the hood, SVG2 integrates:

- Top-p dynamic budget control: Dynamically allocates computation based on token importance, avoiding fixed sparsity ratios.

- Flash k-Means: A batched, Triton-accelerated k-means implementation that’s >10× faster than naive clustering—critical for low-overhead runtime token grouping.

- Custom CUDA/Triton kernels: Optimized implementations for RMSNorm, LayerNorm, and RoPE that achieve up to 20× higher memory bandwidth than Diffusers baselines.

These aren’t synthetic benchmarks—they’re end-to-end speedups on full 720p text-to-video and image-to-video pipelines.

Ideal Use Cases for Practitioners

SVG2 shines in scenarios where speed, quality, and ease of integration are non-negotiable:

- Production video generation services: Reduce cloud inference costs and latency for T2V/I2V APIs without retraining models your team already uses.

- Research labs on tight GPU budgets: Run more experiments per day on HunyuanVideo or Wan 2.1 without downgrading resolution or sequence length.

- Model developers seeking plug-and-play acceleration: Integrate SVG2 in minutes via pre-built scripts—no need to modify model code or training infrastructure.

Currently, SVG2 officially supports:

- Wan 2.1 (Text-to-Video and Image-to-Video)

- HunyuanVideo (Text-to-Video)

- Cosmos

Support for additional DiT-based video models is likely as long as they use 3D full attention.

Getting Started: Integration in Minutes

Adopting SVG2 requires no model changes—just a few environment setup steps and updated inference scripts:

GIT_LFS_SKIP_SMUDGE=1 git clone https://github.com/svg-project/Sparse-VideoGen.git cd Sparse-VideoGen # Create environment (Python 3.12 recommended) conda create -n SVG python=3.12.9 conda activate SVG # Install dependencies pip install uv uv pip install -e . pip install flash-attn --no-build-isolation # Build optimized kernels (includes Flash k-Means) git submodule update --init --recursive cd svg/kernels bash setup.sh

Then, run SVG2-accelerated inference with one command:

# Wan 2.1 Text-to-Video (720p) bash scripts/wan/wan_t2v_720p_sap.sh # HunyuanVideo Text-to-Video (720p) bash scripts/hyvideo/hyvideo_t2v_720p_sap.sh

The _sap.sh suffix denotes Semantic-Aware Permutation—the core of SVG2. Swap these in for your existing pipelines, and you’re done.

Limitations and Compatibility Notes

While SVG2 is broadly applicable, consider these boundaries:

- Model architecture: Only accelerates models using 3D full attention in DiT backbones (e.g., HunyuanVideo, Wan 2.1). It won’t work with recurrent or latent cache-based architectures.

- System requirements: Requires CUDA 12.4/12.8 and PyTorch 2.5.1/2.6.0. Older setups may need upgrades.

- FP8 attention: Not yet supported (planned for future release).

- Kernel compilation: Custom Triton/CUDA kernels must be built during setup—this adds ~5–10 minutes but is a one-time cost.

Performance gains scale with sequence length and resolution: longer videos and higher resolutions see the greatest speedups due to SVG2’s efficient memory layout.

Summary

Sparse VideoGen2 redefines what’s possible in training-free video acceleration. By replacing positional sparsity with semantic-aware token permutation, it solves the long-standing trade-off between speed and visual quality. With no retraining, minimal integration effort, and proven speedups up to 2.3×, SVG2 is a pragmatic choice for engineers and researchers deploying state-of-the-art video diffusion models today.

If you’re already using HunyuanVideo, Wan 2.1, or Cosmos in production or research, SVG2 offers immediate latency reduction—without compromising the visual fidelity your users expect.