Developing effective, task-oriented agents powered by large language models (LLMs) has become a priority for researchers and developers alike. However, many existing agent frameworks are overly complex, tightly coupled, or hard to learn, which makes rapid experimentation, architecture comparison, and multi-agent orchestration unnecessarily difficult.

AgentLite, an open-source library from Salesforce AI Research, directly addresses these pain points. Designed with simplicity and research agility in mind, it offers a lightweight, modular foundation for building both single-agent and multi-agent LLM systems focused on completing concrete tasks, whether that means answering complex questions, coordinating specialized tools, or benchmarking reasoning strategies.

Importantly, AgentLite isn’t another heavy platform. It’s a minimal yet expressive toolkit that lowers the barrier to entry while preserving full control over agent logic, reasoning flow, and collaborative behavior—perfect for technical evaluators who need to prototype, validate, and iterate quickly.

Why AgentLite? Solving Real Pain Points in LLM Agent Development

Many developers exploring LLM agents quickly hit roadblocks:

- Over-engineered frameworks that bury simple agent logic under layers of abstractions.

- Difficulty testing new reasoning strategies (like ReAct or reflection) because the codebase doesn’t cleanly separate reasoning from execution.

- Challenges in orchestrating multi-agent systems, especially when trying to compare different team structures or delegation mechanisms.

AgentLite cuts through this complexity. By providing a minimal core—centered around actions, agents, and task packages—it lets you define what an agent does and how agents collaborate with just a few lines of code. There’s no hidden orchestration engine or opaque middleware. You see exactly how control flows between agents, making debugging, modification, and extension straightforward.
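To make the "actions, agents, and task packages" idea concrete, here is a minimal, library-free sketch of that control flow. It does not use AgentLite itself: `Task`, `ToyAgent`, `lookup`, and `scripted_policy` are hypothetical stand-ins invented for illustration, and a scripted function takes the place of the LLM call that would normally choose the next action.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# NOTE: every name in this block is a hypothetical stand-in, not AgentLite's API.

@dataclass
class Task:
    """Stand-in for a task package: an instruction plus a slot for the answer."""
    instruction: str
    answer: str = ""


@dataclass
class ToyAgent:
    """Stand-in agent: a policy picks the next action and the agent executes it."""
    name: str
    actions: Dict[str, Callable[[str], str]]
    # policy(instruction, history) -> (action_name, argument); replaces the LLM call
    policy: Callable[[str, List[Tuple[str, str, str]]], Tuple[str, str]]
    max_steps: int = 5

    def __call__(self, task: Task) -> str:
        history: List[Tuple[str, str, str]] = []
        for _ in range(self.max_steps):
            action_name, arg = self.policy(task.instruction, history)
            if action_name == "Finish":
                # The special "Finish" action ends the loop with a final answer.
                task.answer = arg
                return arg
            observation = self.actions[action_name](arg)
            history.append((action_name, arg, observation))
        return "no answer within step budget"


def lookup(query: str) -> str:
    """Toy tool: a fixed fact table instead of a real search backend."""
    facts = {"Microsoft": "Microsoft was founded in 1975."}
    return facts.get(query, "not found")


def scripted_policy(instruction, history):
    """Look something up first, then finish with the last observation."""
    if not history:
        return ("Lookup", "Microsoft")
    return ("Finish", history[-1][2])


agent = ToyAgent(name="toy", actions={"Lookup": lookup}, policy=scripted_policy)
result = agent(Task(instruction="When was Microsoft founded?"))
print(result)
```

Because the whole loop is visible in one method, swapping the policy or adding an action is a local change, which is the kind of transparency the paragraph above describes.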

This transparency is especially valuable for technical decision-makers who need to evaluate whether a specific agent architecture or reasoning method actually improves performance on their use case—without committing to a monolithic framework.

Core Strengths: What Makes AgentLite Stand Out

AgentLite’s design philosophy centers on three pillars that directly support rapid, reliable agent development:

1. Lightweight Codebase, Maximum Flexibility

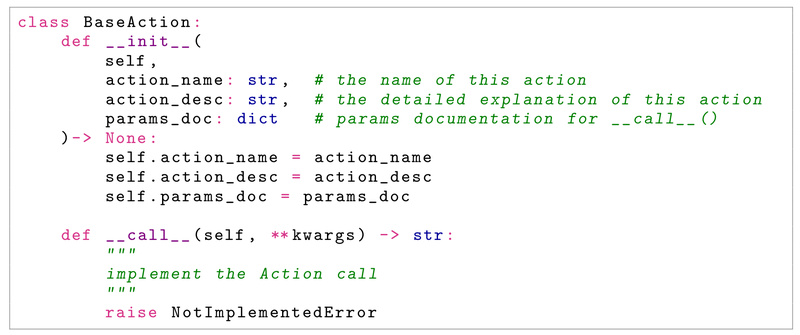

The entire library is built for easy modification. Adding a new agent type, custom reasoning loop, or communication protocol doesn’t require forking or deep framework knowledge. Everything—from actions to manager logic—is implemented as plain Python classes that inherit from clear base interfaces.

2. Task-Oriented by Design

Unlike general-purpose chat agents, AgentLite focuses on task completion. Each agent receives a structured TaskPackage with a clear instruction, and its job is to produce a final answer. This task-centric model makes evaluation consistent and success measurable—critical for research and product prototyping.
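Since every task reduces to "instruction in, final answer out", scoring can be a few lines. The sketch below is one possible way to do it, not AgentLite's benchmark code: `normalize`, `exact_match_accuracy`, and `stub_agent` are hypothetical helpers, and the tiny dataset is made up for illustration.

```python
import string
from typing import Callable, Iterable, Tuple


def normalize(text: str) -> str:
    """Lowercase and trim surrounding whitespace and punctuation."""
    return text.strip().lower().strip(string.punctuation + " ")


def exact_match_accuracy(
    agent: Callable[[str], str],
    dataset: Iterable[Tuple[str, str]],
) -> float:
    """Fraction of (instruction, gold answer) pairs the agent answers exactly."""
    pairs = list(dataset)
    correct = sum(
        normalize(agent(instruction)) == normalize(gold)
        for instruction, gold in pairs
    )
    return correct / len(pairs) if pairs else 0.0


# Hypothetical stub standing in for a real agent call.
canned = {"2+2": "4", "capital of France": " paris. ", "3*3": "6"}

def stub_agent(instruction: str) -> str:
    return canned[instruction]


dataset = [("2+2", "4"), ("capital of France", "Paris"), ("3*3", "9")]
accuracy = exact_match_accuracy(stub_agent, dataset)
print(f"exact match: {accuracy:.2f}")
```

Any callable that maps an instruction string to an answer string can be passed as `agent`, so the same function can score different agent variants on identical tasks.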

3. Research-First Architecture

AgentLite was created to accelerate academic and industrial research into LLM agent systems. It supports clean comparisons between single-agent vs. multi-agent setups, different reasoning strategies, and varied tool integrations. The included benchmarks (HotpotQA, WebShop, etc.) provide standardized evaluation grounds, while the simple API lets you plug in your own tasks or metrics.

When Should You Use AgentLite? Ideal Use Cases and Scenarios

AgentLite excels in scenarios where clarity, speed, and control matter more than out-of-the-box production scaling. Consider it if you’re:

- Prototyping a task-specific agent, such as a Wikipedia lookup bot or a math problem solver.

- Experimenting with multi-agent coordination, like a manager agent that delegates subtasks to specialized workers (e.g., one for web search, another for code execution).

- Benchmarking agent architectures on standardized datasets like HotpotQA or Tool-Operation tasks.

- Teaching or demonstrating LLM agent concepts, thanks to its readable, minimal code examples.

It’s particularly well-suited for academic labs, R&D teams, and engineers building proof-of-concept systems who need to validate ideas before investing in heavier infrastructure.
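The manager-and-workers scenario above can be pictured with a small, library-free sketch. This is not AgentLite's manager implementation: `Worker`, `Manager`, and `keyword_router` are hypothetical names, and a keyword rule stands in for the LLM's decision about which worker should receive a subtask.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# NOTE: hypothetical stand-ins for illustration; not AgentLite classes.

@dataclass
class Worker:
    name: str
    role: str
    run: Callable[[str], str]


@dataclass
class Manager:
    workers: Dict[str, Worker]
    router: Callable[[str], str]  # stand-in for the LLM's delegation decision

    def __call__(self, subtasks: List[str]) -> List[str]:
        results = []
        for subtask in subtasks:
            worker = self.workers[self.router(subtask)]
            results.append(f"{worker.name}: {worker.run(subtask)}")
        return results


def keyword_router(subtask: str) -> str:
    """Send 'compute ...' subtasks to the code worker, everything else to search."""
    return "code_worker" if subtask.startswith("compute") else "search_worker"


def fake_search(query: str) -> str:
    return f"searched '{query}'"


def sum_integers(subtask: str) -> str:
    """Toy 'code execution': add up the integers mentioned in the subtask."""
    return str(sum(int(tok) for tok in subtask.split() if tok.isdigit()))


manager = Manager(
    workers={
        "search_worker": Worker("search_worker", "web search", fake_search),
        "code_worker": Worker("code_worker", "code execution", sum_integers),
    },
    router=keyword_router,
)
outputs = manager(["find founding year of Microsoft", "compute 2 3 4"])
print(outputs)
```

Comparing team structures then amounts to changing the `workers` dictionary or the `router` while keeping the subtasks fixed.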

Getting Started in Minutes: A Simple, Hands-On Workflow

Starting with AgentLite takes just a few steps:

- Install from source:

      git clone https://github.com/SalesforceAIResearch/AgentLite.git
      cd AgentLite
      pip install -e .

- Set your LLM API key (e.g., OpenAI):

      export OPENAI_API_KEY=your_key_here

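- Define an action (e.g., a Wikipedia search tool):

      from agentlite.actions.BaseAction import BaseAction
      from langchain_community.tools import WikipediaQueryRun
      from langchain_community.utilities import WikipediaAPIWrapper


      class WikipediaSearch(BaseAction):
          """Action that lets an agent query Wikipedia."""

          def __init__(self):
              super().__init__(
                  action_name="Wikipedia_Search",
                  action_desc="Search Wikipedia for information.",
                  params_doc={"query": "Search query string."},
              )
              # WikipediaQueryRun needs an explicit API wrapper.
              self.search = WikipediaQueryRun(api_wrapper=WikipediaAPIWrapper())

          def __call__(self, query):
              # Return the search result text as the action's observation.
              return self.search.run(query)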

- Create an agent with that action:

      from agentlite.agents import BaseAgent
      from agentlite.llm.agent_llms import get_llm_backend
      from agentlite.llm.LLMConfig import LLMConfig

      # Configure the LLM backend that drives the agent's reasoning.
      llm = get_llm_backend(LLMConfig({"llm_name": "gpt-3.5-turbo"}))

      agent = BaseAgent(
          name="search_agent",
          role="Search Wikipedia to answer questions.",
          llm=llm,
          actions=[WikipediaSearch()],
      )

- Run a task:

      from agentlite.commons import TaskPackage

      # Wrap the instruction in a TaskPackage and hand it to the agent.
      task = TaskPackage(instruction="When was Microsoft founded?")
      response = agent(task)
      print(response)

For interactive demos, AgentLite also includes optional Streamlit UI support—simply install the UI dependencies and run streamlit run app/Homepage.py.

What to Keep in Mind: Limitations and Practical Considerations

While AgentLite shines in research and prototyping, it’s important to understand its current scope:

- LLM dependency: It relies on external LLM APIs (like OpenAI) or self-hosted models via compatible backends. You must manage API keys and rate limits yourself.

- Tool integration: Many example actions use LangChain tools (e.g., Wikipedia, DuckDuckGo), so leveraging those requires LangChain as a dependency.

- Task-focused, not conversational: AgentLite is optimized for goal-driven tasks, not open-ended chat or long-term memory management.

- Not a production deployment suite: It provides no built-in scaling, monitoring, or persistence—these must be added separately for production use.

These aren’t flaws, but intentional design choices that keep the library lean and focused on its core mission: enabling fast, transparent agent experimentation.
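On the rate-limit point in particular, one common way to handle it yourself is to wrap LLM calls in retries with exponential backoff. The sketch below is generic and not part of AgentLite: `RateLimitError` is a hypothetical stand-in for whatever exception your provider's client raises, and `call_with_backoff` and its parameters are illustrative names.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical stand-in for a provider's rate-limit exception."""


def call_with_backoff(
    fn: Callable[[], T],
    max_retries: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on RateLimitError with exponentially growing delays."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_retries:
                raise  # retries exhausted; surface the error to the caller
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Add up to 10% jitter so parallel callers do not retry in lockstep.
            sleep(delay + random.uniform(0, delay * 0.1))
    raise RuntimeError("unreachable")


# Demo: a function that is rate-limited twice before succeeding.
calls = {"n": 0}

def flaky() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise RateLimitError("slow down")
    return "ok"


delays = []  # record the delays instead of actually sleeping
result = call_with_backoff(flaky, sleep=delays.append)
print(result, len(delays))
```

In real use you would pass something like `lambda: agent(task)` as `fn`, catch your client's actual exception type, and leave `sleep` at its default.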

Summary

AgentLite removes the friction from building and evaluating task-oriented LLM agents. Its lightweight structure, clear abstractions, and research-friendly design make it an ideal choice for developers and researchers who need to quickly test agent architectures, reasoning strategies, or multi-agent collaboration patterns—without wrestling with bloated frameworks. If your goal is to innovate, validate, and iterate on LLM agent systems with minimal overhead, AgentLite offers a refreshingly simple path forward.