If you’re building or maintaining AI-powered coding assistants, you’ve likely faced a frustrating trade-off: fine-tune a model for one specific task—like code completion or bug fixing—and you get decent performance, but only for that narrow use case. Switch to another task, and you’re back to square one: retraining, redeploying, and managing yet another model version. This fragmentation wastes compute, inflates operational complexity, and ignores the natural synergy between related coding tasks.

Enter MFTCoder—an open-source, high-performance multi-task fine-tuning framework purpose-built for code-focused large language models (Code LLMs). Rather than training separate models for separate tasks, MFTCoder enables you to fine-tune a single model across multiple coding tasks simultaneously, delivering higher accuracy, faster training, and simpler deployment. Backed by rigorous research (published at KDD 2024 and EMNLP 2024) and proven in real-world benchmarks, MFTCoder isn’t just theoretical—it’s already powering state-of-the-art open models that outperform even GPT-4 on standard code evaluation suites.

Why Multi-Task Fine-Tuning for Code Models?

Traditional supervised fine-tuning (SFT) tailors a base LLM to a single dataset or task. While effective in isolation, this approach becomes unsustainable when you need your model to handle diverse coding scenarios—code generation, test prediction, documentation, refactoring, and more. Each new requirement demands a new fine-tuning run, multiplying training costs and complicating model management.

MFTCoder solves this by embracing multi-task learning (MTL)—the idea that related tasks can reinforce each other during training. Code-related tasks share underlying syntactic and semantic structures; learning to generate functions helps with writing tests, and understanding error messages improves debugging. MFTCoder leverages this interconnectedness, allowing gradients from multiple tasks to jointly shape model behavior, leading to better generalization and higher overall performance.

Critically, MFTCoder doesn’t just combine tasks—it balances them. Without careful orchestration, MTL suffers from data imbalance, mismatched convergence speeds, and dominance by easier tasks. MFTCoder addresses these issues head-on with techniques like CoBa (Convergence Balancer), introduced in its EMNLP 2024 paper, which dynamically adjusts task contributions to ensure stable, balanced training across heterogeneous datasets.

Key Capabilities That Set MFTCoder Apart

Unified Framework for Diverse Training Stacks

MFTCoder ships with two ready-to-use codebases:

- MFTCoder-accelerate: Built on Hugging Face

Accelerate, with optional integration of DeepSpeed ZeRO-3 or FSDP for large-scale distributed training. - MFTCoder-atorch: Leverages the ATorch framework for high-throughput distributed training.

This flexibility lets you plug MFTCoder into existing workflows—whether you’re using standard PyTorch tooling or specialized infrastructure.

Efficient Fine-Tuning for Real-World Constraints

Resource efficiency is baked into MFTCoder’s design:

- Supports LoRA, QLoRA, and full-parameter fine-tuning, enabling training even on consumer-grade GPUs (via 4-bit quantization).

- Includes offline tokenization to accelerate data preprocessing.

- Enables weight merging of LoRA adapters with base models, simplifying inference pipelines.

Broad Model Compatibility

MFTCoder integrates seamlessly with leading open-source Code LLMs, including:

- CodeLlama (and CodeLlama-Python)

- Qwen and Qwen2 (including MoE variants)

- DeepSeek-Coder

- StarCoder and StarCoder2

- Mixtral (8x7B MoE)

- ChatGLM3, CodeGeeX2, Gemma, and more

This “multi-model” support means you’re not locked into one architecture—you can apply MFTCoder’s advantages to the base model that best fits your needs.

High-Quality, Open Datasets

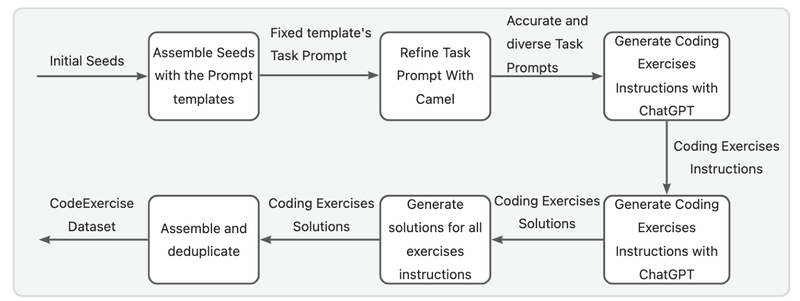

To jump-start your multi-task training, MFTCoder releases two curated instruction datasets:

- Evol-instruction-66k: A high-quality subset of evolved instructions, filtered to remove redundancy and HumanEval-like samples.

- CodeExercise-Python-27k: Focused on Python programming exercises, ideal for educational or practice-oriented applications.

These datasets are designed to complement each other and cover a broad spectrum of coding tasks.

Proven Performance on Industry Benchmarks

MFTCoder isn’t just fast—it’s accurate. Models fine-tuned with MFTCoder consistently rank at the top of public leaderboards:

| Model | HumanEval Pass@1 |

|---|---|

| CodeFuse-DeepSeek-33B | 78.7% |

| CodeFuse-CodeLlama-34B | 74.4% |

| CodeFuse-StarCoder2-15B | 73.2% |

| GPT-4 (zero-shot) | 67.0% |

Notably, CodeFuse-CodeLlama-34B surpasses GPT-4 on HumanEval—a standardized benchmark for functional correctness in Python code generation. Even the 4-bit quantized version retains 73.8% accuracy, proving that efficiency doesn’t have to sacrifice performance.

All these models are openly available on Hugging Face and ModelScope, so you can test, evaluate, or deploy them immediately—no proprietary APIs or black-box surprises.

Ideal Use Cases for Engineering and Research Teams

MFTCoder shines in scenarios where you need a versatile, high-quality coding assistant without the overhead of maintaining multiple models:

- Internal developer tools: Build a single model that handles code completion, unit test generation, and documentation—all from one fine-tuned checkpoint.

- Domain-specific code generation: Fine-tune on multi-task datasets that include your in-house programming patterns, error logs, or style guides.

- Resource-constrained environments: Use QLoRA + 4-bit quantization to fine-tune 30B+ models on a single GPU, then deploy the merged weights for low-latency inference.

- Research on code LLMs: Experiment with multi-task learning dynamics, convergence balancing, or cross-task generalization using a battle-tested framework.

By consolidating tasks into one training pipeline, MFTCoder reduces not just compute costs but also the cognitive load of managing a model zoo.

Getting Started Without the Headache

Adopting MFTCoder is designed to be straightforward:

- Prerequisites: CUDA ≥11.4, PyTorch ≥2.1, and (recommended) FlashAttention ≥2.3.

- Environment setup: Run the included

init_env.shscript to install dependencies. - Choose your stack: Opt for

MFTCoder-accelerateif you’re familiar with Hugging Face + DeepSpeed/FSDP; tryMFTCoder-atorchfor ATorch-based workflows. - Start training: Use provided scripts to launch multi-task fine-tuning with LoRA/QLoRA or full-parameter updates.

The framework includes detailed examples for major models and fine-tuning modes, so you can go from clone to training in minutes.

Limitations to Consider

While powerful, MFTCoder isn’t a magic bullet:

- GPU dependency: Even with QLoRA, you’ll need at least one modern GPU (e.g., A10, A100, or RTX 4090) for reasonable training times.

- Dataset quality matters: Multi-task gains depend on the diversity and quality of your training data. MFTCoder provides starter datasets, but domain-specific tasks may require additional curation.

- Model coverage isn’t universal: While support is broad, newer or highly customized architectures may need adapter integration.

That said, the project is actively maintained (v0.5 released in October 2024), with new models and alignment methods (like DPO, RPO, ORPO) added regularly.

Summary

MFTCoder redefines how we fine-tune code LLMs—not as isolated, single-purpose tools, but as unified, multi-skilled assistants that learn from the full breadth of programming tasks. By solving the fragmentation of traditional fine-tuning through intelligent multi-task learning, efficient resource usage, and open collaboration, it delivers higher accuracy, faster iteration, and simpler deployment—all while remaining fully open source.

For technical leads, ML engineers, and researchers tired of juggling dozens of narrowly tuned models, MFTCoder offers a compelling path toward a leaner, smarter code AI stack. With production-ready models already outperforming GPT-4 and a framework that scales from laptops to clusters, it’s a solution worth adopting today.