The Segment Anything Model (SAM) revolutionized 2D image segmentation by enabling zero-shot, promptable mask generation from RGB images. However, SAM’s reliance on texture and color often leads to over-segmentation—especially in visually homogeneous scenes where objects share similar appearances but differ in shape or spatial structure.

Enter SAD (Segment Any RGBD): a novel extension that injects geometric awareness into SAM by leveraging depth data. Instead of treating segmentation as a purely visual (RGB) task, SAD renders depth maps into pseudo-RGB images and feeds them into SAM, allowing the model to focus on object geometry rather than surface texture. This simple yet powerful shift significantly reduces fragmentation in segmentation outputs and improves object coherence—particularly in indoor environments where shape cues are more reliable than color.

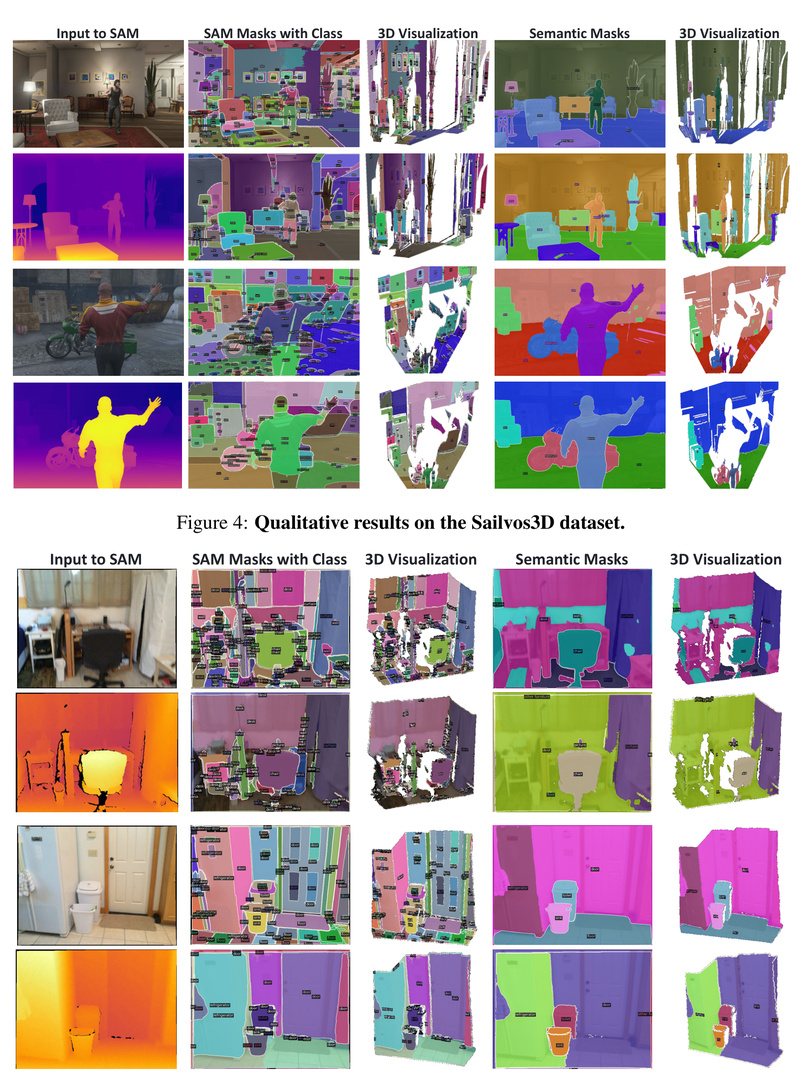

Built on top of SAM and integrated with OVSeg for open-vocabulary semantic labeling, SAD delivers not only improved 2D masks but also full 3D panoptic segmentation, making it a compelling choice for robotics, scene understanding, and any application powered by RGBD sensors.

How SAD Works: Geometry Over Texture

At its core, SAD exploits a key insight: humans can recognize objects in depth maps even without color or texture. To mimic this, SAD converts a raw depth map of size [H, W] into a rendered RGB-like image using a colormap—transforming geometric structure into a visual format SAM can process.

This rendered depth image is then passed through SAM, which generates object proposals based purely on shape boundaries. Because depth suppresses irrelevant texture variations (e.g., patterns on a tablecloth or lighting changes), SAM produces fewer, more spatially coherent masks.

Crucially, SAD doesn’t discard RGB entirely. It supports dual-mode input: users can choose to segment either the original RGB image or the rendered depth image—or even compare both. This flexibility lets practitioners select the best modality for their specific scenario.

After mask generation, SAD applies OVSeg, a zero-shot semantic segmentation model, to assign open-vocabulary class labels (e.g., “chair,” “lamp,” “door”) to each mask—enabling semantic understanding without task-specific training data. Finally, by combining mask geometry with depth values, SAD lifts results into 3D space for panoptic scene reconstruction.

Key Strengths That Solve Real Problems

SAD addresses several long-standing challenges in segmentation:

- Reduces Over-Segmentation: In RGB-only SAM, a single object like a table may be split into multiple fragments due to texture changes or shadows. SAD’s depth-based approach treats it as one unified entity—dramatically improving mask quality.

- Enables Geometry-Centric Understanding: When color fails (e.g., a white mug on a white countertop), geometry succeeds. SAD excels in these ambiguous cases where RGB-based models falter.

- Zero-Shot Semantic Segmentation: Thanks to OVSeg integration, SAD assigns meaningful class names without retraining—ideal for dynamic or open-world environments.

- 3D Panoptic Output: Beyond 2D masks, SAD reconstructs full 3D object instances with semantics, enabling downstream tasks like navigation or manipulation.

Ideal Use Cases

SAD is particularly valuable in domains where RGBD data is available and geometric structure matters more than appearance:

- Indoor Scene Understanding: Tested on ScanNetV2 and SAIL-VOS 3D, SAD handles cluttered rooms, furniture, and architectural elements with robustness.

- Robotics & Autonomous Systems: Robots equipped with depth cameras (e.g., Kinect, RealSense) can use SAD to identify object boundaries for grasping or path planning—even under poor lighting.

- 3D Reconstruction & Digital Twins: Accurate, geometry-aware segmentation accelerates the creation of semantic 3D models for AR/VR, simulation, or facility management.

- Assistive Technologies: For visually impaired navigation aids, understanding spatial layout via depth is more reliable than relying on color.

Getting Started: From Demo to Deployment

SAD is designed for practical adoption:

-

Run a Demo Instantly: A Hugging Face demo is available for quick testing. For local use, the repository includes a user-friendly UI (

ui.py) and sample inputs. -

Prepare Inputs: You’ll need aligned RGB and depth images. The depth map is automatically rendered using a colormap—no manual preprocessing required.

-

Run Inference: With pre-downloaded SAM (e.g.,

sam_vit_h_4b8939.pth) and OVSeg (ovseg_swinbase_vitL14_ft_mpt.pth) checkpoints placed in the repo, execute:python ui.py

Then click an example and hit “Send.” Results—including class-labeled masks and 3D visualizations—appear in 2–3 minutes.

-

Scale to Your Data: For custom datasets, simply provide your own RGBD pairs and follow the data preparation guidelines for ScanNet or SAIL-VOS 3D formats.

Limitations and Practical Considerations

While powerful, SAD isn’t a universal solution:

- Geometry Ambiguity: Objects with similar depth profiles (e.g., two adjacent chairs) may be merged into one mask. In such cases, RGB input remains useful.

- Requires RGBD Data: SAD cannot operate on RGB-only images. Ensure your hardware includes a depth sensor.

- Runtime: Inference takes 2–3 minutes per scene on standard hardware—acceptable for offline analysis but not real-time applications without optimization.

- Licensing: SAD inherits non-commercial licenses from OVSeg (CC-BY-NC 4.0) and SAM (Apache 2.0). Verify compliance before commercial deployment.

Summary

SAD bridges a critical gap in foundation model-based segmentation by reintroducing geometry into the loop. By rendering depth maps for SAM, it tames over-segmentation, enhances object coherence, and unlocks 3D semantic understanding—all without retraining. For practitioners working with RGBD data in robotics, indoor mapping, or 3D vision, SAD offers a practical, plug-and-play upgrade over standard SAM—turning depth from a passive channel into an active segmentation cue.

If your application struggles with fragmented masks or ambiguous textures, SAD might be the geometric lens your pipeline has been missing.