Large language models (LLMs) have made remarkable strides in generating coherent, context-aware responses. Yet, when it comes to complex, multi-step reasoning tasks—especially those requiring up-to-date information, external validation, or deep research—they often fall short. Hallucinations, lack of real-time data, and difficulty maintaining logical coherence over long inference chains remain persistent challenges.

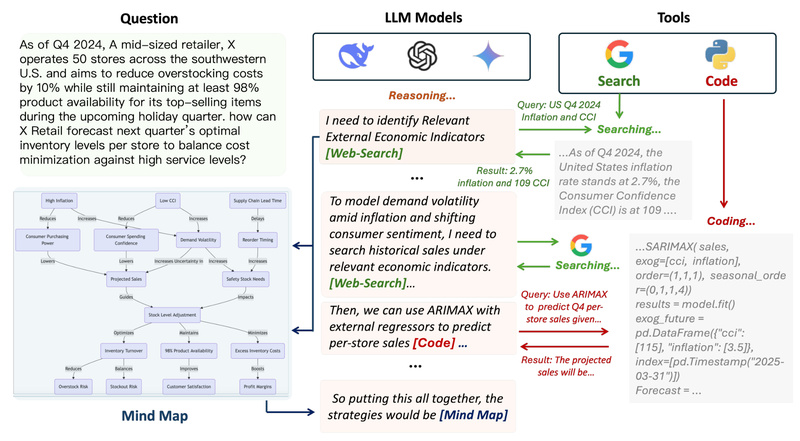

Enter Agentic Reasoning: an open-source framework designed to bridge this gap by integrating external agentic tools directly into the LLM reasoning pipeline. By combining dynamic web search, code execution, and a novel structured memory system called the Mind-Map agent, Agentic Reasoning empowers LLMs to conduct reliable, traceable, and deeply informed reasoning—making it especially well-suited for research-heavy, real-world problem solving.

Developed by the researchers behind the paper “Agentic Reasoning: A Streamlined Framework for Enhancing LLM Reasoning with Agentic Tools”, this framework has demonstrated state-of-the-art performance among public models, rivaling even proprietary systems like OpenAI Deep Research when paired with capable base models such as DeepSeek-R1.

Why Agentic Reasoning Stands Out

1. Agentic Tool Integration for Real-World Grounding

Unlike standard LLM prompting, Agentic Reasoning doesn’t rely solely on internal knowledge. Instead, it dynamically invokes external tools during reasoning:

- Web search (via You.com, Bing, or Jina) to fetch current, factual information.

- Code execution to validate hypotheses, perform calculations, or analyze data.

- Structured memory to retain context and prevent drift over extended reasoning.

This tool-augmented approach ensures that conclusions are grounded in real evidence—not just statistical patterns from training data.
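The idea of invoking tools in the middle of reasoning can be sketched as a small dispatch loop. This is a minimal illustration under stated assumptions, not the framework's actual implementation: the `ToolCall` type, the tool names, and both stub functions are hypothetical, and the search stub returns canned text instead of calling You.com, Bing, or Jina.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ToolCall:
    """A request emitted by the model mid-reasoning (hypothetical shape)."""
    tool: str
    argument: str


def web_search(query: str) -> str:
    # Stub: a real agent would call a search backend here.
    return f"[search results for: {query}]"


def run_code(source: str) -> str:
    # Stub: evaluates one arithmetic expression with builtins disabled.
    # This is NOT a real sandbox; a real agent would isolate execution.
    return str(eval(source, {"__builtins__": {}}, {}))


# Registry mapping tool names to handlers (names are assumptions).
TOOLS: Dict[str, Callable[[str], str]] = {
    "search": web_search,
    "code": run_code,
}


def reason(calls: List[ToolCall]) -> List[str]:
    """Execute each tool call in order and collect the observations."""
    memory: List[str] = []
    for call in calls:
        handler = TOOLS.get(call.tool)
        if handler is None:
            memory.append(f"unknown tool: {call.tool}")
            continue
        memory.append(handler(call.argument))
    return memory


observations = reason([
    ToolCall("search", "latest LLM benchmarks"),
    ToolCall("code", "2 ** 10"),
])
print(observations)
```

In a real pipeline the list of calls would not be fixed in advance: the model would emit each call after reading the previous observation, which is what makes the tool use dynamic.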

2. The Mind-Map Agent: Structured Reasoning at Scale

A key innovation in Agentic Reasoning is the Mind-Map agent, which constructs a knowledge graph of the reasoning process. Each node represents a fact, inference, or tool result, while edges encode logical dependencies. This structure:

- Maintains coherence across long, branching reasoning chains.

- Enables traceability: users can inspect why a conclusion was reached.

- Reduces redundancy and contradiction by tracking prior steps.

For tasks requiring dozens of search queries or iterative code runs, the Mind-Map agent prevents the “reasoning collapse” that often plagues naive tool-augmented LLMs.
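To make the graph idea concrete, here is a minimal sketch of a reasoning graph with a trace function. The `Node` and `MindMap` classes, their fields, and the sample entries are all hypothetical and chosen for illustration; the actual Mind-Map agent may store and query its graph quite differently.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    """One reasoning step: a fact, an inference, or a tool result."""
    node_id: str
    kind: str  # "fact", "inference", or "tool_result"
    text: str
    depends_on: List[str] = field(default_factory=list)


class MindMap:
    """Hypothetical in-memory reasoning graph keyed by node id."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}

    def add(self, node: Node) -> None:
        # Reject edges that point at steps which were never recorded.
        for dep in node.depends_on:
            if dep not in self.nodes:
                raise KeyError(f"unknown dependency: {dep}")
        self.nodes[node.node_id] = node

    def trace(self, node_id: str) -> List[str]:
        """Return the ids that support `node_id`, dependencies first."""
        ordered: List[str] = []
        seen = set()

        def visit(current: str) -> None:
            if current in seen:
                return
            seen.add(current)
            for dep in self.nodes[current].depends_on:
                visit(dep)
            ordered.append(current)

        visit(node_id)
        return ordered


# Made-up entries, for illustration only.
mind_map = MindMap()
mind_map.add(Node("n1", "tool_result", "Search result A"))
mind_map.add(Node("n2", "fact", "Known fact B"))
mind_map.add(Node("n3", "inference", "Conclusion from A and B", ["n1", "n2"]))
print(mind_map.trace("n3"))
```

Calling `trace` on a conclusion walks its dependency edges and lists every supporting step before the conclusion itself, which is the kind of audit trail the traceability point describes.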

3. Best-in-Class Web Search Capabilities

The framework includes a highly optimized Web-Search agent, refined through extensive experimentation. It outperforms prior search-augmented reasoning methods by intelligently refining queries, filtering noise, and integrating results into the reasoning graph. This is critical for deep research tasks where precision and relevance directly impact final accuracy.
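As a rough illustration of what filtering noise can mean in practice, the sketch below deduplicates search results by URL and keeps only those whose snippet overlaps sufficiently with the query. The scoring rule, the threshold, the result shape, and the sample data are all assumptions made for this example; the framework's Web-Search agent is not claimed to work this way.

```python
from typing import Dict, List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the words and strip basic punctuation."""
    words = (word.strip(".,!?").lower() for word in text.split())
    return {word for word in words if word}


def overlap_score(query: str, snippet: str) -> float:
    """Fraction of query tokens that also appear in the snippet."""
    query_tokens = tokenize(query)
    if not query_tokens:
        return 0.0
    return len(query_tokens & tokenize(snippet)) / len(query_tokens)


def filter_results(
    query: str,
    results: List[Dict[str, str]],
    threshold: float = 0.5,
) -> List[Dict[str, str]]:
    """Keep unique results whose score meets the threshold, best first."""
    seen_urls = set()
    kept = []
    for result in results:
        if result["url"] in seen_urls:
            continue  # drop duplicate URLs
        seen_urls.add(result["url"])
        score = overlap_score(query, result["snippet"])
        if score >= threshold:
            kept.append((score, result))
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [result for _, result in kept]


# Made-up results, for illustration only.
results = [
    {"url": "https://example.com/a", "snippet": "Mind map memory for LLM reasoning"},
    {"url": "https://example.com/b", "snippet": "Cooking recipes for the weekend"},
    {"url": "https://example.com/a", "snippet": "Mind map memory for LLM reasoning"},
]
print(filter_results("LLM reasoning memory", results))
```

A production agent would likely use a stronger relevance signal than token overlap, but the shape of the step stays the same: score, filter, and pass only the surviving results on to the reasoning graph.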

Ideal Use Cases

Agentic Reasoning shines in scenarios where:

- Deep research is required (e.g., competitive intelligence, scientific literature synthesis, or market trend analysis).

- Multi-hop reasoning involves external validation (e.g., “Does this claim hold given today’s news?” or “Can we reproduce this result with real data?”).

- Auditability matters: users need to verify or explain how a conclusion was reached—common in academic, legal, or technical domains.

It’s less suited for simple Q&A or tasks that don’t benefit from external tooling. But for anything demanding rigor, recency, and structured logic, it’s a compelling choice.

Solving Real Pain Points

Standard LLMs struggle with three core limitations in complex reasoning:

- Hallucination: Generating plausible but false information.
  → Agentic Reasoning mitigates this by grounding claims in retrieved evidence.

- Static knowledge: Inability to access post-training data.
  → Live web search ensures responses reflect the current world.

- Poor traceability: “Black-box” reasoning with no audit trail.
  → The Mind-Map agent provides a transparent, inspectable reasoning graph.

Together, these features help users make decisions with higher confidence and verifiability—essential in professional and research settings.

Getting Started

Agentic Reasoning is designed for hands-on evaluation, though it requires access to external APIs. Here’s how to run it:

- Install dependencies:

  conda env create -f environment.yml

- Set API keys in your environment:

  - OPENAI_API_KEY (or another remote LLM provider)

  - YDC_API_KEY (for You.com search, used in deep research mode)

  - Optional: JINA_API_KEY and BING_SUBSCRIPTION_KEY for alternative search backends

- Run the main script:

  python scripts/run_agentic_reason.py --use_jina True --jina_api_key "your_key" --bing_subscription_key "your_key" --remote_model "gpt-4o" --mind_map True --deep_research True

The --mind_map and --deep_research flags enable core advanced features. Note that although the framework can be run as shown, it is under active development and may change rapidly.
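Before launching the script, it can help to confirm that the expected keys are actually set. The following is a small pre-flight sketch and not part of the project: the `check_keys` helper is hypothetical, and treating OPENAI_API_KEY and YDC_API_KEY as required is an assumption based on the list above (a different remote LLM provider would need a different key).

```python
import os
from typing import Dict, List, Mapping

# Assumption: these two are needed for the default deep research setup.
REQUIRED_KEYS = ["OPENAI_API_KEY", "YDC_API_KEY"]
# Only needed when using the alternative search backends.
OPTIONAL_KEYS = ["JINA_API_KEY", "BING_SUBSCRIPTION_KEY"]


def check_keys(env: Mapping[str, str]) -> Dict[str, List[str]]:
    """Report which required and optional keys are missing or empty."""
    return {
        "missing_required": [key for key in REQUIRED_KEYS if not env.get(key)],
        "missing_optional": [key for key in OPTIONAL_KEYS if not env.get(key)],
    }


report = check_keys(os.environ)
if report["missing_required"]:
    print("Missing required keys:", ", ".join(report["missing_required"]))
else:
    print("All required keys are set.")
if report["missing_optional"]:
    print("Optional keys not set:", ", ".join(report["missing_optional"]))
```

The helper takes the environment as a parameter rather than reading `os.environ` directly, so it can be exercised with a plain dictionary.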

Current Limitations

Prospective adopters should consider:

- Pre-alpha status: The project is not yet production-ready; expect breaking changes.

- API dependency: Requires multiple third-party services (OpenAI, You.com, etc.), which may incur costs or access restrictions.

- Manual orchestration: Full automation (e.g., “auto research”) remains on the roadmap (TODO list).

These factors make it best suited for experimentation, research prototyping, or as a reference architecture—not for mission-critical deployments without further hardening.

Summary

Agentic Reasoning extends tool-augmented LLMs by combining dynamic external tool use with structured, graph-based memory. Its Mind-Map agent and Web-Search module enable deep, coherent, and verifiable reasoning, addressing key weaknesses of conventional LLM approaches. While still in active development, it offers a useful open-source blueprint for anyone tackling complex, research-intensive problems with AI. For technical decision-makers, researchers, and engineers who need reliable, evidence-backed reasoning, Agentic Reasoning is worth exploring today.