Medical image segmentation—especially in 3D CT scans—is a cornerstone of clinical decision support, surgical planning, and radiological research. Yet, despite the rise of powerful foundation models like Meta’s Segment Anything Model (SAM), practical adoption in medical contexts remains bottlenecked by annotation overhead. Traditional approaches, including medical adaptations of SAM, demand slice-by-slice manual prompts across entire datasets—a labor-intensive process that scales poorly with data volume.

Enter MedLSAM: the first fully automatic medical adaptation of SAM for 3D CT imaging. Developed by OpenMedLab, MedLSAM reimagines how segmentation is performed in volumetric medical data by decoupling localization from segmentation. It introduces MedLAM (Localize Anything Model for 3D Medical Images), a self-supervised foundation model that pinpoints anatomical structures using just a handful of template scans annotated with six extreme points. These localizations then guide SAM to perform accurate segmentation—without requiring any additional annotations on the target (query) data.

The result? High-quality segmentation across 38 distinct organs with near-constant annotation effort, regardless of dataset size. This breakthrough makes large-scale, automated medical image analysis not just feasible—but practical.

How MedLSAM Redefines Medical Segmentation Workflow

The Two-Stage Architecture: Localize First, Segment Second

MedLSAM’s innovation lies in its modular design:

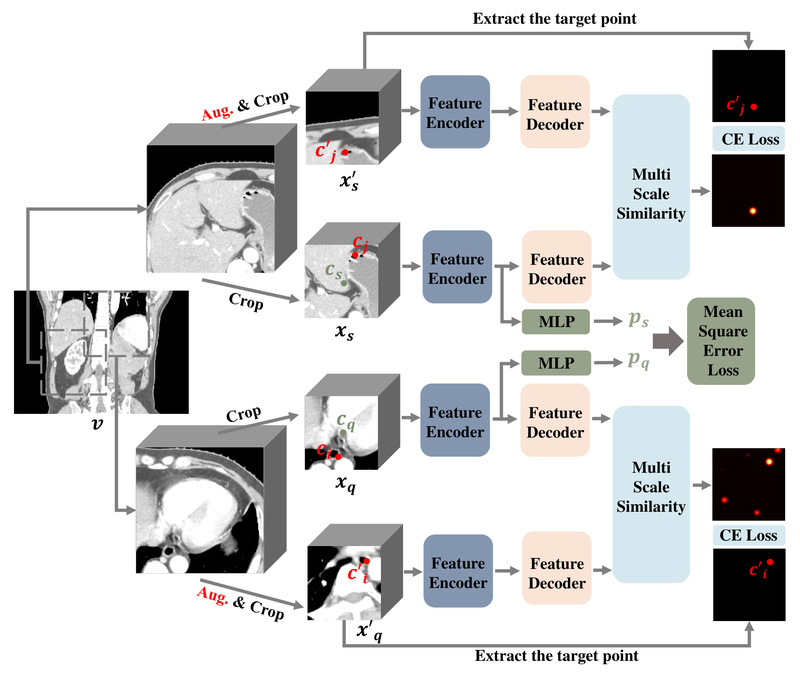

- MedLAM handles 3D localization: Given a few “support” CT scans with minimal extreme-point annotations (e.g., leftmost, rightmost, topmost, bottommost, frontmost, and rearmost points of an organ across axial, sagittal, and coronal views), MedLAM learns to generalize and locate the same anatomical structure in entirely new, unlabeled “query” scans.

- SAM (or MedSAM) handles 2D slice segmentation: Once MedLAM generates a tight 2D bounding box for each slice of the query volume, SAM automatically segments the organ within that box—no manual prompts needed per slice.

This separation dramatically reduces human involvement. Instead of annotating hundreds or thousands of slices, you annotate only six points on 3–10 template scans, and MedLSAM handles the rest.

Annotation Efficiency That Scales

In conventional SAM-based pipelines, annotation burden grows linearly with dataset size. MedLSAM breaks this dependency. Whether you’re processing 10 scans or 10,000, the annotation workload stays fixed—limited to the initial support set. This makes MedLSAM uniquely suited for:

- Large retrospective studies

- Multi-institutional collaborations

- Clinical deployment where labeling resources are scarce

Experiments on StructSeg and WORD datasets (covering 38 organs) confirm that MedLSAM achieves segmentation accuracy comparable to fully supervised models and manual-prompt SAM variants—with orders of magnitude less labeling.

Practical Strengths for Real Projects

Plug-and-Play with Existing SAM Ecosystem

MedLSAM doesn’t reinvent the wheel—it enhances it. You can use either the original SAM or the medical-optimized MedSAM as the segmentation backend. This compatibility means teams already familiar with SAM can adopt MedLSAM with minimal retraining or architectural overhaul.

Flexible Localization Modes: SPL vs. WPL

MedLSAM offers two inference strategies to balance speed and precision:

- Whole-Patch Localization (WPL): Processes the entire volume at once. Faster but may sacrifice accuracy for small or complex structures.

- Sub-Patch Localization (SPL): Divides the volume into overlapping sub-patches along the slice axis, enabling finer-grained localization. Recommended for challenging anatomies.

Users simply specify bbox_mode = SPL or WPL in the config file—no code changes required.

Zero Preprocessing Burden

Unlike many medical AI tools, MedLSAM performs on-the-fly normalization and intensity handling during inference. You do not need to preprocess your CT images—just point the system to raw .nii.gz files, and it handles the rest. This reduces pipeline complexity and avoids preprocessing-induced artifacts.

Getting Started: From Installation to Inference

Minimal Setup Requirements

MedLSAM runs on standard deep learning stacks:

- Python 3.10

- PyTorch ≥ 1.11

- Libraries:

nibabel,SimpleITK,MONAI,tqdm,scipy

Installation takes minutes:

conda create -n medlsam python=3.10 -y conda activate medlsam pip install torch git clone https://github.com/openmedlab/MedLSAM cd MedLSAM && pip install -e .

Model Checkpoints

Download three pretrained weights:

medlam.pth(MedLAM localization model)sam_vit_b_01ec64.pth(original SAM) ormedsam_vit_b.pth(medical SAM)

Place them in the checkpoint/ directory.

Data Organization (No Moving Files!)

MedLSAM uses text files to reference data—no need to restructure folders. Create four .txt files:

support_image.txt/support_label.txt: Paths to 3–10 annotated template scansquery_image.txt/query_label.txt: Paths to unlabeled scans to segment

Ensure image-label pairs are aligned by line order (e.g., line 1 in image list ↔ line 1 in label list).

Run Inference in One Command

For SPL-based segmentation:

CUDA_VISIBLE_DEVICES=0 python MedLSAM_SPL_Inference.py --config_file your_config.txt

Outputs include:

- Segmentation masks (

.npzand.png) - Per-organ Dice Similarity Coefficient (DSC) scores

A single GPU with ≥12GB VRAM is recommended.

Ideal Use Cases for Teams and Researchers

MedLSAM excels in scenarios where:

- Rapid prototyping is needed (e.g., validating segmentation feasibility across new organs)

- Labeling resources are limited (e.g., startups, academic labs, hospitals in low-resource settings)

- Scalability matters (e.g., building population-scale imaging databases)

It’s particularly valuable for projects focused on thoracic, abdominal, or head-and-neck CT analysis, given its training on diverse anatomical structures from StructSeg and WORD.

Important Limitations to Acknowledge

While powerful, MedLSAM isn’t a universal solution:

- Modality-specific: Designed exclusively for 3D CT—not validated on MRI, ultrasound, or X-ray.

- Not zero-shot: Requires extreme-point annotations on support scans. True zero-shot segmentation (no labels at all) isn’t supported.

- Anatomy coverage: Trained on 38 organs across two datasets; performance on rare or unseen structures may degrade.

- Prompt limitations: Currently supports bounding boxes derived from extreme points only—no scribble, point, or text prompts.

- License: Distributed under CC-BY-NC 4.0, prohibiting commercial use without permission.

These constraints make MedLSAM best suited for non-commercial research, academic validation, and internal prototyping—not direct deployment in commercial clinical products.

Summary

MedLSAM represents a significant leap toward practical, scalable medical image segmentation. By combining self-supervised localization (MedLAM) with the segmentation prowess of SAM, it slashes annotation requirements while preserving accuracy. For project leaders and technical decision-makers working with 3D CT data, MedLSAM offers a compelling path to automate segmentation workflows—without drowning in manual labeling. If your goal is to segment anatomical structures efficiently across large CT datasets with minimal human effort, MedLSAM deserves a serious look.