Keypoint detection—identifying specific, semantically meaningful points on objects like joints on a human body, facial landmarks on an animal, or corners on a piece of furniture—is foundational to countless real-world applications. From enabling expressive character animation and robotic manipulation to powering virtual fitting rooms and sports analytics, accurate and flexible keypoint detection is essential.

Yet most existing solutions are limited: they’re built for narrow domains (e.g., human pose only), rely on rigid two-stage pipelines (detect object → estimate keypoints), and struggle when faced with the messy diversity of real-world images. What if you could detect any keypoint—on a horse, a hand, a sofa, or a fly—with a single, unified model, simply by describing what you’re looking for?

Enter X-Pose: the first end-to-end, prompt-based keypoint detection framework that works across articulated, rigid, and soft objects using either textual or visual prompts. Developed by researchers at IDEA and The Chinese University of Hong Kong, Shenzhen, X-Pose redefines what’s possible in multi-object keypoint detection by combining strong generalization, multi-modal prompting, and a massive, unified training dataset—UniKPT.

The Problem with Traditional Keypoint Detectors

Conventional keypoint detectors are highly specialized. Models trained on COCO work well for humans but fail completely on animals. Those tuned for hands or faces can’t generalize to furniture or vehicles. Worse, many rely on two-stage architectures: first detect the object bounding box, then regress keypoints within it. This introduces error propagation—if the box is off, the keypoints are too—and limits flexibility in open-world scenarios where object categories are unknown or mixed.

Moreover, these models assume fixed keypoint definitions. Ask them to detect 68 facial landmarks on a cat? They’ll either ignore the request or produce nonsense. This rigidity makes them impractical for applications like wildlife monitoring, cross-category product inspection, or interactive AR experiences that must handle diverse objects dynamically.

One Model, Any Keypoint: How X-Pose Works

X-Pose breaks this mold by treating keypoint detection as a promptable task. Instead of deploying separate models for each object type, you use a single X-Pose model and provide a textual prompt (e.g., "horse" or "face") or a visual prompt (e.g., a reference image with annotated keypoints). The model then outputs the corresponding keypoints in a single forward pass—no object detection stage required.

This is made possible through an end-to-end architecture that aligns vision and language via cross-modality contrastive learning. When you input "person" and the model sees a human in the image, it doesn’t just detect generic joints—it activates the precise 17-point COCO skeleton. Likewise, "face" triggers the 68-point facial landmark structure. The system even defaults intelligently: if the category is ambiguous, it falls back to a broad animal skeleton that covers many unseen classes.

UniKPT: The Engine Behind X-Pose’s Generalization

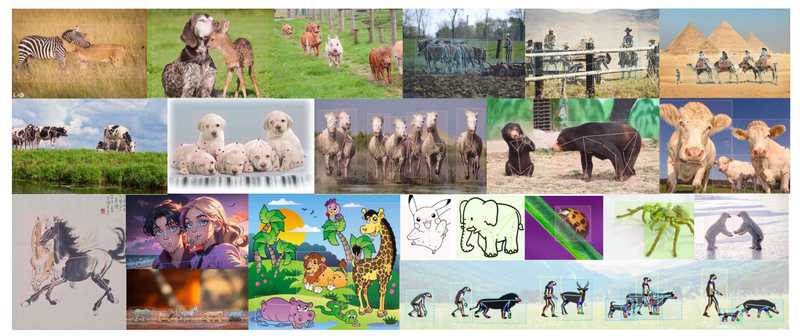

X-Pose’s versatility stems from UniKPT, a novel unified dataset that merges 13 public keypoint benchmarks into one coherent training corpus. UniKPT spans 1,237 object categories, 338 distinct keypoint types, and over 418,000 instances—from humans and macaques to desert locusts, cars, tables, and skirts.

Critically, each keypoint comes with a textual description (e.g., “left knee” or “rear hoof”), enabling the model to learn semantic correspondences between words and visual structures. This allows X-Pose to generalize to unseen categories: if it has never seen a kangaroo during training but understands “hind leg” and “tail” from other animals, it can still infer plausible keypoints.

UniKPT’s scale and diversity are why X-Pose performs robustly across image styles, lighting conditions, and poses—making it uniquely suited for in-the-wild deployment.

Real-World Performance That Stands Out

X-Pose doesn’t just generalize—it excels quantitatively. In fair comparisons against state-of-the-art methods:

- It outperforms non-promptable detectors by +27.7 AP

- It beats visual-prompt-based approaches by +6.44 PCK

- It surpasses textual-prompt-based models by +7.0 AP

Even more compelling are its qualitative results: X-Pose accurately localizes fine-grained keypoints on complex scenes—such as 68 facial landmarks on dogs, cats, and even cartoon characters—despite never being explicitly trained on those combinations. This demonstrates a rare blend of precision and adaptability.

Getting Started with X-Pose

Deploying X-Pose is straightforward. After cloning the repository and installing dependencies, you can run inference on a single image with a simple command:

CUDA_VISIBLE_DEVICES=0 python inference_on_a_image.py -c config/UniPose_SwinT.py -p weights/unipose_swint.pth -i your_image.jpg -o output_dir -t "face" -k "68_face_points"

The -t flag specifies the object category (e.g., "person", "horse", "car"), while -k selects a predefined keypoint skeleton from predefined_keypoints.py. If you omit -k, X-Pose automatically infers the most appropriate structure based on the category.

For interactive experimentation, launch the Gradio demo:

python app.py

This provides a user-friendly interface to test X-Pose without writing code.

Current Limitations

While X-Pose is production-ready for inference, a few limitations exist:

- Training code is not yet public, so users cannot fine-tune or retrain from scratch.

- Visual prompting (using reference images as input) is not fully supported in the current demo; textual prompts are the primary interface.

- The UniKPT dataset is for non-commercial research only, so commercial deployments require careful licensing review.

These are temporary gaps—the team plans to release training code and enhance visual prompting support in future updates.

When Should You Choose X-Pose?

X-Pose is ideal if your project requires:

- Multi-object keypoint detection without maintaining separate models

- Rapid prototyping with minimal labeling (just provide a category name)

- Robustness in real-world conditions—varying lighting, occlusion, pose, and object types

- Cross-domain applications like animal behavior analysis, interactive animation, retail product digitization, or robotics perception

If you’re building systems that must understand the geometry of diverse real-world entities—from humans to household items—X-Pose offers a unified, future-proof foundation.

Summary

X-Pose represents a paradigm shift in keypoint detection: from rigid, category-specific tools to a single, promptable model that understands any object’s structure. Powered by the massive UniKPT dataset and a novel end-to-end architecture, it delivers both state-of-the-art accuracy and unprecedented flexibility. For developers, researchers, and engineers working on perception systems in open-world environments, X-Pose isn’t just an incremental improvement—it’s a new standard.