For teams building AI-powered visual applications—whether in creative tools, digital content platforms, or rapid prototyping—the trade-off between image quality, speed, and compute cost has long been a bottleneck. Diffusion models deliver high-fidelity results but suffer from slow sampling and high computational demands. Autoregressive (AR) models offer faster inference but traditionally struggle with image reconstruction quality, especially at high resolutions like 1024×1024.

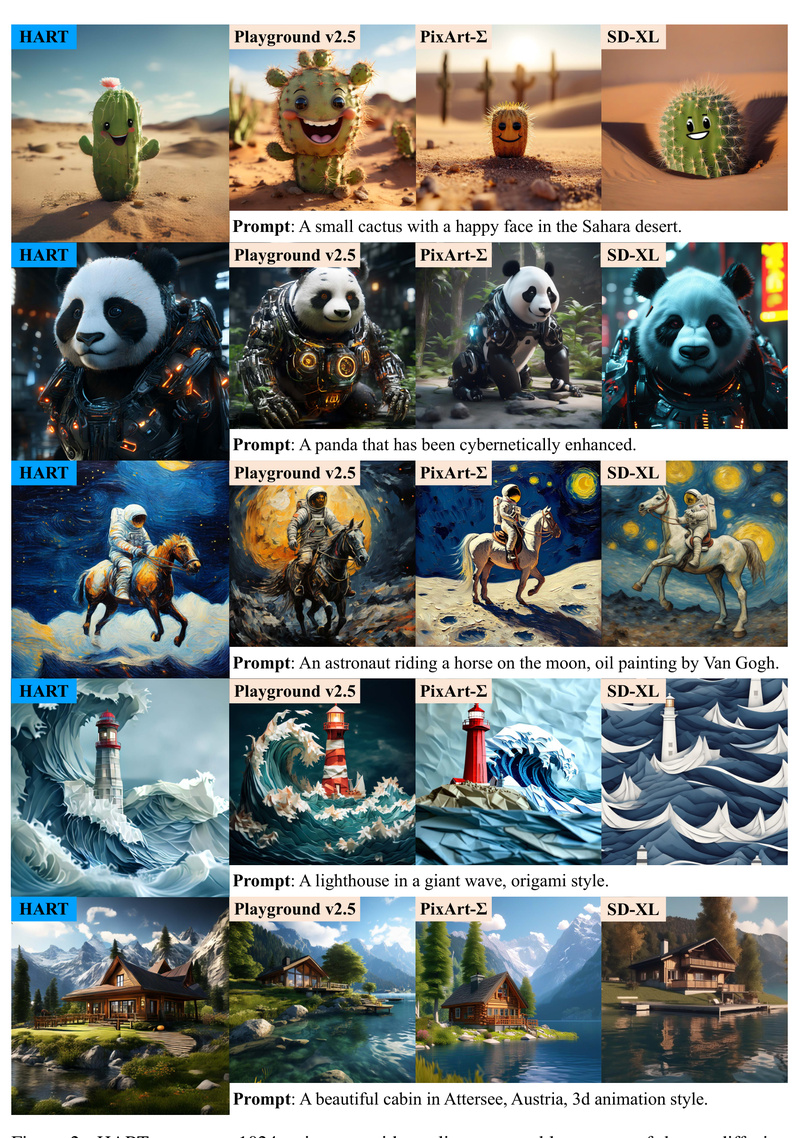

Enter HART (Hybrid Autoregressive Transformer)—a breakthrough that bridges this gap. Developed by MIT’s Han Lab, HART directly generates full-resolution 1024×1024 images with quality that rivals or even surpasses state-of-the-art diffusion models, while running 4.5–7.7× faster and using 6.9–13.4× fewer compute operations (MACs). This makes HART not just a research curiosity, but a practical, production-ready alternative for engineers and product teams seeking efficiency without sacrificing visual fidelity.

Why HART Solves Real-World Problems

Traditional autoregressive image generators rely solely on discrete tokenizers, which compress visual information into a limited vocabulary of tokens. This approach often loses fine details, leading to blurry or distorted reconstructions—especially at high resolutions. On the MJHQ-30K benchmark, discrete-only tokenizers (like those in VAR) achieve a reconstruction FID of 2.11, which directly limits generation quality.

HART introduces a hybrid tokenizer that splits the autoencoder’s continuous latent representation into two parts:

- Discrete tokens that capture the global structure and semantics (“the big picture”),

- Continuous residual tokens that preserve fine-grained details the discrete tokens can’t express.

The discrete component is modeled by a scalable-resolution autoregressive transformer, while the residual component is refined by a lightweight 37M-parameter diffusion module. This hybrid design reduces reconstruction FID from 2.11 to 0.30—a dramatic improvement that translates into a 31% boost in generation FID, from 7.84 down to 5.38.

Critically, HART doesn’t just match diffusion models—it outperforms them in both FID and CLIP Score, while being significantly more efficient. For practitioners tired of waiting minutes per image or provisioning expensive GPU clusters, this efficiency-to-quality ratio is transformative.

Key Technical Advantages

1. High-Quality 1024×1024 Image Generation

Unlike many AR models that max out at 256×256 or 512×512, HART natively supports 1024×1024 resolution without cascaded pipelines or post-upscaling hacks. This simplifies deployment and ensures pixel-perfect output for professional use cases.

2. Unmatched Inference Efficiency

HART achieves 4.5–7.7× higher throughput than leading diffusion models. In practical terms, this means generating dozens of high-res images per minute on a single GPU—ideal for batch processing or interactive applications.

3. Drastically Lower Compute Footprint

With 6.9–13.4× fewer MACs (Multiply-Accumulate operations), HART reduces both energy consumption and hardware requirements. Teams can deploy it on mid-tier GPUs without compromising on resolution or quality.

4. Open Source and Ready to Use

As of October 2024, the HART team has released inference code, a Gradio demo, and pretrained checkpoints—enabling immediate experimentation and integration.

Ideal Use Cases for HART

HART is particularly well-suited for scenarios where speed, resolution, and fidelity must coexist:

- Creative AI Tools: Applications like concept art generators, mood board creators, or design assistants that require instant visual feedback.

- Content Platforms: Social media or e-commerce platforms needing batch generation of high-quality product visuals or avatars.

- Prototyping & Simulation: Rapid iteration in robotics, gaming, or AR/VR where photorealistic 1024px assets are needed on-demand.

- Resource-Constrained Environments: Startups or edge deployments where GPU memory or inference latency is a hard constraint.

If your workflow currently relies on slow diffusion pipelines or low-resolution AR models, HART offers a compelling upgrade path.

Getting Started with HART

Setting up HART is straightforward for developers familiar with PyTorch and Hugging Face models:

-

Clone the repository and install dependencies:

git clone https://github.com/mit-han-lab/hart cd hart conda create -n hart python=3.10 && conda activate hart conda install -c nvidia cuda-toolkit -y pip install -e . cd hart/kernels && python setup.py install

-

Download required models:

- The main HART model:

mit-han-lab/hart-0.7b-1024px - Text encoder:

mit-han-lab/Qwen2-VL-1.5B-Instruct(used for prompt understanding) - Safety filter:

google/shieldgemma-2b(recommended for public demos)

- The main HART model:

-

Run inference:

- Launch a Gradio demo with

app.pyfor interactive testing. - Use

sample.pyfor command-line generation with single or multiple prompts.

- Launch a Gradio demo with

All outputs are saved as standard image files, making integration into existing pipelines seamless.

Limitations and Practical Considerations

While HART represents a major leap forward, adopters should note a few current constraints:

- Dependency on specific components: HART requires Qwen2-VL for text encoding and ShieldGemma for safety filtering in the demo setup. Swapping these out may require custom integration.

- Inference-only release: As of October 2024, training code and tokenizer training scripts are not public. You can fine-tune or train from scratch only if you implement the hybrid tokenizer yourself.

- Early-stage ecosystem: Documentation and community support are still growing. Teams should allocate time for initial debugging and environment setup.

These limitations don’t diminish HART’s value for inference—but they do shape realistic expectations for customization and long-term maintenance.

Summary

HART redefines what’s possible with autoregressive image generation by delivering high-resolution, high-fidelity images with diffusion-beating quality and transformer-level speed. By combining discrete and continuous representations, it overcomes the core weaknesses of prior AR models while slashing computational costs. For technical decision-makers evaluating generative vision models, HART offers a rare balance: production-ready performance, open-source accessibility, and a clear path to faster, leaner visual AI systems. If your project demands 1024×1024 image generation without the latency and cost of diffusion models, HART deserves serious consideration.