Fast-BEV emerges as a compelling solution to a longstanding challenge in autonomous vehicle (AV) perception: achieving both high inference speed and competitive accuracy in Bird’s-Eye View (BEV) representation without relying on resource-intensive components. Traditional BEV methods often depend on transformer-based view transformations or explicit depth estimation—modules that are powerful but computationally heavy, making real-time on-vehicle deployment difficult. Fast-BEV rethinks this paradigm by demonstrating that strong BEV perception can be achieved through a streamlined, lightweight architecture that prioritizes deployability without sacrificing performance.

Designed explicitly for automotive-grade hardware, Fast-BEV delivers real-time inference while staying competitive with the accuracy of more complex alternatives. Its release marks a shift toward practical, production-ready BEV baselines that balance engineering constraints with perception quality, making it highly relevant for teams working on embedded AV systems, robotics perception stacks, or real-time spatial understanding tasks.

Core Innovations That Enable Speed and Accuracy

Fast-BEV’s effectiveness stems from five carefully engineered components, each addressing a specific bottleneck in conventional BEV pipelines:

Lightweight View Transformation

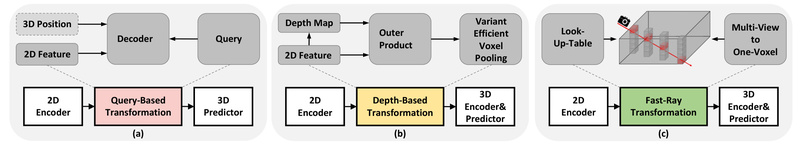

Instead of using heavy transformer modules or depth networks to map 2D image features into 3D BEV space, Fast-BEV introduces a geometry-based, CUDA-optimized view transformation. This approach projects features directly using camera intrinsics and extrinsics, significantly reducing latency while maintaining spatial fidelity. The method is not only faster but also deployment-friendly—requiring no additional trainable parameters for depth or attention.
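To make the geometry concrete, here is a minimal pure-Python sketch of projecting 3D voxel centers into an image and copying the image feature found there. It is not the Fast-BEV CUDA kernel: the function names `project_to_pixel` and `lift_to_voxels` are hypothetical, a pinhole camera with z pointing forward is assumed, and the intrinsics are assumed to be already scaled to the feature-map resolution.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]


def project_to_pixel(
    point_ego: Vec3,
    rotation: Mat3,       # assumed ego -> camera rotation (extrinsics)
    translation: Vec3,    # assumed ego -> camera translation (extrinsics)
    intrinsics: Mat3,     # pinhole matrix K, scaled to the feature map
    image_size: Tuple[int, int],  # (width, height)
) -> Optional[Tuple[int, int]]:
    """Return the (u, v) cell a 3D point projects to, or None if not visible."""
    # Extrinsics: ego frame -> camera frame, p_cam = R @ p_ego + t.
    p_cam = [
        sum(rotation[r][c] * point_ego[c] for c in range(3)) + translation[r]
        for r in range(3)
    ]
    if p_cam[2] <= 0.0:
        return None  # point is behind the camera
    # Intrinsics: camera frame -> homogeneous pixel coordinates.
    uvw = [sum(intrinsics[r][c] * p_cam[c] for c in range(3)) for r in range(3)]
    u = math.floor(uvw[0] / uvw[2])
    v = math.floor(uvw[1] / uvw[2])
    width, height = image_size
    if 0 <= u < width and 0 <= v < height:
        return (u, v)
    return None  # projects outside the image


def lift_to_voxels(
    voxel_centers: Sequence[Vec3],
    feature_map: Sequence[Sequence[Sequence[float]]],  # indexed [v][u][channel]
    rotation: Mat3,
    translation: Vec3,
    intrinsics: Mat3,
) -> List[List[float]]:
    """Copy one image feature vector into each visible voxel; others get zeros."""
    height, width = len(feature_map), len(feature_map[0])
    channels = len(feature_map[0][0])
    voxels = []
    for center in voxel_centers:
        pixel = project_to_pixel(center, rotation, translation, intrinsics, (width, height))
        if pixel is None:
            voxels.append([0.0] * channels)
        else:
            u, v = pixel
            voxels.append(list(feature_map[v][u]))
    return voxels
```

Because the mapping from voxel to pixel depends only on calibration, it can be computed once and reused for every frame, which is one reason a purely geometric transform is cheap at inference time.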

Multi-Scale Image Encoder

To capture rich visual semantics across different resolutions, Fast-BEV employs a multi-scale image encoder (e.g., ResNet variants with feature pyramid enhancements). This allows the model to retain fine-grained details from higher-resolution layers while leveraging broader contextual cues from deeper layers—improving object detection and segmentation robustness in complex urban scenes.
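As a rough illustration of what "multi-scale" means in terms of tensor sizes, the sketch below computes feature-map shapes for one input resolution. The strides 4, 8, and 16 are an assumption (typical for a ResNet with a feature pyramid, but not stated here), and `pyramid_shapes` is a hypothetical helper name.

```python
import math
from typing import Dict, Sequence, Tuple


def pyramid_shapes(
    height: int, width: int, strides: Sequence[int] = (4, 8, 16)
) -> Dict[int, Tuple[int, int]]:
    """Map each stride to the (height, width) of its feature map.

    Ceil division mirrors the padded stride-2 layers of a ResNet-style stem.
    """
    return {s: (math.ceil(height / s), math.ceil(width / s)) for s in strides}


# Example: the 900x1600 input resolution used by the largest reported model.
shapes = pyramid_shapes(900, 1600)
for stride, (h, w) in shapes.items():
    print(f"stride {stride}: {h} x {w}")
```

The lower-stride maps keep more spatial detail at a higher compute cost, while the higher-stride maps are cheaper and carry wider context.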

Efficient BEV Encoder

The BEV encoder is tailored for low-latency execution on GPUs commonly found in automotive platforms. By using optimized convolutional blocks and avoiding unnecessary complexity, it ensures that once features are in BEV space, the downstream processing remains fast and memory-efficient.

Dual-Space Data Augmentation

Overfitting is a common issue in BEV training due to limited real-world datasets. Fast-BEV combats this with strong augmentation strategies applied in both the image domain (e.g., photometric distortions, scaling) and the BEV domain (e.g., rotation, flipping, translation in bird’s-eye coordinates). This dual-space approach enhances generalization without increasing model size.
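The BEV-domain half of this idea can be sketched as a rigid transform applied to ground-plane coordinates. The snippet below is a simplified illustration rather than Fast-BEV's training code: `augment_bev_points` is a hypothetical name, the rotate-then-flip-then-translate order is an assumption, and a real pipeline would apply the same transform to the BEV features and to box headings, not just to box centers.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def augment_bev_points(
    points: Sequence[Point],
    angle_rad: float = 0.0,
    flip_x: bool = False,
    flip_y: bool = False,
    shift: Point = (0.0, 0.0),
) -> List[Point]:
    """Rotate about the ego origin, optionally mirror, then translate."""
    cos_a, sin_a = math.cos(angle_rad), math.sin(angle_rad)
    out = []
    for x, y in points:
        # Counter-clockwise rotation in the ground plane.
        xr = cos_a * x - sin_a * y
        yr = sin_a * x + cos_a * y
        if flip_x:
            xr = -xr  # negate x (mirror across the y axis)
        if flip_y:
            yr = -yr  # negate y (mirror across the x axis)
        out.append((xr + shift[0], yr + shift[1]))
    return out
```

The important constraint is consistency: whatever transform is sampled for a training example has to be applied identically to the inputs and the labels, otherwise the augmentation corrupts supervision instead of regularizing it.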

Multi-Frame Temporal Fusion

Leveraging temporal consistency across video frames, Fast-BEV incorporates a multi-frame fusion mechanism that aggregates features from past frames (e.g., up to four frames with fixed intervals). This boosts detection stability for moving objects and improves overall scene understanding—critical for dynamic driving environments.
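A small sketch of the bookkeeping involved: choosing which frames to fuse at a fixed interval, then combining their features. Several things here are assumptions rather than details from Fast-BEV: the frame count is taken to include the current frame, indices are clamped at the start of a sequence, and channel-wise concatenation is used as one simple fusion choice. Aligning past features to the current ego pose is not modeled.

```python
from typing import List, Sequence


def select_frame_indices(current: int, num_frames: int = 4, interval: int = 1) -> List[int]:
    """Current frame plus earlier frames at a fixed interval, clamped at index 0."""
    return [max(current - k * interval, 0) for k in range(num_frames)]


def fuse_by_concat(frame_features: Sequence[Sequence[float]]) -> List[float]:
    """Concatenate per-frame feature vectors along the channel axis."""
    fused: List[float] = []
    for features in frame_features:
        fused.extend(features)
    return fused


# Example: four frames spaced three steps apart, ending at frame 10.
indices = select_frame_indices(current=10, num_frames=4, interval=3)
```

With concatenation, the channel count seen by the BEV encoder grows linearly with the number of frames, so the frame count is a direct knob on both accuracy and latency.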

Real-World Performance Benchmarks

Fast-BEV’s design translates into measurable gains on standard benchmarks. On the nuScenes validation set using a single NVIDIA 2080 Ti:

- The ResNet-50 variant achieves 52.6 FPS with a 47.3% NDS (nuScenes Detection Score).

- This is faster than both BEVDepth-R50 (41.3 FPS, 47.5% NDS) and BEVDet4D-R50 (30.2 FPS, 45.7% NDS), with NDS 0.2 points below the former and 1.6 points above the latter.

- The largest model (R101@900×1600 input resolution) reaches 53.5% NDS, establishing a new efficiency-accuracy frontier for camera-only BEV systems.

These results indicate that Fast-BEV shifts the usual speed-accuracy trade-off: it runs faster than comparable R50 baselines at similar NDS, offering a viable path to real-time perception on edge devices.
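The R50 figures quoted above are easier to compare as per-frame latency and relative speedup. The sketch below only rearranges those numbers; the dictionary layout and the helper names `latency_ms` and `compare` are illustrative.

```python
from typing import Dict

# FPS and NDS values as quoted in the list above (nuScenes val, single 2080 Ti).
BENCHMARKS: Dict[str, Dict[str, float]] = {
    "Fast-BEV-R50": {"fps": 52.6, "nds": 47.3},
    "BEVDepth-R50": {"fps": 41.3, "nds": 47.5},
    "BEVDet4D-R50": {"fps": 30.2, "nds": 45.7},
}


def latency_ms(fps: float) -> float:
    """Average time per frame in milliseconds."""
    return 1000.0 / fps


def compare(model: str, baseline: str) -> Dict[str, float]:
    """Speedup (ratio of FPS) and NDS difference (percentage points) vs. a baseline."""
    m, b = BENCHMARKS[model], BENCHMARKS[baseline]
    return {"speedup": m["fps"] / b["fps"], "nds_delta": m["nds"] - b["nds"]}


for name, row in BENCHMARKS.items():
    print(f"{name}: {latency_ms(row['fps']):.1f} ms/frame, NDS {row['nds']:.1f}")
for baseline in ("BEVDepth-R50", "BEVDet4D-R50"):
    result = compare("Fast-BEV-R50", baseline)
    print(f"vs {baseline}: {result['speedup']:.2f}x FPS, {result['nds_delta']:+.1f} NDS")
```

In these terms, Fast-BEV-R50 takes roughly 19 ms per frame against roughly 24 ms and 33 ms for the two baselines.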

Practical Deployment Advantages

Beyond architecture, Fast-BEV prioritizes real-world usability:

- CUDA & TensorRT Support: The community-extended CUDA-FastBEV repository provides optimized inference kernels, enabling further speedups through low-level GPU acceleration.

- Quantization Ready: Both Post-Training Quantization (PTQ) and Quantization-Aware Training (QAT) in int8 are supported, facilitating deployment on embedded hardware where low-precision inference matters for throughput (e.g., NVIDIA Jetson Orin).

- Modular Codebase: Built on the OpenMMLab ecosystem (MMDetection, MMSegmentation), it integrates smoothly with existing perception pipelines and supports common training practices.
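For readers new to int8 quantization, the core arithmetic behind post-training quantization can be sketched in a few lines. This is a generic symmetric per-tensor scheme written for illustration, not the CUDA-FastBEV or TensorRT implementation; `quantize_int8` and `dequantize` are hypothetical helper names.

```python
from typing import List, Sequence, Tuple


def quantize_int8(values: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # The scale is the float step represented by one integer level.
    scale = max_abs / 127.0 if max_abs > 0.0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized: Sequence[int], scale: float) -> List[float]:
    """Recover approximate float values from the integer codes."""
    return [q * scale for q in quantized]


weights = [-2.0, -0.31, 0.0, 0.77, 2.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
```

Each restored value differs from the original by at most about half a quantization step. PTQ picks scales like this from calibration data after training, whereas QAT simulates the rounding during training so the weights can adapt to it.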

Getting Started: Setup and Workflow

Adopting Fast-BEV follows a straightforward pipeline:

- Environment: Requires Python ≥3.6, PyTorch ≥1.8.1, MMCV-full 1.4.0, and compatible versions of MMDetection and MMSegmentation.

- Dataset: Organize the nuScenes dataset with standard splits (train/val/test) and precomputed info files (e.g., nuscenes_infos_val_4d_interval3_max60.pkl).

- Pretrained Models: Download the provided pretrained backbone checkpoints (e.g., cascade_mask_rcnn_r50_fpn...) to bootstrap training.

- Training: Launch experiments via config files (e.g., fastbev_m4_r50_s320x880...); models typically converge in 20 epochs.

- Inference & Optimization: Use the CUDA-FastBEV repo for TensorRT conversion and int8 quantization to maximize on-device throughput.

While the main repository labels deployment as “TODO,” the availability of external optimized inference code fills this gap for production use.
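Before launching training, it can help to sanity-check the environment against the versions listed above. The following is a minimal sketch using only the standard library; the helper names and the simplified version parsing are assumptions, and the caller supplies the installed versions so the check itself has no third-party dependencies.

```python
import re
from typing import Dict, List, Tuple

# Requirements as listed in the Environment bullet above.
MINIMUM = {"python": "3.6", "torch": "1.8.1"}
EXACT = {"mmcv-full": "1.4.0"}


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn '1.8.1+cu111' into (1, 8, 1); local and pre-release suffixes are ignored."""
    numbers = []
    for piece in text.split("+")[0].split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        numbers.append(int(match.group()))
    return tuple(numbers)


def check_environment(installed: Dict[str, str]) -> List[str]:
    """Return human-readable problems; an empty list means the check passed."""
    problems = []
    for name, minimum in MINIMUM.items():
        found = installed.get(name)
        if found is None:
            problems.append(f"{name}: not installed (need >= {minimum})")
        elif parse_version(found) < parse_version(minimum):
            problems.append(f"{name}: found {found}, need >= {minimum}")
    for name, pinned in EXACT.items():
        found = installed.get(name)
        if found is None:
            problems.append(f"{name}: not installed (need {pinned})")
        elif parse_version(found) != parse_version(pinned):
            problems.append(f"{name}: found {found}, need {pinned}")
    return problems
```

In practice the `installed` dictionary could be filled from `platform.python_version()`, `torch.__version__`, and `mmcv.__version__`. The MMDetection and MMSegmentation versions are left out because the text only says "compatible versions" without naming them.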

Ideal Use Cases

Fast-BEV is particularly well-suited for:

- Autonomous driving stacks requiring real-time, camera-only BEV perception on embedded GPUs.

- Robotic navigation systems operating in structured urban or campus environments where LiDAR is unavailable or cost-prohibitive.

- Research prototyping where a fast, strong baseline is needed to evaluate novel BEV components without infrastructure overhead.

Its focus on speed, simplicity, and accuracy makes it a pragmatic choice for teams prioritizing deployability over architectural novelty.

Limitations and Considerations

Prospective adopters should note a few constraints:

- Sensor Modality: Fast-BEV is camera-only and does not fuse LiDAR or radar data. Teams using multi-sensor setups will need to integrate it as a vision-only component.

- Dataset Scope: Validation is primarily on nuScenes; applying the model to other datasets (e.g., Waymo, KITTI) may require retraining or adaptation.

- Deployment Docs: The official GitHub lacks detailed deployment guides, though the community-driven CUDA-FastBEV implementation mitigates this for inference optimization.

These factors don’t diminish its value but clarify its niche: high-performance, real-time BEV perception in vision-centric, resource-constrained settings.

Summary

Fast-BEV redefines what’s possible in lightweight BEV perception by proving that high frame rates and strong accuracy can coexist—even without transformers or depth heads. With its deployable architecture, strong benchmarks, and support for quantization and TensorRT, it offers a ready-to-adopt foundation for real-world autonomous systems. For engineers and researchers seeking a fast, accurate, and production-viable BEV baseline, Fast-BEV stands out as a pragmatic and powerful choice.