PytorchInsight is a practical, research-oriented PyTorch library for computer vision practitioners who need reliable, high-performing models without reimplementing architectures from papers. It offers clean, reproducible implementations of state-of-the-art convolutional neural network (CNN) architectures, pre-trained models, and standardized training protocols. One of its standout contributions is the Spatial Group-wise Enhance (SGE) module, a lightweight attention mechanism that improves feature learning with almost no added parameters or computation.

Built with usability in mind, PytorchInsight enables teams and individual researchers to integrate advanced vision backbones—like SGE-ResNet, SE-ResNet, or SK-ResNet—into their workflows with minimal code changes. Whether you’re fine-tuning a model for production deployment or validating academic claims, this library helps you avoid reinventing the wheel while delivering measurable accuracy gains on standard benchmarks like ImageNet and COCO.

Why SGE and Other Attention Modules Matter

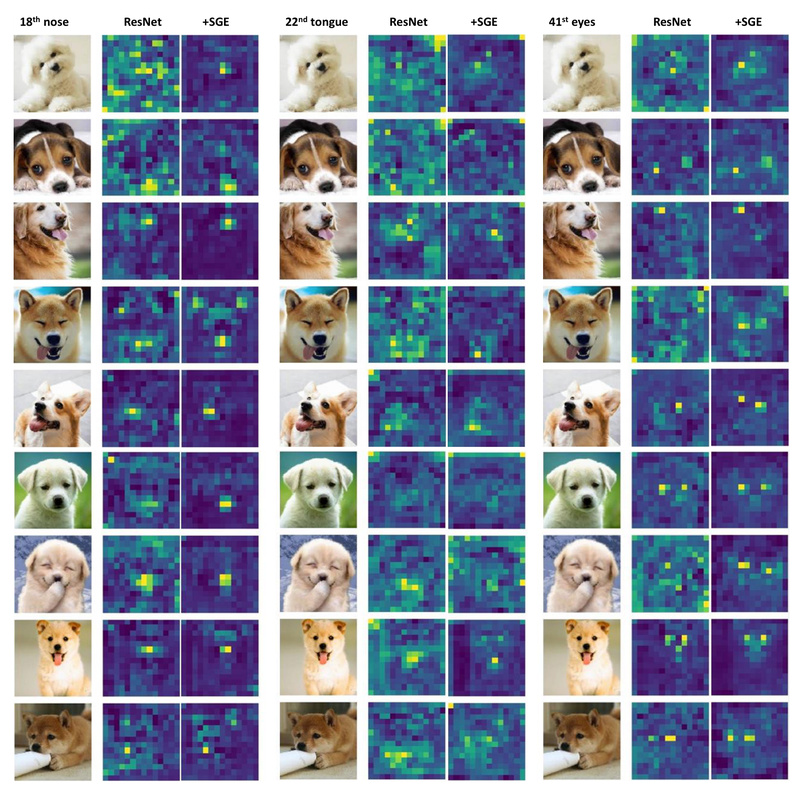

Traditional CNNs often struggle to distinguish between semantically meaningful features and background noise, especially when objects appear in cluttered scenes. The SGE module, introduced in the paper “Spatial Group-wise Enhance: Improving Semantic Feature Learning in Convolutional Networks,” addresses this by enhancing relevant sub-features within each semantic group while suppressing noise—all guided by internal feature similarities rather than external supervision.
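To make the idea concrete, here is a minimal, framework-free sketch of the gating step for a single feature group, following the paper's description: compare each spatial position with the group's global average, normalize those similarities over space, and use a sigmoid of the result as a per-position gate. This is an illustration, not the library's PyTorch code; the function name sge_group, the list-based layout, and the default gamma and beta values are assumptions chosen for readability.

```python
import math

def sge_group(features, gamma=1.0, beta=0.0, eps=1e-5):
    """Apply SGE-style gating to ONE feature group (illustrative sketch).

    features: list of channels, each a flat list over the h*w positions.
    gamma, beta: per-group scale and shift; the defaults are illustrative.
    """
    num_channels = len(features)
    num_positions = len(features[0])

    # Global descriptor of the group: spatial average of every channel.
    g = [sum(ch) / num_positions for ch in features]

    # Similarity between the global descriptor and each local feature vector.
    sim = [sum(g[c] * features[c][i] for c in range(num_channels))
           for i in range(num_positions)]

    # Normalize the similarities over the spatial positions.
    mean = sum(sim) / num_positions
    var = sum((s - mean) ** 2 for s in sim) / num_positions
    std = math.sqrt(var) + eps
    norm = [(s - mean) / std for s in sim]

    # Scale, shift, and squash into a (0, 1) gate per position.
    gate = [1.0 / (1.0 + math.exp(-(gamma * n + beta))) for n in norm]

    # Every channel in the group is rescaled by the same spatial gate.
    out = [[ch[i] * gate[i] for i in range(num_positions)] for ch in features]
    return out, gate

# Two channels over four positions; positions 0 and 3 carry the strong response.
group = [[1.0, 0.1, 0.0, 0.9],
         [0.8, 0.0, 0.1, 1.0]]
enhanced, gate = sge_group(group)
print([round(a, 3) for a in gate])
```

Positions that agree with the group's overall response receive a gate above sigmoid(beta) and the rest are damped, which is how the module emphasizes a group's dominant pattern using only the features themselves.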

Critically, SGE achieves this with almost no extra parameters (a single scale and shift per group) and negligible computational overhead. For example:

- SGE-ResNet50 improves ImageNet Top-1 accuracy by 1.2 percentage points (from 76.4% to 77.6%) over the standard ResNet50.

- On object detection tasks using COCO, it delivers 1.0–2.0% AP gains across popular frameworks like Faster R-CNN, Mask R-CNN, and Cascade R-CNN, with an essentially unchanged parameter count and nearly the same FLOPs as the baseline.

Beyond SGE, PytorchInsight also includes other well-known attention mechanisms such as SE (Squeeze-and-Excitation), SK (Selective Kernel), CBAM, and BAM, allowing side-by-side comparison and flexible integration based on project needs.

Practical Use Cases Where PytorchInsight Shines

This library is particularly valuable in the following scenarios:

1. Improving Production Vision Systems

If your image classification or object detection pipeline relies on ResNet-style backbones and you need a quick, low-risk upgrade, replacing ResNet50 with SGE-ResNet50 can yield consistent accuracy improvements with little change to your inference infrastructure and nearly the same compute cost.

2. Reproducing Research Results

PytorchInsight provides official training logs, pre-trained weights, and exact command-line recipes for models like SGE-ResNet101 (78.8% Top-1 on ImageNet). This eliminates common reproducibility pitfalls, making it ideal for internal benchmarking or academic validation.

3. Rapid Prototyping of Vision Models

The library supports both large models (e.g., ResNet101) and mobile-friendly architectures (e.g., ShuffleNetV2), with tailored training settings such as label smoothing, cosine learning rate decay, and excluding bias parameters from weight decay, so that models of different sizes are trained under settings suited to them.
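For readers unfamiliar with those three settings, the sketch below shows what each one computes in plain Python. It is a minimal illustration under stated assumptions, not the library's training code: all function names are hypothetical, label smoothing uses the common variant that spreads the smoothing mass uniformly over all classes, and the parameter names simply follow PyTorch's usual "layer.weight" / "layer.bias" convention.

```python
import math

def cosine_lr(step, total_steps, base_lr, min_lr=0.0):
    """Learning rate following half a cosine wave from base_lr down to min_lr."""
    progress = min(step, total_steps) / total_steps
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

def smoothed_targets(true_class, num_classes, smoothing=0.1):
    """Soft target: (1 - smoothing) on the true class plus a uniform share."""
    uniform = smoothing / num_classes
    targets = [uniform] * num_classes
    targets[true_class] += 1.0 - smoothing
    return targets

def cross_entropy(logits, targets):
    """Cross-entropy between soft targets and softmax(logits)."""
    peak = max(logits)  # subtract the max for numerical stability
    log_norm = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return -sum(t * (z - log_norm) for t, z in zip(targets, logits))

def split_weight_decay(param_names):
    """Separate parameter names so biases can be trained without weight decay."""
    decay = [n for n in param_names if not n.endswith(".bias")]
    no_decay = [n for n in param_names if n.endswith(".bias")]
    return decay, no_decay

# Learning rate at the start, middle, and end of a 100-step schedule.
print([cosine_lr(s, 100, base_lr=0.1) for s in (0, 50, 100)])

# Loss for a 4-class example with and without label smoothing.
logits = [2.0, 0.5, -1.0, 0.0]
print(cross_entropy(logits, smoothed_targets(0, 4, smoothing=0.0)))
print(cross_entropy(logits, smoothed_targets(0, 4, smoothing=0.1)))

# Which parameters would receive weight decay.
print(split_weight_decay(["conv1.weight", "fc.weight", "fc.bias"]))
```

In a real PyTorch run these would typically map to a cosine scheduler, a loss with a smoothing term, and two optimizer parameter groups with different weight decay values.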

Getting Started Is Straightforward

Adopting PytorchInsight requires minimal setup:

- Clone the repository: git clone https://github.com/implus/PytorchInsight

- Choose a pre-integrated model, for instance sge_resnet50

- Use the provided training or evaluation commands with your ImageNet path

For example, to evaluate SGE-ResNet101:

python -W ignore imagenet.py -a sge_resnet101 --data /path/to/imagenet1k/ -e --resume ../pretrain/sge_resnet101.pth.tar
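If you want to script this, for instance to evaluate several checkpoints in a row, the same command can be assembled as an argument list. The sketch below only reuses the flags shown in the command above; the helper name build_eval_command is hypothetical, and the paths are the same placeholders.

```python
import shlex

def build_eval_command(arch, data_dir, checkpoint):
    """Return the evaluation command as an argument list (for subprocess.run)."""
    return [
        "python", "-W", "ignore", "imagenet.py",
        "-a", arch,              # architecture name, e.g. sge_resnet101
        "--data", data_dir,      # root of the ImageNet directory
        "-e",                    # evaluation only, no training
        "--resume", checkpoint,  # pre-trained weights to load
    ]

cmd = build_eval_command(
    "sge_resnet101",
    "/path/to/imagenet1k/",
    "../pretrain/sge_resnet101.pth.tar",
)
# Print the shell-quoted command so it can be checked before running.
print(shlex.join(cmd))
# To launch it from the directory containing imagenet.py:
#   import subprocess; subprocess.run(cmd, check=True)
```

Passing a list rather than a single string avoids quoting problems when the dataset path contains spaces.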

Pre-trained models are available via Google Drive and Baidu Drive (links in the repo), enabling immediate inference or fine-tuning on custom datasets.

Limitations and Key Considerations

While powerful, PytorchInsight has clear boundaries:

- Vision-focused: It targets CNN-based image tasks (classification, detection) and does not support NLP, audio, or multimodal models.

- Retraining needed: To benefit from modules like SGE, you typically need to retrain the backbone, not just swap in a module post-hoc. ImageNet-scale training requires multi-GPU hardware (the examples assume 8-GPU setups).

- PyTorch only: No TensorFlow or JAX support is provided.

That said, for vision teams working within the PyTorch ecosystem, these constraints are often aligned with existing workflows.

Summary

PytorchInsight lowers the barrier to adopting cutting-edge CNN enhancements like SGE by offering plug-and-play modules, reproducible training protocols, and verified performance gains—all with negligible computational cost. If your work involves improving image recognition accuracy or validating attention mechanisms in real-world settings, this library provides a robust, production-ready foundation without unnecessary complexity.