Optical flow estimation—the task of predicting per-pixel motion between consecutive video frames—is foundational in computer vision applications ranging from autonomous driving to robotics and video analysis. However, many state-of-the-art models suffer from excessive computational demands, large memory footprints, or complex architectures that hinder real-world deployment.

LiteFlowNet, introduced at CVPR 2018 as a spotlight paper, directly addresses these challenges. It delivers higher accuracy than heavier models like FlowNet2 while using just 5.37 million parameters (about 30 times fewer than FlowNet2's 162.49 million) and running 1.36 times faster. Built for practicality, LiteFlowNet combines architectural innovations with engineering discipline to offer a compelling balance of speed, size, and performance, making it a strong choice for projects where efficiency and reliability are non-negotiable.

Why LiteFlowNet Stands Out

Outperforms Larger Models with Far Fewer Resources

When LiteFlowNet was released, FlowNet2 dominated optical flow benchmarks but required 162.49M parameters. LiteFlowNet not only surpassed FlowNet2 on the KITTI 2012 (3.27% vs. 4.82% Out-Noc error) and KITTI 2015 (9.38% vs. 10.41% Fl-all error) leaderboards, but did so with a model of under 6 million parameters. It also edges out PWC-Net, a contemporary lightweight alternative, on KITTI 2015 (9.38% vs. 9.60% Fl-all) while using roughly 39% fewer parameters (5.37M vs. 8.75M).

This isn’t just a theoretical win. For teams working under hardware constraints, this means faster inference, lower power consumption, and easier deployment on edge devices without sacrificing accuracy.
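The size claims above can be checked with a few lines of arithmetic. The snippet below is only a sanity check on the parameter counts quoted in this article; the memory estimate assumes uncompressed 32-bit floats (4 bytes per parameter), which is an assumption rather than a measured file size.

```python
# Parameter counts in millions, as quoted in this article.
PARAMS_M = {"FlowNet2": 162.49, "PWC-Net": 8.75, "LiteFlowNet": 5.37}


def size_ratio(big, small):
    """How many times more parameters `big` has than `small`."""
    return PARAMS_M[big] / PARAMS_M[small]


def fewer_params_pct(model, baseline):
    """Percentage reduction in parameters of `model` relative to `baseline`."""
    return 100.0 * (1.0 - PARAMS_M[model] / PARAMS_M[baseline])


def float32_megabytes(model, bytes_per_param=4.0):
    """Approximate weight storage in MB (assumption: uncompressed float32)."""
    return PARAMS_M[model] * bytes_per_param


print(round(size_ratio("FlowNet2", "LiteFlowNet"), 1))       # 30.3
print(round(fewer_params_pct("LiteFlowNet", "PWC-Net"), 1))  # 38.6
print(round(float32_megabytes("LiteFlowNet"), 1))            # 21.5
```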

Core Innovations That Solve Real Engineering Problems

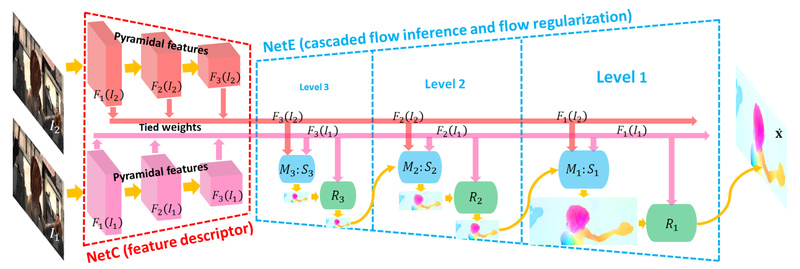

LiteFlowNet’s efficiency stems from four key design choices, each targeting a specific pain point in optical flow systems:

1. Cascaded Flow Inference for Early Correction

Instead of estimating flow in a single pass, LiteFlowNet uses a lightweight cascaded network at each pyramid level. This allows early refinement of coarse predictions, significantly improving accuracy—especially in complex motion regions. It also enables seamless integration of descriptor matching, enhancing robustness without added overhead.
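To make the idea of descriptor matching concrete, here is a minimal NumPy sketch. It is not LiteFlowNet's learned matching unit: it is a hand-crafted brute-force search that, for every pixel, picks the integer displacement within a small window that maximizes the dot product between feature vectors. The function name `match_descriptors` and the `radius` parameter are hypothetical, chosen for illustration.

```python
import numpy as np


def match_descriptors(f1, f2, radius=2):
    """Brute-force matching of feature maps f1, f2 with shape (H, W, C).

    Returns an integer flow field (H, W, 2) holding (dx, dy) per pixel, chosen
    to maximize the correlation f1[y, x] . f2[y + dy, x + dx] within +-radius.
    Illustrative stand-in only; the real network learns this step.
    """
    h, w, _ = f1.shape
    # Zero-pad f2 so that shifted windows never index out of bounds.
    f2_p = np.pad(f2, ((radius, radius), (radius, radius), (0, 0)), mode="constant")
    best_score = np.full((h, w), -np.inf)
    flow = np.zeros((h, w, 2))
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # shifted[y, x] equals f2[y + dy, x + dx] (zero outside the map).
            shifted = f2_p[radius + dy:radius + dy + h, radius + dx:radius + dx + w]
            score = np.sum(f1 * shifted, axis=-1)
            better = score > best_score
            best_score = np.where(better, score, best_score)
            flow[better] = (dx, dy)
    return flow
```

In a cascade, a coarse integer estimate like this would be followed by a second stage that adds a sub-pixel residual, so early errors are corrected before they propagate to finer pyramid levels.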

2. Feature Warping Instead of Image Warping

While FlowNet2 warps input images to align frames, LiteFlowNet warps deep feature maps (via its f-warp layer). This reduces noise propagation and preserves semantic information, leading to cleaner, more consistent flow fields—critical for downstream tasks like object tracking or scene understanding.
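Feature warping itself is a simple operation: each location in the second frame's feature map is resampled at the position the current flow estimate points to. The NumPy sketch below illustrates this with bilinear interpolation. It is not the f-warp layer's actual code; the function name `warp_features`, the (H, W, C) layout, and the clamp-to-border handling are assumptions made for the example.

```python
import numpy as np


def warp_features(feat, flow):
    """Bilinearly sample feat (H, W, C) at positions displaced by flow (H, W, 2).

    flow[..., 0] is the horizontal (x) displacement and flow[..., 1] the
    vertical (y) displacement. Out-of-range sampling positions are clamped to
    the border (an assumption of this sketch).
    """
    h, w, _ = feat.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    x = np.clip(xs + flow[..., 0], 0, w - 1)
    y = np.clip(ys + flow[..., 1], 0, h - 1)
    # Integer corners surrounding each sampling position.
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    # Fractional offsets, broadcast over the channel axis.
    wx = (x - x0)[..., None]
    wy = (y - y0)[..., None]
    top = (1 - wx) * feat[y0, x0] + wx * feat[y0, x1]
    bottom = (1 - wx) * feat[y1, x0] + wx * feat[y1, x1]
    return (1 - wy) * top + wy * bottom
```

With a zero flow field the function returns the input unchanged, which is a quick way to check an implementation like this.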

3. Feature-Driven Local Convolution for Boundary Refinement

Optical flow often suffers from outliers and blurry motion boundaries. LiteFlowNet introduces a novel f-lconv layer that applies local convolutions guided by feature context, effectively suppressing outliers and sharpening motion discontinuities. This results in sharper, more physically plausible flow estimates.
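The key property of a local convolution is that every pixel gets its own kernel, unlike an ordinary convolution that shares one kernel across the image. The sketch below illustrates the idea under a strong simplification: the per-pixel kernel weights are computed by a hand-written rule (a softmax over negative squared feature distances to each neighbor) instead of being predicted by a network. The function name and this weighting rule are assumptions for illustration, not the f-lconv layer's implementation.

```python
import numpy as np


def feature_driven_local_conv(flow, feat, k=3):
    """Smooth flow (H, W, 2) with a different k x k kernel at every pixel.

    Neighbors whose features resemble the center pixel's features get high
    weight; dissimilar neighbors (e.g. across an object boundary) get almost
    none. Hand-crafted stand-in for learned, feature-driven kernels.
    """
    flow = np.asarray(flow, dtype=float)
    feat = np.asarray(feat, dtype=float)
    h, w, _ = flow.shape
    r = k // 2
    flow_p = np.pad(flow, ((r, r), (r, r), (0, 0)), mode="edge")
    feat_p = np.pad(feat, ((r, r), (r, r), (0, 0)), mode="edge")
    logits, neighbor_flows = [], []
    for dy in range(k):
        for dx in range(k):
            nb_feat = feat_p[dy:dy + h, dx:dx + w]
            logits.append(-np.sum((nb_feat - feat) ** 2, axis=-1))
            neighbor_flows.append(flow_p[dy:dy + h, dx:dx + w])
    logits = np.stack(logits, axis=0)              # (k*k, H, W)
    logits -= logits.max(axis=0, keepdims=True)    # numerical stability
    weights = np.exp(logits)
    weights /= weights.sum(axis=0, keepdims=True)  # each kernel sums to 1
    return np.sum(weights[..., None] * np.stack(neighbor_flows, axis=0), axis=0)
```

Because the weights collapse toward zero across a feature discontinuity, an isolated outlier inside a region is averaged away while the flow on either side of a boundary is not mixed.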

4. Efficient Pyramidal Feature Extraction

The network uses a streamlined encoder-decoder pyramid that balances receptive field coverage with computational cost. This structure ensures multi-scale motion cues are captured efficiently—essential for handling both large displacements and fine-grained movements.
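The coarse-to-fine control flow behind a pyramid can be sketched independently of the network. In the example below, 2x2 average pooling stands in for the learned strided convolutions, and `estimate_residual` is a hypothetical callback representing whatever per-level flow estimator is used. The sketch also assumes the input height and width are divisible by 2^(levels - 1).

```python
import numpy as np


def downsample2(x):
    """Halve the resolution of x (H, W, C) by 2x2 average pooling."""
    h, w, c = x.shape
    h2, w2 = h // 2, w // 2
    return x[:h2 * 2, :w2 * 2].reshape(h2, 2, w2, 2, c).mean(axis=(1, 3))


def build_pyramid(x, levels):
    """Return a list of feature maps, finest first, coarsest last."""
    pyramid = [x]
    for _ in range(levels - 1):
        pyramid.append(downsample2(pyramid[-1]))
    return pyramid


def upsample_flow2(flow):
    """Double the resolution (nearest neighbor) and double the vectors,
    since a displacement of 1 pixel at level l+1 is 2 pixels at level l."""
    return 2.0 * flow.repeat(2, axis=0).repeat(2, axis=1)


def coarse_to_fine(pyr1, pyr2, estimate_residual):
    """Refine flow from the coarsest to the finest pyramid level.

    estimate_residual(f1, f2, flow) is a hypothetical per-level estimator
    that returns a correction with the same shape as flow.
    """
    h, w, _ = pyr1[-1].shape
    flow = np.zeros((h, w, 2))
    for level in reversed(range(len(pyr1))):
        if level < len(pyr1) - 1:
            flow = upsample_flow2(flow)
        flow = flow + estimate_residual(pyr1[level], pyr2[level], flow)
    return flow
```

Large displacements are cheap to find at the coarse levels, where they span only a few pixels, while the finer levels only have to estimate small corrections.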

Ideal Use Cases for Practitioners

LiteFlowNet excels in scenarios where real-time performance, limited compute, or model size constraints are decisive factors:

- Autonomous vehicles and ADAS: Reliable flow estimation on KITTI makes it suitable for motion understanding in driving scenes.

- Drones and robotics: Low-latency inference enables real-time navigation and obstacle avoidance on embedded GPUs.

- Mobile or edge video analytics: Its compact size allows integration into mobile apps or IoT devices without cloud dependency.

- Industrial automation: Stable, deterministic performance supports high-throughput visual inspection pipelines.

Its strong benchmark results on Sintel (challenging synthetic scenes with motion blur and occlusions) and KITTI (real-world autonomous driving data) provide confidence in its robustness across diverse environments.

Getting Started Without a Research Background

You don’t need to train from scratch to benefit from LiteFlowNet. The official repository provides:

- Pre-trained models optimized for specific benchmarks:

  - liteflownet: trained on FlyingChairs + FlyingThings3D

  - liteflownet-ft-sintel: fine-tuned for Sintel

  - liteflownet-ft-kitti: fine-tuned for KITTI

- Ready-to-run testing scripts that accept image pair lists and output standard .flo flow files.
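To consume the output in Python, the .flo files can be parsed directly. The sketch below assumes the standard Middlebury layout: a float32 tag equal to 202021.25, then int32 width and height, then interleaved (u, v) float32 values in row-major order, all little-endian. The helper names `read_flo` and `write_flo` are illustrative and not part of the official repository.

```python
import struct

import numpy as np

FLO_MAGIC = 202021.25  # sanity-check tag at the start of every .flo file


def write_flo(path, flow):
    """Write a flow field of shape (H, W, 2) to a Middlebury .flo file."""
    h, w, _ = flow.shape
    with open(path, "wb") as f:
        f.write(struct.pack("<fii", FLO_MAGIC, w, h))
        f.write(np.asarray(flow).astype("<f4").tobytes())


def read_flo(path):
    """Read a Middlebury .flo file and return an array of shape (H, W, 2)."""
    with open(path, "rb") as f:
        magic, w, h = struct.unpack("<fii", f.read(12))
        if magic != FLO_MAGIC:
            raise ValueError(f"{path}: bad .flo tag {magic!r}")
        data = np.frombuffer(f.read(), dtype="<f4", count=2 * w * h)
    # Channel 0 is horizontal (u), channel 1 is vertical (v) displacement.
    return data.reshape(h, w, 2).copy()
```

A round trip through `write_flo` and `read_flo` on a small random array is a convenient check before pointing the reader at real model output.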

For developers preferring modern frameworks, community reimplementations are available in PyTorch and TensorFlow, easing integration into existing pipelines. While the original code is based on a modified Caffe (requiring CUDA 8.0 and cuDNN 5.1 for compilation), these ports offer more familiar APIs without sacrificing fidelity to the original architecture.

Fine-tuning is supported but optional—many applications can deploy the provided models out-of-the-box.

Practical Limitations to Consider

LiteFlowNet is not without trade-offs:

- The original implementation relies on a customized Caffe build, which may feel outdated compared to PyTorch or JAX ecosystems. Compilation requires careful environment setup.

- Stage-wise training (as described in the paper) is used to maximize performance, which adds complexity for custom training workflows.

- LiteFlowNet is highly efficient, but its successors LiteFlowNet2 (TPAMI 2020) and LiteFlowNet3 (ECCV 2020) offer further improvements in accuracy and speed. If your project doesn’t require strict compatibility with the original model, these newer versions may be preferable.

That said, for teams needing a proven, lightweight, and well-documented optical flow solution with strong benchmark validation, LiteFlowNet remains an excellent starting point.

Why Choose LiteFlowNet Over Alternatives?

Compared to mainstream options:

| Model | KITTI 2015 (Fl-all) | Parameters (M) | Runtime (ms) |
|---|---|---|---|
| FlowNet2 | 10.41% | 162.49 | 121 |
| PWC-Net | 9.60% | 8.75 | ~40 |
| LiteFlowNet | 9.38% | 5.37 | ~88 |

LiteFlowNet delivers better accuracy than both, with the smallest parameter count of the three. It is faster than FlowNet2 but, at roughly 88 ms versus 40 ms in this comparison, about twice as slow as PWC-Net; its parameter efficiency is what makes it attractive for memory-constrained environments.

It avoids the experimental complexity of newer architectures while providing production-ready performance—a rare combination for a CVPR spotlight paper.

Summary

LiteFlowNet shows that a lightweight model can outperform much larger predecessors when the architecture is carefully engineered. By combining cascaded refinement, feature-level warping, and feature-driven regularization, it tackles practical problems such as flow outliers, blurred motion boundaries, and deployment bottlenecks.

For project leads, engineers, and researchers weighing optical flow solutions, LiteFlowNet offers a pragmatic, high-performing, and compact alternative—ideal for applications where every megabyte and millisecond counts.