Remote sensing imagery—captured from satellites, drones, or aircraft—presents unique challenges for computer vision systems. Objects are often small, densely packed, oriented at arbitrary angles, and embedded in complex backgrounds. Standard deep learning backbones, designed primarily for natural images, frequently struggle to capture the long-range contextual cues essential for accurate interpretation in these scenarios.

Enter LSKNet (Large Selective Kernel Network): a lightweight, domain-aware backbone architecture purpose-built for remote sensing tasks. Introduced in the International Journal of Computer Vision (2024) and earlier at ICCV 2023, LSKNet rethinks how receptive fields are modeled by introducing a dynamic, large-selective-kernel mechanism. This allows the network to adaptively focus on the appropriate spatial context for each object—whether it’s a compact vehicle or an elongated runway—without inflating computational cost.

The result? State-of-the-art performance across classification, object detection, and semantic segmentation benchmarks, all within a compact architecture that’s suitable for deployment on edge devices or large-scale processing pipelines.

Why Remote Sensing Needs a Specialized Backbone

General-purpose vision models like ResNet or EfficientNet are designed and pretrained around the statistical regularities of everyday photos: centered subjects, consistent lighting, and moderate scale variation. Remote sensing images defy these assumptions.

Key challenges include:

- Small object prevalence: Ships, cars, or storage tanks may occupy only a few pixels.

- Variable context requirements: Detecting a bridge may require analyzing a 512×512 region, while identifying a single vehicle might only need 64×64—but standard fixed-kernel convolutions can’t adjust accordingly.

- Orientation diversity: Objects appear at arbitrary rotations, demanding richer spatial understanding.

LSKNet directly addresses these issues by encoding remote sensing prior knowledge into its architecture: namely, that different objects require different ranges of contextual information, and the model should selectively expand its receptive field accordingly.

Core Innovation: The Large Selective Kernel Mechanism

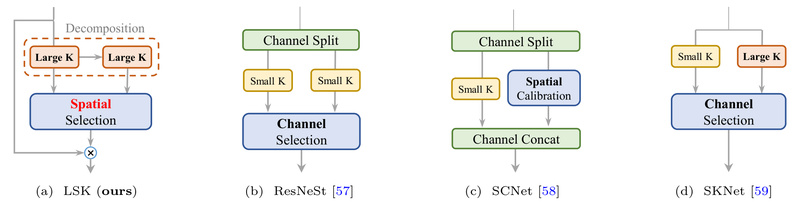

At the heart of LSKNet is its Large Selective Kernel (LSK) module. Instead of relying on a single fixed kernel (e.g., 3×3), it decomposes a large kernel into a sequence of depthwise convolutions with growing kernel sizes and dilation rates, so each step in the sequence sees a progressively wider spatial context. A lightweight spatial selection mechanism then weights these multi-scale features at every location, based on local content, and fuses them.
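To make the mechanism concrete, here is a minimal PyTorch sketch of an LSK-style block. It assumes kernel settings like those in the public LSKNet code (a 5×5 depthwise conv followed by a 7×7 depthwise conv with dilation 3). The class name `LSKBlockSketch` is hypothetical, and the sketch leaves out the normalization, MLP, and stage layout of the full backbone. Treat it as an illustration, not the reference implementation.

```python
import torch
import torch.nn as nn


class LSKBlockSketch(nn.Module):
    """Hypothetical, simplified large selective kernel (LSK) block.

    Assumed settings: 5x5 depthwise conv, then 7x7 depthwise conv with
    dilation 3, giving branches with receptive fields of 5 and 23 pixels.
    """

    def __init__(self, dim: int):
        super().__init__()
        # Large-kernel decomposition: sequential depthwise convs with
        # growing kernel size and dilation ("same" padding keeps H and W).
        self.dw_small = nn.Conv2d(dim, dim, 5, padding=2, groups=dim)
        self.dw_large = nn.Conv2d(dim, dim, 7, padding=9, dilation=3, groups=dim)
        # 1x1 projections that halve the channels of each branch.
        self.proj_small = nn.Conv2d(dim, dim // 2, 1)
        self.proj_large = nn.Conv2d(dim, dim // 2, 1)
        # Turns [avg, max] channel-pooled maps into one spatial mask per branch.
        self.squeeze = nn.Conv2d(2, 2, 7, padding=3)
        self.out_proj = nn.Conv2d(dim // 2, dim, 1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        small = self.dw_small(x)
        large = self.dw_large(small)  # stacked on the small-kernel output
        small, large = self.proj_small(small), self.proj_large(large)

        # Spatial selection: pool across channels, predict per-pixel masks.
        feats = torch.cat([small, large], dim=1)
        avg = feats.mean(dim=1, keepdim=True)
        mx, _ = feats.max(dim=1, keepdim=True)
        masks = torch.sigmoid(self.squeeze(torch.cat([avg, mx], dim=1)))

        # Each location weights small- vs large-context features separately.
        selected = small * masks[:, 0:1] + large * masks[:, 1:2]
        # The fused features act as an attention map on the input.
        return x * self.out_proj(selected)


y = LSKBlockSketch(dim=64)(torch.randn(2, 64, 64, 64))
print(tuple(y.shape))  # (2, 64, 64, 64)
```

Because the sigmoid masks are computed per pixel, a location on a small vehicle can lean on the 5-pixel branch while a location on a runway leans on the 23-pixel branch.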

This design offers two critical advantages:

- Adaptive context modeling: The network automatically emphasizes the kernel size that best captures the relevant spatial extent for a given feature location.

- Computational efficiency: Despite its large receptive fields, LSKNet stays lightweight because it builds them from depthwise, dilated convolutions plus a cheap spatial selection step, avoiding the quadratic growth in cost that a single dense large kernel would incur.
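The efficiency argument can be checked with back-of-the-envelope arithmetic. The sketch below assumes the same 5×5 plus dilated 7×7 decomposition and 64 channels (both illustrative choices). It computes the resulting receptive field and compares weight counts for a dense 23×23 convolution, a single depthwise 23×23 kernel, and the decomposed depthwise sequence. The helper names are hypothetical.

```python
def receptive_field(layers):
    """Receptive field of stacked stride-1 convs given (kernel, dilation) pairs."""
    rf = 1
    for k, d in layers:
        rf += (k - 1) * d
    return rf


def dense_conv_params(channels, k):
    """Weights of a standard k x k conv mapping channels -> channels (no bias)."""
    return channels * channels * k * k


def depthwise_params(channels, layers):
    """Weights of a sequence of depthwise convs, one k x k filter per channel (no bias)."""
    return sum(channels * k * k for k, _ in layers)


# Assumed decomposition: 5x5 (dilation 1), then 7x7 (dilation 3).
decomposition = [(5, 1), (7, 3)]
C = 64
rf = receptive_field(decomposition)
print(
    rf,
    dense_conv_params(C, rf),           # one dense 23x23 conv
    depthwise_params(C, [(rf, 1)]),     # one depthwise 23x23 conv
    depthwise_params(C, decomposition), # decomposed depthwise sequence
)
# 23 2166784 33856 4736
```

A dense kernel's weight count grows with both k² and C². Depthwise filtering removes the C² factor, and decomposing with dilation shrinks the per-channel cost from 529 to 74 weights while keeping the same 23-pixel reach.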

The authors describe LSKNet as the first work to explore selective large-kernel mechanisms in remote sensing, a domain where long-range dependencies are not just helpful but often essential.

Proven Performance Across Remote Sensing Benchmarks

LSKNet isn’t just theoretically elegant—it delivers measurable gains across major datasets:

- DOTA-v1.0 (oriented object detection): LSKNet-S achieves 81.64% mAP with Oriented R-CNN, outperforming the prior backbones it was compared against. With EMA fine-tuning, it reaches 81.85%. Its successor, Strip R-CNN (which builds on LSKNet's ideas), pushes this to 82.75% mAP, a new state of the art at the time it was reported.

- FAIR1M-1.0: LSKNet-S attains 47.87% mAP, significantly above the previous best of 45.60%.

- HRSC2016 (ship detection): Achieves 98.46% mAP under the VOC2012 metric, demonstrating robustness on fine-grained maritime targets.

- Semantic segmentation: Extended via LSKNet + GeoSeg, it delivers strong results on Potsdam, Vaihingen, LoveDA, and UAVid datasets.

Crucially, these results are achieved without complex post-processing, ensembling, or architectural overhauls—just by swapping in LSKNet as the backbone.

Practical Integration: Plug-and-Play with MMRotate

LSKNet is built on top of MMRotate, OpenMMLab's widely adopted PyTorch toolbox for oriented object detection. This makes adoption straightforward:

- Pre-trained models: ImageNet-pretrained LSKNet-T (tiny) and LSKNet-S (small) backbones are publicly available.

- Ready-to-use configs: Detection configurations for Oriented R-CNN, RoI Transformer, R3Det, and S2ANet are provided under `configs/lsknet/`.

- Easy setup: Installation via conda and pip, with dependencies managed through `mim` (OpenMMLab’s package manager).

- Tutorials & Colab: Beginner-friendly guides help users fine-tune on custom datasets or reproduce benchmark results.

For teams already using MMRotate (or the closely related MMDetection config system), integrating LSKNet mainly means replacing the `backbone` section of an existing config and matching the neck's input channels, which makes it a low-friction upgrade.
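OpenMMLab configs are Python files built from nested dicts, so the swap can be illustrated with plain dictionaries. In the sketch below, the `embed_dims`/`depths` values for LSKNet-S, the `init_cfg` layout, and the checkpoint path are assumptions (the path is hypothetical). Verify all of them against the files in `configs/lsknet/` before use. The helper `swap_to_lsknet` is likewise hypothetical.

```python
import copy

# Hypothetical ResNet-50 Oriented R-CNN fragment; only the swapped keys are shown.
base_model = dict(
    type='OrientedRCNN',
    backbone=dict(type='ResNet', depth=50, num_stages=4, out_indices=(0, 1, 2, 3)),
    neck=dict(type='FPN', in_channels=[256, 512, 1024, 2048], out_channels=256, num_outs=5),
)

# Assumed LSKNet-S settings; check them against configs/lsknet/.
LSKNET_S_DIMS = (64, 128, 320, 512)
LSKNET_S_DEPTHS = (2, 2, 4, 2)


def swap_to_lsknet(model_cfg, embed_dims=LSKNET_S_DIMS, depths=LSKNET_S_DEPTHS, checkpoint=None):
    """Return a copy of model_cfg with an LSKNet backbone and a matching FPN."""
    cfg = copy.deepcopy(model_cfg)  # leave the original config untouched
    backbone = dict(type='LSKNet', embed_dims=list(embed_dims), depths=list(depths))
    if checkpoint is not None:
        backbone['init_cfg'] = dict(type='Pretrained', checkpoint=checkpoint)
    cfg['backbone'] = backbone
    # The neck must receive LSKNet's per-stage channel widths, not ResNet's.
    cfg['neck']['in_channels'] = list(embed_dims)
    return cfg


lsk_model = swap_to_lsknet(base_model, checkpoint='data/pretrained/lsk_s_backbone.pth')
print(lsk_model['backbone']['type'], lsk_model['neck']['in_channels'])
```

The neck update is the step most easily forgotten: FPN's `in_channels` has to follow whatever channel widths the new backbone emits at each stage.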

Ideal Use Cases

LSKNet excels in scenarios where:

- Accuracy on small or dense objects is critical (e.g., counting vehicles in urban areas, detecting vessels in ports).

- Compute resources are limited, such as on drones, satellites, or field-deployed edge servers.

- Multi-task pipelines are needed—using one backbone for detection, segmentation, and classification simplifies maintenance and reduces redundancy.

- Rapid prototyping is required, thanks to available pre-trained weights and standardized configs.

Important Considerations

While powerful, LSKNet comes with a few constraints:

- License: Released under CC BY-NC 4.0—free for academic and non-commercial use, but commercial deployment requires explicit permission from the authors.

- Ecosystem dependency: Built on PyTorch ≥1.6 and MMRotate, so familiarity with OpenMMLab’s config system is helpful.

- Domain specificity: Optimized for remote sensing; not intended as a drop-in replacement for general vision tasks like ImageNet classification or COCO detection.

Summary

LSKNet represents a paradigm shift in remote sensing backbones: instead of scaling up model size, it scales intelligence—by embedding domain priors directly into the architecture. Its selective large-kernel mechanism dynamically adapts to the spatial context demands of diverse objects, delivering top-tier accuracy with minimal computational overhead.

For project leads, researchers, and engineers working with aerial or satellite imagery, LSKNet offers a compelling combination of performance, efficiency, and ease of integration. Whether you’re building a ship monitoring system, an urban change detection pipeline, or a land cover mapping tool, LSKNet provides a robust, lightweight foundation that respects the unique realities of remote sensing data.

With open-source code, pre-trained models, and seamless compatibility with MMRotate, there’s never been a better time to trial LSKNet in your next remote sensing project.