Autonomous driving systems demand robust, real-time perception across a wide range of environmental conditions, but conventional deep learning models incur high computational cost and energy consumption when processing dense 4D radar data. Enter SpikingRTNH, a breakthrough spiking neural network (SNN) architecture designed specifically for 3D object detection using full 4D radar tensors. SpikingRTNH replaces standard ReLU activations with biologically inspired Leaky Integrate-and-Fire (LIF) neurons and introduces a novel Biological Top-Down Inference (BTI) strategy. Together, these changes cut energy consumption by 78% compared with its conventional ANN counterpart while keeping detection accuracy comparable. That makes it a strong candidate for battery-powered and embedded autonomous systems that must operate reliably in rain, fog, snow, and other adverse weather.

Built as part of the open-source K-Radar project from KAIST’s AVELab, SpikingRTNH is not just a theoretical proposal; it’s a practical, deployable solution backed by the world’s first large-scale public dataset of full-dimensional 4D radar tensors (Range-Azimuth-Elevation-Doppler). Working directly with these tensors enables realistic training and evaluation without the information loss that occurs when radar measurements are reduced to sparse point clouds. For engineers and researchers prioritizing energy efficiency without sacrificing robustness, SpikingRTNH offers a compelling path forward.

Why Energy Efficiency Matters in Radar-Based Perception

4D radar sensors generate dense, high-resolution data across four dimensions. This supports better object shape recognition than conventional radar and more stable performance than LiDAR or cameras in poor visibility. However, this richness comes at a cost: conventional artificial neural networks (ANNs) consume significant power when processing such high-density inputs, especially on embedded platforms with strict thermal and energy budgets.

In real-world autonomous vehicles, particularly electric or robotic platforms, power consumption directly affects range, operational time, and system cost. SpikingRTNH addresses this by leveraging the inherent sparsity and event-driven nature of spiking neural networks. Their neurons communicate through binary spikes and trigger downstream computation only when they fire, loosely mimicking the efficiency of the human brain. This paradigm shift is critical for moving perception workloads from high-power GPUs to low-power neuromorphic or edge AI hardware.
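To make the event-driven idea concrete, here is a minimal sketch in plain Python (not SpikingRTNH code) contrasting a conventional dense layer with a spike-driven one. The dense layer multiplies every input by every weight. The spike-driven layer only adds the weight rows of inputs that actually spiked. The function names and toy weights are hypothetical. For binary spike inputs both layers produce the same output, but the spike-driven one skips silent inputs and never multiplies.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]  # weights[i][j]: contribution of input i to output j


def dense_layer(x: Sequence[float], weights: Matrix) -> List[float]:
    """Conventional ANN layer: every input is multiplied by every weight (MAC ops)."""
    out = [0.0] * len(weights[0])
    for i, xi in enumerate(x):
        for j, w in enumerate(weights[i]):
            out[j] += xi * w  # multiply-accumulate, performed even when xi == 0
    return out


def spike_driven_layer(spikes: Sequence[int], weights: Matrix) -> Tuple[List[float], int]:
    """Event-driven SNN layer: only inputs that spiked contribute, and each
    contribution is a plain addition of that input's weight row (AC ops)."""
    out = [0.0] * len(weights[0])
    additions = 0
    for i, s in enumerate(spikes):
        if not s:
            continue  # a silent input triggers no work at all
        for j, w in enumerate(weights[i]):
            out[j] += w
            additions += 1
    return out, additions


weights = [[0.5, -1.0], [2.0, 0.25], [-0.5, 1.5], [1.0, 1.0]]
spikes = [1, 0, 0, 1]
out, adds = spike_driven_layer(spikes, weights)
print(out, adds)                     # [1.5, 0.0] 4
print(dense_layer(spikes, weights))  # [1.5, 0.0]
```

With two of four inputs active, the spike-driven layer performs 4 additions where the dense layer performs 8 multiply-accumulates. Neuromorphic accelerators exploit the same effect at much larger scale.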

Core Innovations: How SpikingRTNH Delivers Efficiency Without Compromise

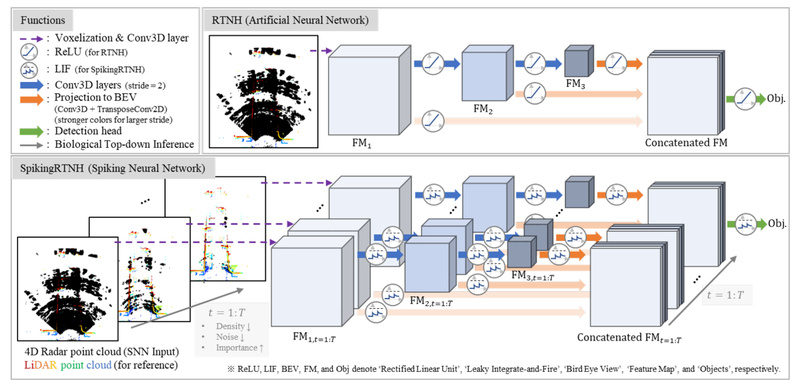

Leaky Integrate-and-Fire (LIF) Neurons Replace ReLU

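Unlike standard deep learning models that use continuous activation functions like ReLU, SpikingRTNH uses LIF spiking neurons. Each neuron integrates its input into a membrane potential that gradually leaks over time. It emits a binary spike only when that potential crosses a threshold. Downstream layers therefore receive sparse binary events rather than dense real-valued activations. As a result, most multiply-accumulate operations reduce to simple additions, and silent neurons trigger no computation at all. The energy savings are largest on hardware accelerators that exploit this sparsity.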
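The LIF dynamics can be sketched in a few lines of discrete-time Python. This is a generic textbook LIF model, not the exact neuron configuration used in SpikingRTNH. The leak factor `beta`, the threshold of 1.0, and the hard reset to zero are illustrative assumptions. SNN frameworks expose several variants, such as soft reset or learnable time constants.

```python
from typing import List, Sequence, Tuple


def lif_neuron(inputs: Sequence[float], beta: float = 0.9,
               threshold: float = 1.0) -> Tuple[List[int], List[float]]:
    """Simulate one discrete-time LIF neuron with hard reset.

    beta (leak factor per step) and threshold are assumed, illustrative values.
    Returns the binary spike train and the membrane potential after each step.
    """
    v = 0.0
    spikes: List[int] = []
    potentials: List[float] = []
    for x in inputs:
        v = beta * v + x      # leak part of the old potential, integrate the new input
        if v >= threshold:
            spikes.append(1)  # emit a binary spike
            v = 0.0           # hard reset after firing
        else:
            spikes.append(0)
        potentials.append(v)
    return spikes, potentials


def relu(inputs: Sequence[float]) -> List[float]:
    """ReLU for comparison: passes every positive value downstream as a real number."""
    return [max(0.0, x) for x in inputs]


inputs = [0.5, 0.5, 0.5, 0.0, 0.0, 1.2]
spikes, _ = lif_neuron(inputs)
print(spikes)        # [0, 0, 1, 0, 0, 1]
print(relu(inputs))  # [0.5, 0.5, 0.5, 0.0, 0.0, 1.2]
```

Fed the same six inputs, the neuron emits two binary spikes while ReLU passes four non-zero real values downstream. Training such neurons end to end typically relies on surrogate gradients, since the spike function itself is not differentiable.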

Biological Top-Down Inference (BTI) for Smarter Point Processing

SpikingRTNH also introduces Biological Top-Down Inference (BTI), a novel processing strategy inspired by human visual cognition. Instead of treating all radar points equally, BTI processes the points extracted from the 4D radar tensor sequentially, from high-density to low-density regions. High-density regions typically correspond to strong, reliable reflections (e.g., from vehicle bodies), while low-density points often represent noise or sidelobes. This ordering therefore lets the network rely on the most informative, least noisy data early in the inference pipeline. The result is improved robustness and more efficient use of computational resources.
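The text describes BTI only as "high density first, low density later", so the following is a hypothetical sketch of that ordering, not the paper's implementation. It scores each point by how many neighbors lie within a radius, which is an assumed density measure. It then splits the points into equal-sized tiers from densest to sparsest and yields a growing set of points, so each later stage adds sparser, typically noisier points. Feeding one stage per SNN time step is one plausible design, but that mapping is also an assumption.

```python
import math
from typing import Iterator, List, Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) of a radar point; hypothetical format


def local_density(points: Sequence[Point], radius: float) -> List[int]:
    """Assumed density measure: number of other points within `radius` (O(N^2))."""
    return [
        sum(1 for j, q in enumerate(points) if j != i and math.dist(p, q) <= radius)
        for i, p in enumerate(points)
    ]


def density_tiers(points: Sequence[Point], radius: float, n_tiers: int) -> List[List[int]]:
    """Split point indices into equal-sized tiers, densest tier first."""
    dens = local_density(points, radius)
    order = sorted(range(len(points)), key=lambda i: dens[i], reverse=True)  # stable sort
    size = max(1, math.ceil(len(order) / n_tiers))
    return [order[k:k + size] for k in range(0, len(order), size)]


def top_down_stages(points: Sequence[Point], radius: float = 1.0,
                    n_tiers: int = 3) -> Iterator[List[int]]:
    """Yield the cumulative set of point indices at each stage: high-density points
    first, then progressively sparser ones."""
    active: List[int] = []
    for tier in density_tiers(points, radius, n_tiers):
        active.extend(tier)
        yield list(active)


points = [
    (0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (0.0, 0.5, 0.0), (0.5, 0.5, 0.0),  # dense cluster
    (10.0, 10.0, 0.0), (-10.0, 5.0, 0.0),                                # isolated returns
]
for stage, active in enumerate(top_down_stages(points)):
    print(stage, active)
```

With a tight four-point cluster and two isolated returns, the isolated points only enter at the final stage, which is the behavior the density ordering is meant to produce.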

Proven Performance on Real-World Data

Evaluated on the K-Radar dataset, which includes 35,000 frames across diverse road types and severe weather conditions, SpikingRTNH achieves 51.1% AP 3D and 57.0% AP BEV. This performance is comparable to that of its ANN counterpart (RTNH), while SpikingRTNH uses 78% less energy. This suggests that SNNs are not just theoretically efficient but practically viable for safety-critical automotive perception tasks.
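The text does not say how the 78% figure was obtained. SNN papers commonly report a theoretical estimate in which each ANN operation is charged as a multiply-accumulate (MAC). Each SNN operation is charged as a cheaper accumulate (AC) that occurs only when a spike arrives. Both use per-operation energies commonly cited for 32-bit floating point in 45 nm CMOS. The sketch below shows that style of estimate. The operation count, firing rate, and time-step count are made-up inputs, so its output should not be read as SpikingRTNH's result.

```python
# Per-operation energies commonly cited for 32-bit floating point in 45 nm CMOS (assumed).
E_MAC_PJ = 4.6  # multiply-accumulate
E_AC_PJ = 0.9   # accumulate (addition only)
PJ_TO_MJ = 1e-9  # 1 pJ = 1e-12 J = 1e-9 mJ


def ann_energy_mj(macs: float) -> float:
    """Every synaptic operation in the ANN is charged as a MAC."""
    return macs * E_MAC_PJ * PJ_TO_MJ


def snn_energy_mj(synaptic_ops: float, firing_rate: float, timesteps: int) -> float:
    """In the SNN, a synaptic operation happens only when the presynaptic neuron
    spikes, and it is an accumulate rather than a MAC."""
    return synaptic_ops * firing_rate * timesteps * E_AC_PJ * PJ_TO_MJ


def energy_reduction(ann_mj: float, snn_mj: float) -> float:
    """Fractional energy saving of the SNN relative to the ANN."""
    return 1.0 - snn_mj / ann_mj


# Hypothetical workload: 1e9 synaptic operations, 20% average firing rate, 1 time step.
ann = ann_energy_mj(1e9)
snn = snn_energy_mj(1e9, firing_rate=0.2, timesteps=1)
print(f"ANN {ann:.2f} mJ, SNN {snn:.2f} mJ, reduction {energy_reduction(ann, snn):.1%}")
```

The result depends heavily on the firing rate and the number of time steps: denser spiking or more time steps erode the advantage. Layers that still receive real-valued inputs, such as an input encoding layer, are usually charged as MACs.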

Ideal Use Cases: Where SpikingRTNH Shines

SpikingRTNH is particularly well-suited for the following scenarios:

- Autonomous vehicles operating in adverse weather: Its reliance on 4D radar, which is largely unaffected by fog, rain, or snow, helps maintain consistent perception when optical sensors degrade.

- Battery-constrained platforms: Electric shuttles, delivery robots, or drones benefit from the dramatic energy reduction, extending operational time.

- Embedded radar perception systems: Developers building custom radar AI for edge devices can pair SpikingRTNH’s spiking design with low-power neuromorphic hardware built to execute SNNs efficiently.

- Research in neuromorphic computing: The project provides a rare real-world benchmark for applying SNNs to complex 3D detection tasks beyond toy datasets.

Note that SpikingRTNH is designed exclusively for 4D radar tensor inputs, not standard radar point clouds. This is a deliberate design choice: by preserving the full Range-Azimuth-Elevation-Doppler (RAED) structure, it avoids the information loss inherent in pre-processed radar representations.
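A toy comparison helps show what that information loss means. In the hypothetical sketch below, a tiny RAED tensor is stored as a dictionary mapping (range, azimuth, elevation, Doppler) bins to received power. Keeping every cell above a threshold preserves all four indices and the power values. A simplified point-cloud-style reduction, by contrast, keeps one detection per spatial cell with only its peak Doppler bin. Real radar point clouds are produced by more elaborate detectors (for example CFAR), so this only illustrates the kind of information that gets discarded.

```python
from typing import Dict, List, Tuple

Index = Tuple[int, int, int, int]  # (range, azimuth, elevation, doppler) bins; hypothetical


def tensor_cells_above(tensor: Dict[Index, float], threshold: float) -> List[Tuple[Index, float]]:
    """Keep every RAED cell above `threshold`, preserving all four indices and power."""
    return sorted((idx, p) for idx, p in tensor.items() if p > threshold)


def point_cloud_reduction(tensor: Dict[Index, float], threshold: float) -> List[Index]:
    """Simplified point-cloud-style reduction: one detection per spatial cell,
    keeping only the peak Doppler bin and discarding power and the rest of the spectrum."""
    best: Dict[Tuple[int, int, int], Tuple[float, int]] = {}
    for (r, a, e, d), p in tensor.items():
        if p <= threshold:
            continue
        prev = best.get((r, a, e))
        if prev is None or p > prev[0]:
            best[(r, a, e)] = (p, d)
    return sorted((r, a, e, d) for (r, a, e), (_, d) in best.items())


tensor = {
    (3, 10, 2, 5): 9.0,  # one target spread over two Doppler bins
    (3, 10, 2, 6): 7.5,
    (8, 4, 1, 0): 6.0,
    (8, 4, 1, 1): 0.2,   # below threshold in both representations
}
cells = tensor_cells_above(tensor, threshold=1.0)
points = point_cloud_reduction(tensor, threshold=1.0)
print(len(cells), len(points))  # 3 2
```

The reduced form loses the second Doppler bin of the first target and all power values, which are exactly the cues a tensor-based detector can still use.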

Getting Started: Integration and Workflow

SpikingRTNH is integrated into the K-Radar open-source repository at https://github.com/kaist-avelab/k-radar, which includes:

- The full K-Radar dataset (15TB, available via server, Google Drive, or shipped HDD)

- Training and inference pipelines for SpikingRTNH and its ANN baseline (RTNH)

- Visualization tools for inspecting 4D radar tensors and detection results

- Sensor calibration utilities and pre-processing modules

To use SpikingRTNH:

- Prepare the K-Radar dataset following the provided Dataset Preparation Guide.

- Run the pre-processing pipeline, which includes two-stage noise filtering to suppress sidelobes—a critical step for high-quality detection.

- Train or evaluate SpikingRTNH using the included PyTorch-based code (Apache 2.0 licensed).

- Visualize results with the GUI tool to compare detections against LiDAR and camera references.
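The pre-processing step in the list above mentions two-stage noise filtering for sidelobe suppression, but the text does not say what the two stages are. Purely as a hypothetical illustration, the sketch below uses two stages. Stage 1 applies a global power percentile. Stage 2 drops a cell when a neighbor at nearby angles in the same range bin is more than 10 dB stronger, since sidelobes appear as weaker copies of a strong return. The cell format, function names, and thresholds are all assumptions; the repository's actual pre-processing may differ.

```python
import math
from typing import List, Sequence, Tuple

Cell = Tuple[int, int, int, float]  # (range_bin, azimuth_bin, elevation_bin, linear power); hypothetical


def stage1_power_percentile(cells: Sequence[Cell], keep_fraction: float) -> List[Cell]:
    """Stage 1 (assumed): keep only the strongest `keep_fraction` of cells."""
    n_keep = max(1, math.ceil(len(cells) * keep_fraction))
    return sorted(cells, key=lambda c: c[3], reverse=True)[:n_keep]


def stage2_sidelobe_suppression(cells: Sequence[Cell], window: int = 1,
                                rel_db: float = -10.0) -> List[Cell]:
    """Stage 2 (assumed): drop a cell when a cell in the same range bin, within
    `window` angular bins, is stronger by more than |rel_db| dB."""
    kept = []
    for r, a, e, p in cells:
        peak = max(
            q for r2, a2, e2, q in cells
            if r2 == r and abs(a2 - a) <= window and abs(e2 - e) <= window
        )  # includes the cell itself, so peak >= p
        if 10.0 * math.log10(p / peak) >= rel_db:
            kept.append((r, a, e, p))
    return kept


cells = [
    (5, 10, 3, 100.0), (5, 11, 3, 5.0), (5, 9, 3, 4.0),  # strong target plus two sidelobes
    (12, 2, 1, 50.0), (12, 3, 1, 40.0),                 # extended target over two azimuth bins
    (20, 0, 0, 1.0), (7, 7, 7, 0.8), (9, 9, 9, 0.5),    # weak returns
]
filtered = stage2_sidelobe_suppression(stage1_power_percentile(cells, keep_fraction=0.75))
print(filtered)
```

The strong return at azimuth bin 10 survives while its weaker neighbors at bins 9 and 11 are removed. The two-bin target at range bin 12 is kept because its cells are within 10 dB of each other. For the actual stages and parameters, consult the K-Radar repository's own pre-processing code.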

The repository also supports auto-labeling, sensor fusion, and radar data synthesis—making it a comprehensive ecosystem for 4D radar AI development.

Limitations and Practical Considerations

While SpikingRTNH offers compelling advantages, adopters should be aware of several constraints:

- Hardware specificity: 4D radar data distributions vary significantly across sensor models due to differences in chirp design, antenna patterns, and signal processing. SpikingRTNH is trained and validated on K-Radar’s specific radar hardware; performance on other 4D radars may require domain adaptation or fine-tuning.

- Specialized input format: The model requires 4D radar tensors, not point clouds. Teams using radar systems that output only pre-processed point clouds cannot directly deploy SpikingRTNH without access to raw tensor data.

- Focus on energy, not raw speed: The primary optimization target is energy efficiency, not inference latency. SNNs can run fast on neuromorphic hardware, but standard GPU inference may show no speed gain. It can even be slower when the network is simulated over multiple time steps.

- Single-sensor design: SpikingRTNH is a radar-only detector. It does not natively support camera or LiDAR fusion, though the broader K-Radar project includes separate modules for multi-sensor integration (e.g., Availability-aware Sensor Fusion).

Summary

SpikingRTNH shows that spiking neural networks can deliver real-world 3D object detection performance on dense 4D radar data in energy-constrained autonomous perception, with a 78% reduction in energy use. Its innovations, LIF neurons and Biological Top-Down Inference, are not just academic curiosities but practical tools for building robust, efficient, all-weather perception systems. For teams working on autonomous driving, robotics, or embedded AI where power is at a premium, SpikingRTNH offers a rare combination of scientific novelty, open accessibility, and real-world applicability. With the code released under the Apache 2.0 license and the dataset publicly available, it lowers the barrier to entry for researchers and engineers ready to explore the next generation of bio-inspired, radar-centric perception.