Animating a static portrait—whether a photo of a person or a pet—into a lifelike, expressive video has long been a challenging problem in computer vision and generative AI. Most state-of-the-art approaches rely on diffusion models, which, while powerful, are computationally heavy and often too slow for real-world applications like social media filters, interactive avatars, or video editing tools.

LivePortrait changes this narrative. Developed by researchers at Kuaishou Technology and academic collaborators, LivePortrait is an efficient, non-diffusion-based framework that delivers high-quality portrait animation by leveraging an implicit keypoint representation combined with novel stitching and retargeting controls. It strikes a rare balance: fast enough for real-time use (just 12.8ms per frame on an RTX 4090), controllable enough for fine-grained editing, and generalizable across diverse inputs—including humans, cats, and dogs.

Already adopted by major platforms like Kuaishou, Douyin, Jianying, and WeChat Channels, LivePortrait is not just a research prototype—it’s a production-ready tool engineered for creators, developers, and product teams who need speed, reliability, and creative control.

Why LivePortrait Stands Out

Speed Without Sacrificing Quality

Unlike diffusion-based methods that require many iterative denoising steps to generate each frame, LivePortrait produces each frame in a single forward pass. This architectural choice enables real-time performance without compromising visual fidelity. On an RTX 4090, it processes frames at over 75 FPS, making it suitable for interactive applications where latency matters.
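The FPS figure follows directly from the per-frame latency. The helper below (a hypothetical name, not part of LivePortrait) converts a latency into throughput and reports how much of a real-time frame budget is left over. It assumes the 12.8ms figure is the entire per-frame cost. A real pipeline also spends time on decoding, cropping and pasting back, so treat the result as an upper bound.

```python
def frame_budget(latency_ms: float, target_fps: float) -> tuple[float, float]:
    """Return (max_fps, headroom_ms) for a model with the given per-frame latency.

    headroom_ms is the time left in each frame slot at target_fps; a negative
    value means the model cannot keep up with that frame rate.
    """
    if latency_ms <= 0 or target_fps <= 0:
        raise ValueError("latency_ms and target_fps must be positive")
    max_fps = 1000.0 / latency_ms        # frames per second from ms per frame
    budget_ms = 1000.0 / target_fps      # time available per frame at the target rate
    return max_fps, budget_ms - latency_ms


if __name__ == "__main__":
    # 12.8 ms per frame is the RTX 4090 figure quoted for LivePortrait.
    fps, headroom = frame_budget(12.8, target_fps=30)
    print(f"max throughput: {fps:.1f} FPS")
    print(f"headroom at 30 FPS: {headroom:.1f} ms per frame")
```

At 12.8ms per frame this gives about 78 FPS, with roughly 20ms per frame to spare at a 30 FPS camera rate. That spare time is what pre- and post-processing would have to fit into.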

Precise Motion Control Through Stitching and Retargeting

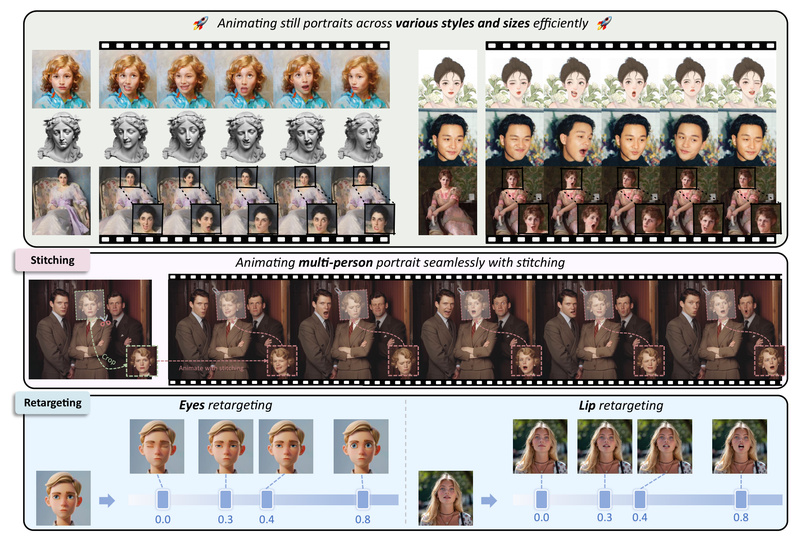

One of LivePortrait’s core innovations is its use of compact implicit keypoints that act like blendshapes—mathematical representations of facial configurations. Built on this foundation, the framework introduces two lightweight modules:

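- Stitching: Pastes the animated face crop back into the original image space without visible seams or misalignment (for example, around the shoulders), which makes it possible to animate full-size images rather than only tight face crops.

- Retargeting: Dedicated eyes and lip retargeting modules control how far the eyes and mouth open, independently of the driving motion, so users can, for example, close the eyes or keep the lips still while the rest of the face follows the driving video.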

These modules are implemented via small MLPs with negligible computational overhead, meaning you gain fine-grained control without slowing down inference.
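To make the keypoint idea concrete, here is a minimal NumPy sketch. It roughly follows the formulation the LivePortrait paper adopts from face vid2vid, as we understand it. In that formulation, a frame's keypoints are x = s · (x_c R + δ) + t, where x_c are canonical keypoints, R is the head rotation, δ are expression offsets, s is a scale and t is a translation. Stitching then adds a learned offset to the driving keypoints. Several pieces are illustrative assumptions and not the project's code: the keypoint count K = 21, the Euler-angle convention, the function names, and the randomly initialised two-layer MLP that stands in for the stitching module.

```python
import numpy as np


def rotation_matrix(yaw: float, pitch: float, roll: float) -> np.ndarray:
    """3x3 rotation from Euler angles in radians (roll @ yaw @ pitch, an assumed convention)."""
    cy, sy = np.cos(yaw), np.sin(yaw)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cr, sr = np.cos(roll), np.sin(roll)
    r_yaw = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])      # about the Y axis
    r_pitch = np.array([[1, 0, 0], [0, cp, -sp], [0, sp, cp]])    # about the X axis
    r_roll = np.array([[cr, -sr, 0], [sr, cr, 0], [0, 0, 1]])     # about the Z axis
    return r_roll @ r_yaw @ r_pitch


def transform_keypoints(x_c, R, delta, s, t):
    """x = s * (x_c @ R + delta) + t for K keypoints stored as rows of a (K, 3) array."""
    return s * (x_c @ R + delta) + t


def stitching_offset(x_s, x_d, w1, b1, w2, b2):
    """Toy 2-layer MLP: flattened source + driving keypoints -> (K, 3) offset."""
    h = np.concatenate([x_s.ravel(), x_d.ravel()])
    h = np.maximum(h @ w1 + b1, 0.0)      # ReLU hidden layer
    return (h @ w2 + b2).reshape(x_d.shape)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    K = 21                                # assumed keypoint count
    x_c = rng.normal(size=(K, 3))         # canonical keypoints of the source face
    # Source and driving frames share x_c but differ in pose and expression.
    x_s = transform_keypoints(x_c, rotation_matrix(0.0, 0.0, 0.0),
                              0.01 * rng.normal(size=(K, 3)), 1.0, np.zeros(3))
    x_d = transform_keypoints(x_c, rotation_matrix(0.3, -0.1, 0.05),
                              0.05 * rng.normal(size=(K, 3)), 1.0, np.array([0.02, 0.0, 0.0]))

    hidden = 128                          # assumed hidden width
    w1 = 0.01 * rng.normal(size=(2 * K * 3, hidden))
    b1 = np.zeros(hidden)
    w2 = 0.01 * rng.normal(size=(hidden, K * 3))
    b2 = np.zeros(K * 3)

    # Stitching is an additive correction on the driving keypoints.
    x_d_stitched = x_d + stitching_offset(x_s, x_d, w1, b1, w2, b2)
    n_params = w1.size + b1.size + w2.size + b2.size
    print(x_d_stitched.shape, n_params)
```

With these toy sizes the MLP has about 24k parameters and operates on only 63 numbers per keypoint set, which illustrates why such modules add negligible cost next to the image generator. In the paper, the eyes and lip retargeting modules are likewise small MLPs whose outputs are added to the driving keypoints.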

Trained on Massive, Real-World Data

LivePortrait was trained on approximately 69 million high-quality video frames using a mixed image-video strategy. This large-scale, diverse dataset contributes to its strong generalization across varied lighting, poses, and ethnicities, without requiring per-subject fine-tuning.

Beyond Humans: Unified Animation for Pets

While many portrait animation tools focus solely on human faces, LivePortrait extends support to cats and dogs through a specialized animal model. This expands its utility for pet content creators, veterinary telepresence, or entertainment apps—though note that animal mode currently requires Linux or Windows with an NVIDIA GPU.
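Because animal mode depends on NVIDIA-only components, a launcher script may want to check the platform before offering it. The sketch below is a hypothetical pre-flight check and is not part of LivePortrait. It encodes only the rule stated above: Linux or Windows plus an NVIDIA GPU. For the GPU test it uses PyTorch's `torch.cuda.is_available()` when PyTorch is installed. That is an approximation, since ROCm builds of PyTorch also report CUDA as available on AMD hardware.

```python
import importlib.util
import platform


def animal_mode_supported(system: str, has_nvidia_gpu: bool) -> bool:
    """Apply the stated rule: animal mode needs Linux or Windows plus an NVIDIA GPU."""
    return system in ("Linux", "Windows") and has_nvidia_gpu


def detect_nvidia_gpu() -> bool:
    """Best-effort check that treats CUDA availability in PyTorch as an NVIDIA GPU."""
    if importlib.util.find_spec("torch") is None:
        return False                      # PyTorch not installed, so we cannot check
    import torch
    return bool(torch.cuda.is_available())


if __name__ == "__main__":
    system = platform.system()            # e.g. "Linux", "Windows", "Darwin" (macOS)
    ok = animal_mode_supported(system, detect_nvidia_gpu())
    status = "available" if ok else "unavailable (human mode only)"
    print(f"{system}: animal mode {status}")
```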

Practical Use Cases

LivePortrait shines in scenarios where speed, privacy, and user control intersect:

- Social Media Effects: Power real-time filters that animate user-uploaded photos using live camera feeds or pre-recorded motions.

- Video Editing Tools: Enable “video-to-video” editing, where a source video’s appearance is preserved while adopting motion from another clip—ideal for dubbing, parody, or accessibility.

- Privacy-Preserving Workflows: Instead of uploading raw driving videos, users can export motion templates (`.pkl` files) that encode only pose and expression data. These templates protect identity while enabling reuse across different source portraits.

- Interactive Avatars: Integrate with voice or text inputs (via external drivers) to create talking-head avatars for virtual assistants, gaming, or customer service.
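The privacy argument rests on what a motion template contains: per-frame motion parameters rather than pixels. The sketch below builds a template-like dictionary, pickles it and reads it back. The keys (`n_frames`, `fps`, `motion`, `R`, `exp`, `t`, `scale`) and the array shapes are illustrative assumptions, not the exact schema of LivePortrait's `.pkl` files. Inspect a real template before relying on any field name.

```python
import pickle

import numpy as np


def make_motion_template(n_frames: int, n_kp: int = 21, fps: float = 25.0) -> dict:
    """Build a hypothetical motion template: only pose/expression numbers, no pixels."""
    rng = np.random.default_rng(0)
    motion = [
        {
            "R": np.eye(3, dtype=np.float32),                           # head rotation
            "exp": rng.normal(0, 0.01, (n_kp, 3)).astype(np.float32),  # expression offsets
            "t": np.zeros(3, dtype=np.float32),                         # translation
            "scale": np.float32(1.0),                                   # head scale
        }
        for _ in range(n_frames)
    ]
    return {"n_frames": n_frames, "fps": fps, "motion": motion}


def template_nbytes(template: dict) -> int:
    """Total size in bytes of the numeric data stored per frame in the template."""
    return sum(np.asarray(v).nbytes for frame in template["motion"] for v in frame.values())


if __name__ == "__main__":
    tpl = make_motion_template(n_frames=100)
    restored = pickle.loads(pickle.dumps(tpl))
    print(restored["n_frames"], "frames,", template_nbytes(restored), "bytes of motion data")
    # For comparison, a single 512x512 RGB frame is 512 * 512 * 3 = 786,432 bytes.
```

Under these assumptions, 100 frames of motion take about 30 KB, far less than even one image frame. Keep in mind that unpickling data from an untrusted source can execute arbitrary code, so only load templates you or a trusted party created.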

The system supports both image-to-video (animate a photo) and video-to-video (re-animate one video using another’s motion) modes, offering flexibility for different creative pipelines.

Getting Started Is Easy

Despite its advanced capabilities, LivePortrait is designed for accessibility:

- Clone the repository and set up a Python 3.10 environment using Conda.

- Install dependencies. OS-specific instructions are provided for Linux, Windows, and macOS (including Apple Silicon support for human animation).

- Download pretrained weights from Hugging Face with a single command.

- Run inference with a simple CLI command, where `-s` is the source portrait and `-d` is the driving video:

```bash
python inference.py -s source.jpg -d driving.mp4
```

Or launch the Gradio-based web interface for point-and-click control, including pose sliders, regional editing, and auto-cropping options.
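For batch jobs, the same command can be driven from a script. The sketch below builds one `inference.py` call per source portrait. It uses only the `-s`, `-d` and `--flag_crop_driving_video` options mentioned in this article. The helper names and the script name `batch_animate.py` are hypothetical. The script assumes it is run from the repository root inside the configured Conda environment, and it uses `sys.executable` so the same interpreter is reused.

```python
import subprocess
import sys
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def build_inference_cmd(source: Path, driving: Path, crop_driving: bool = False) -> list[str]:
    """Assemble an inference.py call for one source/driving pair."""
    cmd = [sys.executable, "inference.py", "-s", str(source), "-d", str(driving)]
    if crop_driving:
        cmd.append("--flag_crop_driving_video")   # auto-crop a non-square driving video
    return cmd


def animate_folder(source_dir: Path, driving: Path, crop_driving: bool = False) -> None:
    """Animate every image in source_dir with the same driving clip, one process per image."""
    sources = sorted(p for p in source_dir.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES)
    for src in sources:
        cmd = build_inference_cmd(src, driving, crop_driving)
        print("running:", " ".join(cmd))
        subprocess.run(cmd, check=True)           # raise on the first failing run


if __name__ == "__main__":
    if len(sys.argv) < 3:
        print("usage: python batch_animate.py SOURCE_DIR DRIVING_VIDEO")
    else:
        animate_folder(Path(sys.argv[1]), Path(sys.argv[2]), crop_driving=True)
```

Launching one process per image keeps the sketch simple but reloads the models every time. For large batches, the Gradio app or a long-lived process avoids that overhead.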

For Windows users, one-click installers remove most of the setup friction. Mac users with Apple Silicon can also run the human model, though inference is considerably slower than on an NVIDIA GPU.

Advanced users can further accelerate inference using torch.compile (Linux-only). After a one-time optimization pass on the first run, subsequent inference is reported to be 20 to 30% faster, although gains may vary with the setup.
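Whether the one-time optimization pays off depends on how many frames a process renders afterwards. The arithmetic below is a back-of-the-envelope sketch built on three assumptions: the 20 to 30% figure is treated as a throughput gain, the 12.8ms RTX 4090 latency is the baseline, and compilation takes one minute. Substitute your own measurements for all three.

```python
def compile_break_even_frames(base_ms: float, speedup: float, compile_s: float) -> float:
    """Frames needed before a one-time compile cost is recovered.

    speedup is a throughput gain, e.g. 0.25 means 25% more frames per second,
    so per-frame latency drops from base_ms to base_ms / (1 + speedup).
    """
    if base_ms <= 0 or speedup <= 0:
        raise ValueError("base_ms and speedup must be positive")
    compiled_ms = base_ms / (1.0 + speedup)
    saved_ms_per_frame = base_ms - compiled_ms
    return compile_s * 1000.0 / saved_ms_per_frame


if __name__ == "__main__":
    for gain in (0.20, 0.30):
        frames = compile_break_even_frames(base_ms=12.8, speedup=gain, compile_s=60.0)
        minutes = frames / 30 / 60        # length of 30 FPS output needed to break even
        print(f"{gain:.0%} faster: break even after ~{frames:,.0f} frames (~{minutes:.1f} min at 30 FPS)")
```

Under these assumptions, compilation pays for itself after roughly 20,000 to 28,000 frames, which is about 11 to 16 minutes of 30 FPS output. It therefore mainly benefits long-running processes, such as a server or a live filter, rather than one-off clips.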

Limitations and Best Practices

To achieve optimal results, keep the following in mind:

- Driving Video Requirements: For best quality, the first frame of the driving video should show a frontal face with a neutral expression. The subject’s head should dominate the frame, ideally cropped to a 1:1 aspect ratio. If it is not, enable `--flag_crop_driving_video` to auto-crop, though manual adjustment may yield better alignment.

- Hardware Constraints: Animal animation only works on Linux or Windows machines with an NVIDIA GPU, because it depends on components such as X-Pose. macOS users cannot run animal mode.

- Ethical Use: As with all portrait animation technologies, LivePortrait carries deepfake risks. The developers note that current outputs still contain visual artifacts that may help in detecting synthesized videos, and users are urged to follow ethical guidelines, especially by avoiding non-consensual or deceptive applications.
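If you prefer to crop the driving video yourself instead of relying on auto-cropping, the geometry is simple. Take a square around the head, add a margin and clamp it to the frame. The sketch below is a hypothetical helper that assumes you already have a face bounding box from any face detector. It returns an FFmpeg filter string using the standard `crop=w:h:x:y` and `scale` filters. The 1.6 margin factor and the 512 output size are arbitrary assumptions, not LivePortrait settings.

```python
def square_head_crop(frame_w: int, frame_h: int, box: tuple[int, int, int, int],
                     margin: float = 1.6) -> tuple[int, int, int]:
    """Return (x, y, side) of a square crop centred on box=(x0, y0, x1, y1).

    The side is the larger box dimension times margin, capped at the frame's
    shorter edge, and the square is shifted so it stays inside the frame.
    """
    x0, y0, x1, y1 = box
    cx, cy = (x0 + x1) / 2, (y0 + y1) / 2
    side = int(min(max(x1 - x0, y1 - y0) * margin, frame_w, frame_h))
    x = round(cx - side / 2)
    y = round(cy - side / 2)
    x = max(0, min(x, frame_w - side))   # keep the square inside the frame horizontally
    y = max(0, min(y, frame_h - side))   # and vertically
    return x, y, side


def ffmpeg_crop_filter(x: int, y: int, side: int, out_size: int = 512) -> str:
    """FFmpeg filter chain: square crop, then resize to out_size x out_size."""
    return f"crop={side}:{side}:{x}:{y},scale={out_size}:{out_size}"


if __name__ == "__main__":
    # A 1920x1080 clip whose face was detected at (860, 300)-(1100, 600).
    x, y, side = square_head_crop(1920, 1080, (860, 300, 1100, 600))
    print(ffmpeg_crop_filter(x, y, side))
```

For the example box this yields `crop=480:480:740:210,scale=512:512`, which can be applied with `ffmpeg -i driving.mp4 -vf "crop=480:480:740:210,scale=512:512" driving_square.mp4`. A single fixed crop assumes the head stays roughly in place throughout the clip, which matches the guidance above.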

Summary

LivePortrait redefines what’s possible in real-time portrait animation. By moving away from diffusion models and embracing a keypoint-driven architecture enhanced with stitching and retargeting controls, it delivers real-time speed, fine-grained controllability, and strong generalization. Whether you’re building a social app, a creative tool, or a research prototype, LivePortrait offers a robust, well-documented, and actively maintained solution that’s already proven in production at scale.

With open-source code, pretrained models, cross-platform support, and a vibrant community ecosystem—including integrations with ComfyUI, FaceFusion, and Stable Diffusion WebUI—it’s never been easier to bring lifelike animation to your projects.