In the rapidly evolving landscape of large language models (LLMs), achieving strong reasoning capabilities often comes at the cost of massive datasets, complex training pipelines, or proprietary techniques. OpenAI’s o1 model demonstrated that allocating extra compute during inference—a strategy known as test-time scaling—can significantly enhance reasoning performance. However, its methodology remains undisclosed, leaving researchers and practitioners searching for accessible alternatives.

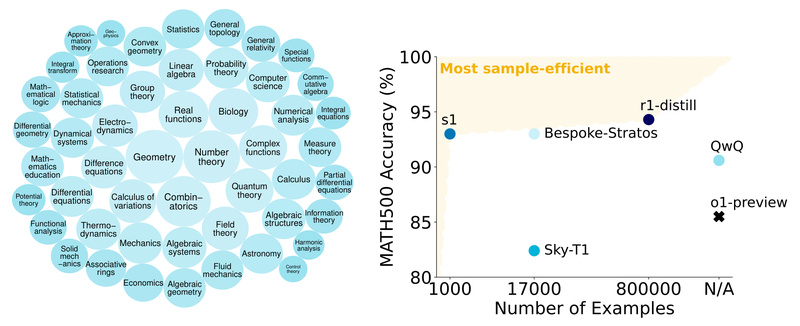

Enter S1: a minimalist, open-source approach that delivers o1-preview-level reasoning using only 1,000 carefully curated examples and a clever inference-time control mechanism called budget forcing. Built on top of Qwen2.5-32B-Instruct, S1 not only matches but exceeds o1-preview on competition math benchmarks like MATH and AIME24—by up to 27%. Crucially, everything is open: model weights, training data, code, and evaluation scripts. For teams seeking transparent, reproducible, and plug-and-play reasoning upgrades without hidden tricks or massive infrastructure, S1 offers a compelling solution.

How S1 Works: Simplicity Meets Strategic Inference

The s1K Dataset: Quality Over Quantity

S1’s foundation is the s1K dataset—a compact collection of 1,000 questions paired with high-quality reasoning traces. These traces aren’t randomly gathered; they’re selected based on three rigorously validated criteria: difficulty, diversity, and reasoning quality. Ablation studies in the paper confirm that each criterion contributes meaningfully to final performance. This focus on signal-rich examples enables strong generalization from minimal supervision—a stark contrast to conventional fine-tuning that demands tens or hundreds of thousands of samples.

Budget Forcing: Intelligent Control at Inference Time

The real innovation of S1 lies in budget forcing, a test-time technique that dynamically regulates how long the model “thinks” before finalizing its answer.

Here’s how it works:

- During generation, the model may prematurely signal completion (e.g., by emitting an end token).

- Budget forcing intercepts this signal and appends the word “Wait” to the output, effectively prompting the model to reconsider.

- This can be repeated multiple times, extending the reasoning trace within a predefined token budget.

- Often, this extra “thinking time” allows the model to catch and correct earlier errors—leading to substantially improved accuracy, especially on complex multi-step problems.

Importantly, budget forcing doesn’t require retraining. It’s applied purely during inference, making it a flexible, low-overhead enhancement that can be toggled on or off based on available compute or latency constraints.

Why S1 Stands Out

1. Exceptional Performance with Minimal Resources

S1-32B achieves 57% accuracy on AIME24—a notoriously difficult high school math competition—surpassing o1-preview. Even more impressively, this gain is unlocked not through larger models or more training data, but through smarter use of existing capacity at test time. When scaled with budget forcing, performance consistently improves beyond the baseline, demonstrating reliable extrapolation.

2. Full Openness and Reproducibility

Unlike closed models that hint at capabilities without sharing methods, S1 provides:

- The fine-tuned model (

simplescaling/s1-32Band improveds1.1-32B) - The s1K and s1K-1.1 datasets

- Training, inference, and evaluation scripts

- Detailed documentation for vLLM and Hugging Face Transformers

This transparency empowers researchers to validate claims, reproduce results, and build upon the work—accelerating collective progress in reasoning-focused LLM development.

3. Practical and Lightweight Integration

Integrating S1 into existing workflows is straightforward. Whether you’re using vLLM for high-throughput serving or Transformers for experimentation, the model loads like any standard LLM. Activating budget forcing requires only a few lines of code that manage token budgets and inject “Wait” tokens—no architectural changes needed.

Ideal Use Cases

S1 excels in scenarios demanding structured, multi-step reasoning, such as:

- Mathematical problem solving (e.g., competition math, symbolic reasoning)

- Algorithmic code generation requiring correctness guarantees

- Scientific question answering where intermediate justification matters

- Educational tools that benefit from verifiable reasoning traces

It’s particularly valuable for teams that:

- Operate under fixed model budgets but can afford modest inference-time overhead

- Need o1-like reasoning without access to proprietary systems

- Prioritize auditability and want to understand how answers are derived

Getting Started

To run S1 with Transformers:

from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("simplescaling/s1.1-32B", device_map="auto")

tokenizer = AutoTokenizer.from_pretrained("simplescaling/s1.1-32B")

# Use standard chat template + generate

For vLLM with budget forcing, you:

- Set a max token limit for the “thinking” phase (e.g., 32,000 tokens)

- Generate the initial reasoning trace

- Append “Wait” and regenerate if the model ends early

- Finalize the answer after the desired number of self-check iterations

The repository includes ready-to-run scripts for training, evaluation (via a modified lm-evaluation-harness), and data recreation—making experimentation accessible even to those new to test-time scaling.

Limitations and Considerations

While powerful, S1 has important constraints:

- It’s built specifically on Qwen2.5-32B-Instruct; the approach isn’t yet generalized to arbitrary base models.

- In high-temperature settings, vLLM may encounter out-of-vocabulary token errors due to extended generations—though a simple patch can mitigate this.

- Budget forcing requires careful token budget management to avoid hitting context limits or wasting compute.

- Performance gains are most pronounced on structured reasoning tasks; benefits on open-ended or creative tasks may be less consistent.

Summary

S1 proves that breakthrough reasoning performance doesn’t require secrecy or scale. By combining a tiny, high-signal dataset with intelligent test-time control, it delivers o1-preview-beating results in an open, reproducible, and easy-to-adopt package. For researchers, engineers, and product teams seeking reliable, verifiable, and high-performance reasoning—without the black box—S1 is a compelling and practical choice.