If you’re working on machine learning models that aim to emulate or accelerate physics-based simulations—whether in fluid dynamics, astrophysics, or biological systems—you’ve likely hit a major roadblock: the lack of large-scale, diverse, and standardized simulation datasets. Enter The Well, a groundbreaking open-source initiative that delivers exactly what the scientific ML community needs: 15 terabytes of high-quality numerical simulation data spanning 16 distinct physical domains, all wrapped in a clean, PyTorch-native interface.

Developed by Polymathic AI in collaboration with researchers from institutions like the Flatiron Institute, NYU, Princeton, and UC Berkeley, The Well isn’t just another dataset dump. It’s a curated benchmarking ecosystem designed to help researchers and engineers evaluate, compare, and improve surrogate models for complex spatiotemporal systems—without spending months generating their own simulation data.

Why Standard Physics Datasets Fall Short

Traditionally, ML research in scientific computing has relied on small, narrowly scoped datasets—often limited to one or two types of partial differential equations (PDEs), like the classic Navier-Stokes or heat equation. While useful for prototyping, these datasets don’t reflect the messy, varied realities of real-world physical systems.

This creates three critical problems:

- Limited generalizability: Models trained on a single simulation type rarely generalize to others.

- Unfair comparisons: Without a common benchmark, it’s hard to tell if a new model truly outperforms prior work or just overfits to a specific dataset.

- High entry barriers: Generating high-fidelity simulations requires domain expertise, HPC access, and significant computational time—resources many ML practitioners simply don’t have.

The Well directly addresses all three.

What Makes The Well Unique

1. Unprecedented Diversity in Physical Systems

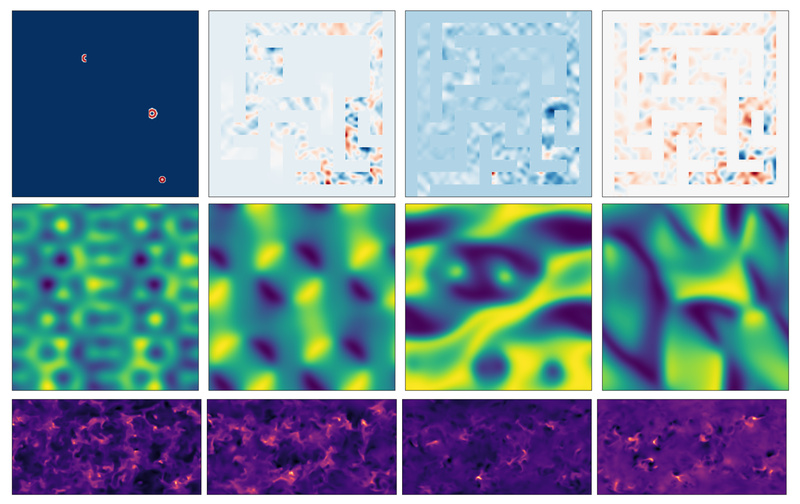

The Well brings together simulations from wildly different domains:

- Biological systems (e.g., active matter)

- Fluid dynamics (turbulent flows, multi-phase systems)

- Acoustic scattering

- Astrophysical phenomena (magneto-hydrodynamic simulations of supernova explosions and extra-galactic fluids)

This diversity ensures models are stress-tested on a wide range of temporal evolutions, spatial scales, and governing equations—not just variations of the same problem.

2. Plug-and-Play PyTorch Integration

You don’t need to write custom data loaders or parsers. The Well provides a unified WellDataset class that works seamlessly with torch.utils.data.DataLoader. Whether you’re downloading data locally or streaming from Hugging Face, the interface stays consistent:

from the_well.data import WellDataset from torch.utils.data import DataLoader trainset = WellDataset(well_base_path="path/to/data",well_dataset_name="active_matter",well_split_name="train" ) train_loader = DataLoader(trainset)

This reduces boilerplate and accelerates experimentation.

3. Built-in Benchmarking Infrastructure

The repository includes reference implementations of baseline models (e.g., Fourier Neural Operators) and a Hydra-powered training pipeline. You can launch a full benchmark run with a single command:

cd the_well/benchmark python train.py experiment=fno server=local data=active_matter

Pretrained checkpoints are even available on Hugging Face for quick evaluation—ideal for comparing your new architecture against established baselines.

4. Flexible Data Access

With datasets ranging from 6.9 GB to over 5 TB, The Well offers two access modes:

- Local download via the

the-well-downloadCLI for high-throughput training - Streaming from Hugging Face for quick prototyping without massive storage commitments

Who Should Use The Well?

The Well is ideal for:

- ML researchers developing next-generation surrogate models for PDEs

- Computational scientists looking to replace slow numerical solvers with fast neural approximators

- Engineers in climate modeling, aerospace, or biomedical simulation who need robust, data-driven emulators

- Educators and students teaching or learning scientific machine learning with real-world data

If your work involves learning the dynamics of physical systems over space and time, The Well provides the data foundation you’ve been missing.

How The Well Solves Practical Pain Points

Eliminates the “Simulation Bottleneck”

Instead of waiting weeks to generate training data, you can start training within hours of installation. The simulations were produced by domain experts using validated numerical solvers—so you inherit their rigor without the overhead.

Enables Fair, Cross-Domain Model Evaluation

By offering 16 standardized datasets, The Well lets you test whether your model truly understands physics—or just memorizes one problem type. This is critical for publishing credible, reproducible results.

Lowers the Floor for Scientific ML

You no longer need a PhD in computational fluid dynamics to work on fluid ML. The Well abstracts away domain-specific complexities while preserving physical fidelity.

Getting Started Is Straightforward

-

Install the package:

pip install the_well # or from source for development git clone https://github.com/PolymathicAI/the_well && pip install .

-

Download or stream data:

the-well-download --base-path ./data --dataset active_matter --split train

-

Plug into your training loop: Use the

WellDatasetclass as shown above.

For benchmarking, install extra dependencies:

pip install the_well[benchmark]

Important Limitations to Consider

- Storage demands: The full collection is 15 TB. Plan accordingly—most users will work with 1–2 datasets.

- Compute requirements: Training on high-resolution spatiotemporal data requires GPUs with ample memory.

- Baselines are not SOTA: The provided models are meant as starting points, not performance ceilings. The real value lies in pushing beyond them.

Should You Use The Well in Your Project?

Ask yourself:

- Are you modeling spatiotemporal physical systems?

- Do you need realistic, diverse simulation data—not synthetic toy problems?

- Are you tired of incomparable results across fragmented datasets?

- Do you want to accelerate scientific workflows with ML surrogates?

If you answered “yes” to most of these, The Well is likely a strong fit.

Summary

The Well fills a critical gap in scientific machine learning by providing a large-scale, diverse, and standardized collection of physics simulation datasets—complete with tooling for training, evaluation, and benchmarking. It lowers barriers to entry, promotes reproducibility, and challenges researchers to build models that generalize across physical domains. For anyone serious about surrogate modeling in computational science, The Well isn’t just useful—it’s essential.