In today’s AI landscape, developers and researchers often juggle separate models for vision, language, and video—each with its own architecture, training pipeline, and inference quirks. Enter Show-o, a breakthrough unified multimodal foundation that collapses this fragmentation into a single Transformer. Show-o doesn’t just understand images or generate text—it does both, along with creating and interpreting videos, all within one native architecture. Its successor, Show-o2, refines this vision with improved scalability, dual-path fusion, and native support for flow matching alongside autoregressive language modeling.

For practitioners tired of stitching together disjointed systems, Show-o offers a compelling alternative: one model, multiple modalities, zero compromises on core capabilities. Whether you’re building an AI assistant that answers questions about live video feeds or a creative tool that generates high-resolution images from text prompts, Show-o simplifies your stack without sacrificing performance.

Core Innovations That Unify Multimodal AI

Native Support for Text, Images, and Videos in One Architecture

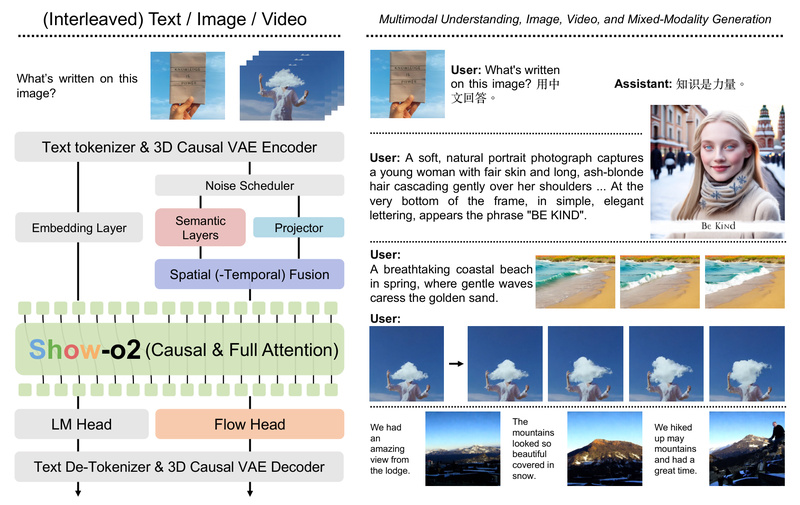

Unlike traditional pipelines that treat vision and language as separate domains, Show-o operates in a 3D causal variational autoencoder (VAE) space where all modalities—text tokens, image patches, and video frames—are represented uniformly. This design allows the same Transformer backbone to process and generate across modalities without task-specific heads or adapters.

Show-o2 extends this to video by introducing temporal awareness into the VAE space, enabling coherent video understanding and generation without retraining separate components.

Dual-Path Spatial-Temporal Fusion

A key technical insight in Show-o2 is the dual-path fusion mechanism. One path handles spatial dependencies critical for image understanding and generation, while the other incorporates temporal dynamics for video. This separation ensures that each modality’s unique structural priors are respected, yet both are trained jointly within the same model—enabling true scalability from static images to dynamic video sequences.

Autoregressive Language + Flow Matching for Vision

Show-o combines two generative paradigms natively:

- Autoregressive modeling for text: standard causal attention predicts the next token, ideal for language understanding and generation.

- Flow matching for images and videos: a continuous generative approach that offers high-fidelity, stable visual synthesis compared to discrete diffusion or GANs.

This hybrid strategy allows Show-o to excel at both discrete symbolic reasoning (e.g., VQA) and continuous perceptual generation (e.g., 512×512 image synthesis).

Ready-to-Use Models at Practical Scales

The team released both 1.5B and 7B parameter variants, with and without high-resolution (HQ) enhancements for better text rendering in generated images. These models are available on Hugging Face under showlab/show-o2-1.5B, showlab/show-o2-7B, and specialized versions fine-tuned for video understanding—making deployment feasible even for teams without massive compute budgets.

Real-World Use Cases Where Show-o Shines

All-in-One AI Assistants

Imagine an assistant that can:

- Answer “What’s happening in this video?”

- Generate a new scene based on a written description

- Modify part of an image (e.g., replace a car with a bicycle) using text-guided inpainting

Show-o supports all these tasks out of the box via a single inference engine—no switching between models or APIs.

Creative Content Generation

With scripts like inference_t2i.py, you can generate, inpaint, or extrapolate images from text. For example:

- Text-to-image: “A futuristic city at sunset with flying cars” → 512×512 image

- Inpainting: Replace masked regions in an image using natural language

- Extrapolation: Extend an image beyond its original boundaries (e.g., widen a landscape)

These capabilities are built natively, eliminating the need for external diffusion pipelines.

Unified Multimodal Research Platforms

For researchers, Show-o provides a clean testbed to study cross-modal alignment, reasoning, and generation under one framework. Its acceptance at NeurIPS 2025 and strong results on benchmarks like OneIG-Bench validate its scientific rigor.

Getting Started Is Straightforward

The project maintains full transparency with public code for both training and inference. To run your first demo:

- Install dependencies:

pip3 install -r requirements.txt

- Run multimodal understanding (e.g., image captioning):

python3 inference_mmu.py config=configs/showo_demo_512x512.yaml mmu_image_root=./mmu_validation question='Please describe this image in detail.'

- Generate an image from text:

python3 inference_t2i.py config=configs/showo_demo_512x512.yaml mode='t2i' validation_prompts_file=prompts.txt

Pre-trained checkpoints are available on Hugging Face, and the codebase includes configurations for both CLIP-ViT and discrete tokenizer variants.

Practical Considerations and Current Limits

While Show-o is powerful, it’s not without trade-offs:

- Training is complex: The two-stage (or three-stage) pipeline requires careful data path setup,

accelerateconfiguration, and checkpoint management. Stage 1 uses ImageNet, Stage 2 uses web-scale image-text data—manual adjustments are needed for custom datasets. - Video support is evolving: Though Show-o2 adds video understanding, generation capabilities for long-form video are still maturing.

- Mixed-modality generation (e.g., interleaved text-image outputs) is listed as a pending feature—not yet fully supported in the public release.

- Hardware demands: The 7B model requires substantial GPU memory; the 1.5B variant is more accessible for prototyping.

Nonetheless, the inference experience is polished, and the team actively maintains community channels (Discord, WeChat) for support.

Summary

Show-o redefines what’s possible with unified multimodal AI. By integrating understanding and generation across text, images, and videos into a single Transformer—backed by autoregressive language modeling and flow matching—it eliminates the engineering overhead of maintaining siloed models. With open-source code, pre-trained weights, and support for real-world tasks like VQA, inpainting, and high-res image synthesis, it’s a strategic choice for teams seeking a future-proof, all-in-one foundation. If your project touches multiple modalities, Show-o isn’t just convenient—it’s transformative.