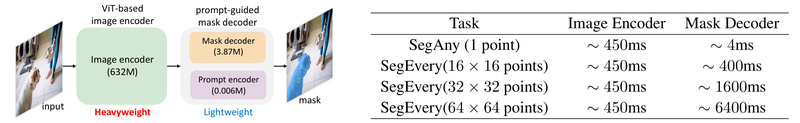

MobileSAM is a lightweight variant of Meta’s Segment Anything Model (SAM), built to deliver comparable segmentation quality at a small fraction of the compute. It supports both “segment anything” (SegAny), which predicts a mask from a user-provided point or box, and “segment everything” (SegEvery), which automatically detects and masks all objects in an image. Its small size makes near-real-time, on-device segmentation practical.

Built on a distilled TinyViT image encoder (just 5M parameters vs. SAM’s 611M), MobileSAM maintains SAM’s original prompt-guided mask decoder, preserving full API compatibility. This means developers and researchers can swap in MobileSAM with virtually no code changes—making it a plug-and-play solution for resource-constrained environments like mobile apps, edge devices, and browser-based tools.

With MobileSAMv2, SegEvery gets faster still. It replaces SAM’s brute-force grid-search prompting with object-aware prompt sampling, which the MobileSAMv2 authors report cuts the time spent in the mask decoder by at least 16× and improves zero-shot object proposal performance on LVIS by an average of 3.6%.

Whether you’re building annotation tools, AI-powered photo editors, 3D scene understanding systems, or real-time vision pipelines, MobileSAM offers SAM-style promptable segmentation at a fraction of the compute cost, model size, and latency.

Why MobileSAM Outperforms Alternatives

Speed and Efficiency Without Compromise

MobileSAM processes an image in about 12ms on a single GPU, roughly 38× faster than the original SAM (456ms). Nearly all of the gain comes from the lightweight image encoder (8ms vs. 452ms). The mask decoder is unchanged, so prompting behaves the same way it does in SAM.

| Metric | Original SAM | MobileSAM |
|---|---|---|
| Image Encoder Parameters | 611M | 5M |
| Image Encoder Speed | 452ms | 8ms |
| Full Pipeline Speed | 456ms | 12ms |
| Total Parameters | 615M | 9.66M |
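The ratios implied by the table are easy to check by hand. The short sketch below does that arithmetic; the dictionary and function names are made up for illustration, and the only data used are the figures from the table.

```python
# Figures copied from the comparison table (milliseconds, millions of parameters).
sam = {"encoder_ms": 452, "pipeline_ms": 456, "encoder_params_m": 611, "total_params_m": 615}
mobile_sam = {"encoder_ms": 8, "pipeline_ms": 12, "encoder_params_m": 5, "total_params_m": 9.66}

def ratio(key):
    """How many times larger the original SAM figure is than MobileSAM's."""
    return sam[key] / mobile_sam[key]

for key in sam:
    print(f"{key}: {ratio(key):.1f}x")

# Time left over once the image encoder has run (prompt handling and mask decoding).
rest_ms_sam = sam["pipeline_ms"] - sam["encoder_ms"]
rest_ms_mobile = mobile_sam["pipeline_ms"] - mobile_sam["encoder_ms"]
print(rest_ms_sam, rest_ms_mobile)
```

The non-encoder part of the pipeline takes the same 4ms in both models, which is consistent with the decoder being shared: the whole speedup comes from the encoder.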

Compared to FastSAM, another lightweight alternative, MobileSAM is about 5× faster (12ms vs. 64ms) and about 7× smaller (9.66M vs. 68M parameters). More importantly, it aligns far more closely with SAM’s outputs. With two-point prompts, and with the original SAM’s masks used as the reference, MobileSAM achieves mIoU scores above 0.71 while FastSAM stays around 0.3–0.4.
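For readers who want to run a similar comparison themselves, here is a minimal sketch of mask IoU and its mean over paired masks. It assumes the reference masks come from the original SAM and the candidates from the model under test; the function names are illustrative and this is not the evaluation script behind the numbers above.

```python
import numpy as np

def mask_iou(mask_a, mask_b):
    """Intersection over union of two boolean masks of the same shape."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    union = np.logical_or(a, b).sum()
    if union == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return float(np.logical_and(a, b).sum() / union)

def mean_iou(reference_masks, candidate_masks):
    """Average IoU over paired masks (reference first, candidate second)."""
    pairs = list(zip(reference_masks, candidate_masks))
    return sum(mask_iou(r, c) for r, c in pairs) / len(pairs)

# Toy 4x4 example: the reference covers the top 2 rows, the candidate the top 3.
reference = np.zeros((4, 4), dtype=bool)
reference[:2, :] = True
candidate = np.zeros((4, 4), dtype=bool)
candidate[:3, :] = True
print(mask_iou(reference, candidate))  # intersection of 8 pixels over a union of 12
```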

Seamless Integration and Developer Experience

Because MobileSAM retains SAM’s preprocessing, postprocessing, and API structure, adopting it requires very little refactoring. Existing SAM-based projects, from Stable Diffusion WebUI plugins to 3D segmentation tools like SegmentAnythingin3D, can switch by importing from `mobile_sam`, selecting the `vit_t` model type, and loading the MobileSAM checkpoint.

This compatibility has fueled rapid community adoption, with integrations already live in:

- AnyLabeling for auto-labeling

- Inpaint-Anything and joliGEN for efficient mask-guided inpainting

- Personalize-SAM for one-shot customization

- Browser-based demos running directly on phones or laptops
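The kind of swap described above can be sketched as a small loader that picks the package and model type by name. `load_predictor` and `BACKENDS` are hypothetical helpers written for this article, and the checkpoint path in the usage comment is a placeholder; only `sam_model_registry` and `SamPredictor` come from the two libraries.

```python
import importlib

# Package name and registry key per backend. "vit_h" is one of the registry
# keys in segment_anything; "vit_t" is the MobileSAM key used in this article.
BACKENDS = {
    "sam": ("segment_anything", "vit_h"),
    "mobile_sam": ("mobile_sam", "vit_t"),
}

def load_predictor(backend, checkpoint, device="cpu"):
    """Build a SamPredictor from either package (hypothetical helper)."""
    package_name, model_type = BACKENDS[backend]
    package = importlib.import_module(package_name)  # imported only when needed
    model = package.sam_model_registry[model_type](checkpoint=checkpoint)
    model.to(device=device)
    model.eval()
    return package.SamPredictor(model)

# Usage, with the chosen package installed and a checkpoint on disk:
#   predictor = load_predictor("mobile_sam", "mobile_sam.pt", device="cuda")
```

Everything downstream of the returned predictor stays the same, which is why existing SAM integrations need so few changes.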

Ideal Use Cases

MobileSAM excels wherever speed, low memory footprint, or on-device deployment matter:

- Mobile and Edge Applications: Run segmentation directly on smartphones or embedded systems without cloud dependency.

- Real-Time Annotation Tools: Accelerate data labeling workflows in computer vision pipelines.

- Creative AI Tools: Enable instant object masking in photo editors, video apps, or generative AI interfaces (e.g., Stable Diffusion).

- 3D Vision Systems: Extend 2D segmentation to point clouds or NeRFs with minimal latency overhead.

- Educational and Prototyping Projects: Experiment with state-of-the-art segmentation on consumer-grade hardware.

With ONNX export support, MobileSAM models can be deployed across diverse inference engines (e.g., ONNX Runtime, TensorRT), further broadening hardware compatibility.
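As a rough sketch of what ONNX inference looks like, the helper below assembles the input dictionary for an exported mask decoder. It assumes the MobileSAM export keeps the input names and shapes used in SAM's ONNX example (`image_embeddings`, `point_coords`, `point_labels`, `mask_input`, `has_mask_input`, `orig_im_size`); check your exported model before relying on them. `build_decoder_inputs` and the file name in the comments are hypothetical.

```python
import numpy as np

def build_decoder_inputs(image_embedding, point_coords, point_labels, orig_hw):
    """Assemble inputs for a SAM-style ONNX mask decoder (names are assumed).

    point_coords must already be scaled to the model's resized input frame;
    in the PyTorch API, SamPredictor.transform.apply_coords does this.
    """
    coords = np.asarray(point_coords, dtype=np.float32).reshape(-1, 2)
    labels = np.asarray(point_labels, dtype=np.float32).reshape(-1)
    # With no box prompt, SAM's ONNX example appends one padding point labelled -1.
    coords = np.concatenate([coords, np.zeros((1, 2), dtype=np.float32)])[None]
    labels = np.concatenate([labels, np.array([-1], dtype=np.float32)])[None]
    return {
        "image_embeddings": np.asarray(image_embedding, dtype=np.float32),
        "point_coords": coords,
        "point_labels": labels,
        "mask_input": np.zeros((1, 1, 256, 256), dtype=np.float32),  # no prior mask
        "has_mask_input": np.zeros(1, dtype=np.float32),
        "orig_im_size": np.asarray(orig_hw, dtype=np.float32),
    }

inputs = build_decoder_inputs(
    image_embedding=np.zeros((1, 256, 64, 64), dtype=np.float32),  # placeholder embedding
    point_coords=[[512.0, 384.0]],
    point_labels=[1],
    orig_hw=(768, 1024),
)

# With onnxruntime installed and a decoder exported to a file (hypothetical path):
#   import onnxruntime as ort
#   session = ort.InferenceSession("mobile_sam_decoder.onnx")
#   masks, scores, low_res_logits = session.run(None, inputs)
```

In this setup the image embedding is still computed by the image encoder; only the prompt-to-mask step runs through the exported decoder.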

Getting Started in Minutes

Installing and using MobileSAM is straightforward:

```bash
pip install git+https://github.com/ChaoningZhang/MobileSAM.git
```

Then, load the model just like SAM:

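```python
import torch
from mobile_sam import sam_model_registry, SamPredictor

# Use the GPU when available, otherwise fall back to the CPU.
device = "cuda" if torch.cuda.is_available() else "cpu"

# "vit_t" selects the TinyViT-based MobileSAM model.
mobile_sam = sam_model_registry["vit_t"](checkpoint="mobile_sam.pt")
mobile_sam.to(device=device)
mobile_sam.eval()

predictor = SamPredictor(mobile_sam)
predictor.set_image(your_image)  # RGB image as an HxWx3 uint8 array
masks, _, _ = predictor.predict(point_coords=coords, point_labels=labels)
```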
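The `coords` and `labels` arguments are plain NumPy arrays. As a hedged illustration, the sketch below builds a two-click prompt and picks the highest-scoring mask from a multi-mask prediction. `best_mask` is a helper invented here, and the demo arrays are stand-ins so the snippet runs without a model.

```python
import numpy as np

# One foreground click and one background click, as (x, y) pixel coordinates.
coords = np.array([[320, 240], [100, 80]], dtype=np.float32)  # shape (N, 2)
labels = np.array([1, 0])                                     # 1 = foreground, 0 = background

def best_mask(masks, scores):
    """Return the mask with the highest predicted quality score."""
    return masks[int(np.argmax(scores))]

# With a predictor prepared as in the snippet above:
#   masks, scores, _ = predictor.predict(
#       point_coords=coords, point_labels=labels, multimask_output=True)
#   mask = best_mask(masks, scores)

# Stand-in arrays with the same layout (num_masks, H, W) and (num_masks,).
demo_masks = np.zeros((3, 4, 4), dtype=bool)
demo_masks[1, :2, :2] = True
demo_scores = np.array([0.62, 0.91, 0.75])
chosen = best_mask(demo_masks, demo_scores)
print(chosen.sum())  # number of pixels in the selected mask
```

The second value returned by `predict` is the model's own quality estimate for each mask, so sorting by it is a simple way to resolve ambiguous prompts.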

For full-image SegEvery:

```python
from mobile_sam import SamAutomaticMaskGenerator

# Reuses the mobile_sam model loaded above.
mask_generator = SamAutomaticMaskGenerator(mobile_sam)
masks = mask_generator.generate(your_image)  # list of dicts, one per mask
```
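In SAM's API, `generate` returns a list of dictionaries, one per mask, with fields such as `segmentation`, `area`, `bbox` (in XYWH format), `predicted_iou`, and `stability_score`. Assuming MobileSAM keeps that format, a small post-processing step might look like the sketch below. `largest_masks` is a hypothetical helper and the demo records are made up.

```python
def largest_masks(records, top_k=5, min_area=0):
    """Keep the top_k largest mask records whose area is at least min_area."""
    kept = [r for r in records if r["area"] >= min_area]
    return sorted(kept, key=lambda r: r["area"], reverse=True)[:top_k]

# Stand-in records with only the fields used here; real records also carry
# "segmentation" (a boolean HxW array) and other metadata.
demo = [
    {"area": 120, "bbox": [0, 0, 12, 10], "predicted_iou": 0.95},
    {"area": 15, "bbox": [30, 30, 5, 3], "predicted_iou": 0.80},
    {"area": 900, "bbox": [5, 5, 30, 30], "predicted_iou": 0.99},
]
top = largest_masks(demo, top_k=2, min_area=50)
print([r["area"] for r in top])
```

Filtering by area or predicted IoU is a common way to drop tiny or low-confidence masks before showing results to a user.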

MobileSAMv2 enhances SegEvery further—simply download the updated weights and run the provided script to leverage object-aware prompting for faster, higher-quality everything segmentation.
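To see why grid prompting is expensive, it helps to count the prompts. The sketch below builds a regular grid of point prompts in normalized coordinates, which is the general idea behind SAM-style SegEvery. It is a stand-alone illustration of grid prompting only, not MobileSAMv2's object-aware sampler and not code from either library.

```python
import numpy as np

def build_point_grid(points_per_side):
    """Evenly spaced (x, y) points in the unit square, one per grid cell centre."""
    offset = 1.0 / (2 * points_per_side)
    axis = np.linspace(offset, 1.0 - offset, points_per_side)
    xs, ys = np.meshgrid(axis, axis)
    return np.stack([xs.ravel(), ys.ravel()], axis=1)

for side in (16, 32, 64):
    grid = build_point_grid(side)
    print(side, len(grid))  # prompt count grows with the square of the grid side
```

Every grid point is a separate prompt for the mask decoder, whether or not an object is there. Object-aware sampling instead ties prompts to likely objects, so the decoder runs far fewer times.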

Limitations and Practical Considerations

While MobileSAM matches SAM visually in most scenarios, it’s important to note:

- It was trained on only 100K images (1% of SA-1B) via knowledge distillation, which may affect robustness in rare or highly complex scenes.

- The full training code is not yet public, limiting fine-tuning flexibility for advanced users.

- For ultra-high-precision medical or industrial applications, the original SAM may still be preferable—though often at prohibitive computational cost.

That said, for the vast majority of real-world applications—especially those prioritizing speed, accessibility, and deployability—MobileSAM delivers an optimal balance of performance and practicality.

Summary

MobileSAM distills SAM into a 9.66M-parameter model that runs in about 12ms per image on a single GPU, removing the main barriers to deploying interactive, promptable segmentation: cost, latency, and hardware requirements. With full SAM compatibility, ONNX support, and a growing ecosystem of integrations, it is a practical choice for practitioners who need fast, lightweight segmentation.