Parsing complex documents—especially those containing tables, mathematical formulas, mixed layouts, or multilingual content—remains a persistent challenge in real-world AI applications. Traditional optical character recognition (OCR) pipelines often require stitching together multiple specialized models (e.g., separate layout detectors, text recognizers, and table parsers), leading to brittle, slow, and hard-to-maintain systems. On the other hand, giant vision-language models (VLMs) like Qwen2.5-VL or Gemini 2.5 Pro can handle end-to-end parsing but suffer from high computational costs, slow inference speeds, and impractical deployment requirements—even for single-page PDFs.

Enter MonkeyOCR, a purpose-built vision-language model that strikes an exceptional balance between accuracy, speed, and deployability by introducing a novel Structure-Recognition-Relation (SRR) triplet paradigm. Instead of relying on fragmented toolchains or bloated multimodal architectures, MonkeyOCR reframes document parsing as three intuitive, human-aligned questions:

- “Where is it?” → Structure detection (layout analysis)

- “What is it?” → Content recognition (text, formulas, tables)

- “How is it organized?” → Logical relation modeling (reading order, hierarchy)

This decomposition enables precise, scalable, and efficient parsing—without sacrificing performance on the most challenging document elements. Trained on MonkeyDoc, the largest publicly available document parsing dataset to date (3.9 million instances across 10+ document types in English and Chinese), MonkeyOCR consistently outperforms both modular OCR systems and much larger VLMs, while running smoothly on a single consumer-grade GPU like the NVIDIA RTX 3090.
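To make the SRR decomposition concrete, here is a minimal sketch of how the three answers could sit side by side on one detected block. The `Block` class, its field names, and `to_markdown` are illustrative assumptions and do not reflect MonkeyOCR's actual internal data model.

```python
from dataclasses import dataclass


@dataclass
class Block:
    # Hypothetical record; field names are illustrative, not MonkeyOCR's schema.
    bbox: tuple[float, float, float, float]  # Structure: where is it? (x0, y0, x1, y1)
    kind: str                                # Structure: "text", "formula", or "table"
    content: str                             # Recognition: what is it?
    order: int                               # Relation: position in reading order


def to_markdown(blocks: list[Block]) -> str:
    """Join recognized blocks in reading order, wrapping formulas in $$ delimiters."""
    parts = []
    for block in sorted(blocks, key=lambda b: b.order):
        if block.kind == "formula":
            parts.append(f"$$\n{block.content}\n$$")
        else:
            parts.append(block.content)
    return "\n\n".join(parts)


# Blocks arrive in detection order, not reading order.
page = [
    Block((50, 300, 550, 340), "formula", "E = mc^2", order=2),
    Block((50, 40, 550, 80), "text", "Mass-energy equivalence", order=0),
    Block((50, 100, 550, 280), "text", "Einstein's relation is:", order=1),
]
print(to_markdown(page))
```

The point of the sketch is that layout, content, and ordering are separate pieces of information: the same detected blocks produce a different document if only the `order` values change.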

Why Traditional Approaches Fall Short

Many engineering teams turn to modular OCR frameworks such as MinerU, which combine distinct models for layout detection, OCR, and table/formula parsing. While flexible, these pipelines accumulate errors across stages, struggle with cross-element alignment (e.g., linking a table caption to its body), and require extensive tuning. Their average performance on complex content like formulas or tables often lags significantly—sometimes by more than 15% compared to end-to-end methods.

Conversely, deploying massive VLMs (e.g., 72B-parameter Qwen2.5-VL) for document parsing seems appealing but introduces new pain points:

- Inference speeds as low as 0.12 pages/second

- Need for high-end multi-GPU setups

- High latency and operational overhead

- Diminishing returns on accuracy relative to computational cost

MonkeyOCR directly addresses these trade-offs by offering a leaner, task-specialized architecture that retains the coherence of end-to-end modeling while avoiding unnecessary bloat.

Core Capabilities That Solve Real Engineering Problems

State-of-the-Art Accuracy on Challenging Content

MonkeyOCR improves on MinerU by 15.0% in formula parsing and 8.6% in table understanding on benchmark datasets. It also achieves top results on OmniDocBench and olmOCR-Bench, outperforming much larger models such as Gemini 2.5 Pro, GPT-4o, and InternVL3-78B, despite having only 1.2B or 3B parameters.

Fast, Predictable Inference Speeds

Depending on the model variant and hardware, MonkeyOCR processes:

- 0.68–1.43 pages/second for MonkeyOCR-pro-1.2B on an RTX 3090

- Up to 2.41 pages/second on an RTX 4090

This is 3–7× faster than Qwen2.5-VL and ~30% faster than MinerU, enabling real-time or near-real-time document processing in production environments.
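Throughput figures are easier to plan with once converted into time per page and time per batch. The sketch below does that arithmetic for the rates quoted above. It assumes pages are processed back to back at a constant rate, which real workloads only approximate, and the 1,000-page batch size is an arbitrary example.

```python
def seconds_per_page(pages_per_second: float) -> float:
    """Average time spent on one page at a steady throughput."""
    return 1.0 / pages_per_second


def batch_minutes(num_pages: int, pages_per_second: float) -> float:
    """Wall-clock minutes to process num_pages at a steady throughput."""
    return num_pages / pages_per_second / 60.0


# Rates taken from the figures quoted in this section.
rates = [
    ("RTX 3090, low end", 0.68),
    ("RTX 3090, high end", 1.43),
    ("RTX 4090, peak", 2.41),
]
for label, rate in rates:
    print(
        f"{label}: {seconds_per_page(rate):.2f} s/page, "
        f"{batch_minutes(1000, rate):.1f} min per 1,000 pages"
    )
```

Under these assumptions the range works out to roughly 1.47 s down to 0.41 s per page, or about 24.5 down to 6.9 minutes for a 1,000-page batch.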

Lightweight Deployment

Both the 3B and 1.2B variants run efficiently on a single GPU, including consumer cards like the RTX 3090 or even the 8GB RTX 4060 (with quantization). This makes MonkeyOCR ideal for edge deployments, on-premise enterprise systems, or cloud microservices with strict resource budgets.

Multilingual Support for Real-World Documents

MonkeyOCR natively handles both English and Simplified Chinese across diverse document genres: academic papers, financial reports, textbooks, newspapers, slides, and exam sheets. Its performance remains robust even on dense, multi-column layouts or documents with embedded equations.

Practical Use Cases

MonkeyOCR shines in scenarios where structure, fidelity, and speed matter:

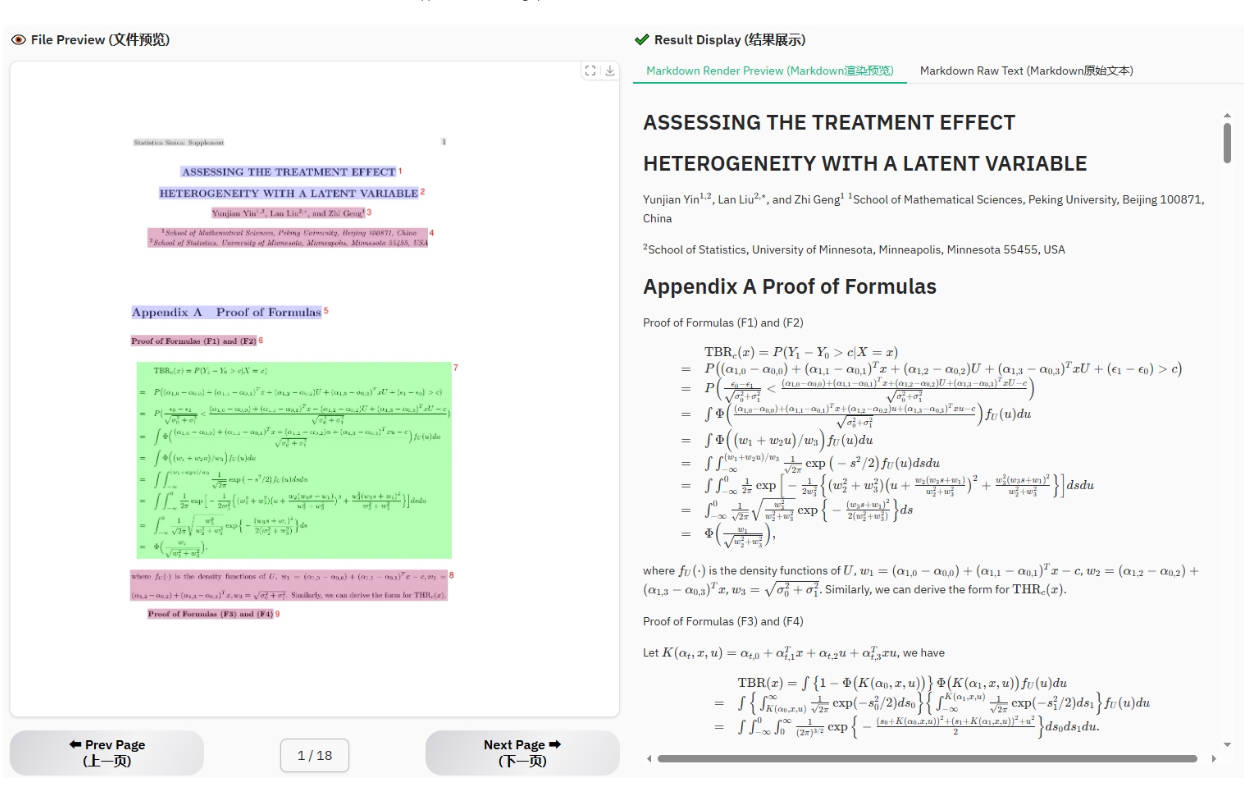

- Academic digitization: Convert research PDFs into structured Markdown with preserved equations and reference order.

- Financial data extraction: Parse quarterly reports to extract tables and key metrics in machine-readable format.

- Educational content processing: Transform scanned textbooks into editable, logically ordered documents.

- SaaS document intelligence: Power “smart upload” features in legal, insurance, or compliance platforms that need accurate layout-aware parsing.

It is particularly well-suited for born-digital PDFs and high-quality scans of printed text, where layout integrity is preserved.

Getting Started Is Straightforward

MonkeyOCR offers a developer-friendly experience with multiple deployment options:

Command-Line Interface (CLI)

Install via pip, download weights, and run inference in minutes:

    python parse.py document.pdf
    python parse.py image.jpg -t formula   # formula-only mode
    python parse.py /folder -g 10 -s       # batch process with page splitting
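For teams that want to drive the CLI from a script, a thin wrapper is often enough. The sketch below only assembles the same commands shown above. The flags `-t`, `-g`, and `-s` come from those commands, while the function names and keyword names (`task`, `group_size`, `split_pages`) are my own labels and are not part of MonkeyOCR.

```python
import subprocess
import sys


def build_command(input_path, task=None, group_size=None, split_pages=False):
    """Assemble a parse.py command line using only the flags shown in this section."""
    cmd = [sys.executable, "parse.py", str(input_path)]
    if task is not None:
        cmd += ["-t", task]              # e.g. "formula" for formula-only mode
    if group_size is not None:
        cmd += ["-g", str(group_size)]   # value passed to -g, as in "-g 10"
    if split_pages:
        cmd.append("-s")                 # page splitting
    return cmd


def run_parse(input_path, **options):
    """Run parse.py from the current directory; raises CalledProcessError on failure."""
    return subprocess.run(build_command(input_path, **options), check=True)


# Print the commands without executing them.
print(build_command("document.pdf"))
print(build_command("image.jpg", task="formula"))
print(build_command("/folder", group_size=10, split_pages=True))
```

`run_parse` assumes the working directory contains `parse.py` and that the environment is already set up. Passing the arguments as a list avoids shell quoting problems with file names that contain spaces.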

Output Flexibility

Each run generates:

- A clean Markdown file with text, tables (in Markdown grid format), and LaTeX formulas

- A layout visualization PDF overlaying detected blocks

- A structured JSON file with coordinates, content types, and relational metadata
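Downstream code usually needs to locate these three artifacts after a run. The sketch below groups the files in an output directory by extension. The role names and the example file names are hypothetical, and the actual naming scheme of MonkeyOCR's outputs may differ.

```python
import tempfile
from pathlib import Path

# Map file extensions to the three output kinds listed above (role names are illustrative).
ROLES = {".md": "markdown", ".pdf": "layout_pdf", ".json": "structure_json"}


def collect_outputs(output_dir):
    """Group files in output_dir by output kind, ignoring anything unrecognized."""
    found = {}
    for path in sorted(Path(output_dir).iterdir()):
        role = ROLES.get(path.suffix.lower())
        if role and path.is_file():
            found.setdefault(role, []).append(path)
    return found


# Demonstration on a temporary directory with made-up file names.
with tempfile.TemporaryDirectory() as tmp:
    for name in ("report.md", "report_layout.pdf", "report.json", "notes.txt"):
        (Path(tmp) / name).touch()
    demo = {role: [p.name for p in paths] for role, paths in collect_outputs(tmp).items()}

print(demo)
```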

Integration Options

- Gradio demo for rapid prototyping (python demo/demo_gradio.py)

- FastAPI server for RESTful integration (uvicorn api.main:app)

- Docker images for containerized deployment (with GPU support)

Quantized versions (via AWQ) further reduce VRAM usage, enabling use on modest hardware.
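A rough calculation shows why quantization matters on small cards. The sketch below estimates weight storage only, assuming 16-bit weights for the unquantized model and 4-bit weights for the quantized one (a common AWQ setting, assumed here rather than confirmed for MonkeyOCR's releases). It ignores activations, caches, quantization scales, and framework overhead, so real VRAM usage will be higher.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Parameter counts from the two model variants mentioned in this article.
for name, params in [("1.2B", 1.2e9), ("3B", 3e9)]:
    fp16 = weight_memory_gb(params, 16)
    int4 = weight_memory_gb(params, 4)
    print(f"{name}: {fp16:.1f} GB at 16-bit, {int4:.1f} GB at 4-bit")
```

Under these assumptions the 3B variant drops from about 6.0 GB of weights to about 1.5 GB, which leaves considerably more headroom on an 8 GB card.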

Current Limitations

While powerful, MonkeyOCR has known boundaries:

- Not optimized for handwritten text or smartphone-captured photos (low-quality, distorted inputs)

- Does not support Traditional Chinese or any language other than English and Simplified Chinese

- Best performance on clean, digital documents—not noisy historical scans

These constraints are clearly documented, and the team plans to expand coverage in future releases.

Summary

For engineers and product teams building document understanding systems, MonkeyOCR combines three things that rarely come together: benchmark-leading accuracy on complex layouts, throughput of roughly 0.7 to 2.4 pages per second depending on model variant and GPU, and single-GPU deployability. By replacing brittle OCR pipelines and oversized VLMs with its focused SRR paradigm, it removes critical bottlenecks in real-world workflows. If your project involves parsing scientific papers, financial statements, textbooks, or any structured document with tables or formulas, MonkeyOCR is a strong candidate: it is reported to outperform far larger models while remaining practical, fast, and open.