Deploying large AI models in production often involves a fragmented toolchain: one set of libraries for training, another for quantization, and yet another for serving. TorchAO changes this by offering a PyTorch-native, end-to-end model optimization framework that unifies quantization, sparsity, and efficient training—all within the familiar PyTorch ecosystem. Whether you’re pre-training a 70B-parameter language model or deploying a quantized 1B model to a mobile device, TorchAO streamlines the entire workflow without forcing you to jump between incompatible tools.

Built for real-world performance, TorchAO supports FP8 quantized training, quantization-aware training (QAT), post-training quantization (PTQ), and structured sparsity techniques like 2:4 sparsity. It leverages PyTorch’s tensor subclass system to represent low-precision data types—including INT4, INT8, FP8, MXFP4, MXFP6, and MXFP8—in a hardware-agnostic way, ensuring portability across server, edge, and future architectures.

Why TorchAO Solves Critical Pain Points

Eliminates Tool Fragmentation

Historically, optimizing a model for inference required exporting it from a training framework, applying external quantization scripts, and integrating with a separate serving engine. TorchAO collapses this pipeline:

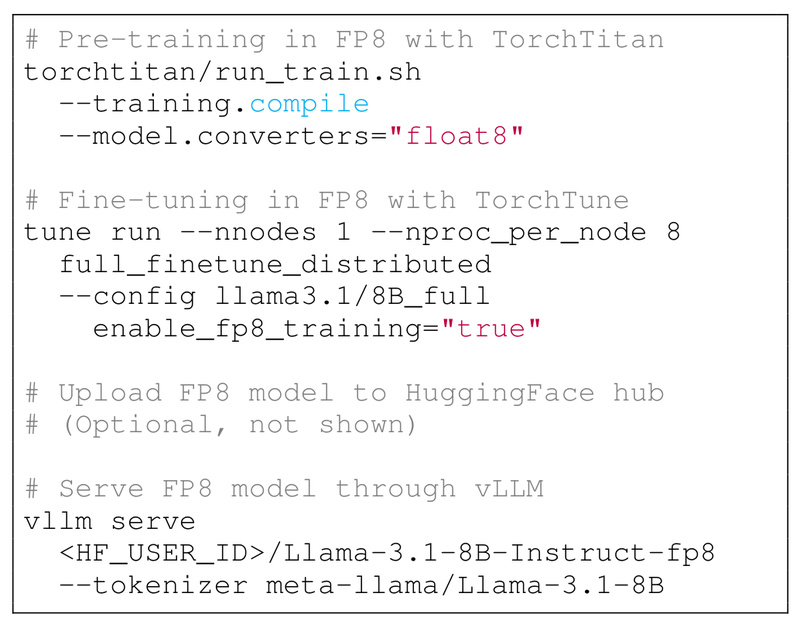

- Pre-train with TorchTitan using FP8.

- Fine-tune with QAT via TorchTune, Axolotl, or Unsloth.

- Serve directly through Hugging Face Transformers, vLLM, SGLang, or ExecuTorch—with quantization applied in-place.

This eliminates format conversions, compatibility bugs, and accuracy drift between stages.

Delivers Measurable Speed and Memory Gains

TorchAO isn’t theoretical—it ships models that are faster and leaner:

- 1.5× faster pre-training for Llama-3.1-70B using FP8 on H100 clusters.

- 1.89× faster inference and 58% less memory for Llama-3-8B quantized to INT4.

- 67% recovery of accuracy loss on Gemma3-4B via QAT compared to naive PTQ.

- 2.37× throughput on Llama-3-8B using INT4 + 2:4 sparsity.

These aren’t lab curiosities—they power production models like Llama 3.2 1B/3B and LlamaGuard3-8B.

Enables Low-Bit Quantization Without Catastrophic Accuracy Drop

Post-training quantization to INT4 often degrades model quality. TorchAO’s QAT workflow recovers up to 96% of lost accuracy on benchmarks like HellaSwag. By simulating quantization effects during fine-tuning, QAT ensures the final quantized model behaves nearly identically to its full-precision counterpart—making aggressive compression viable even for sensitive applications.

Best Use Cases: When TorchAO Shines

Large-Scale Training on H100/B200 Clusters

If you’re pre-training or fine-tuning billion-parameter models on modern NVIDIA GPUs, FP8 training with TorchAO can accelerate throughput by up to 1.5× while maintaining convergence. Integrated with FSDP2 and torch.compile(), it scales efficiently to thousands of GPUs.

Production LLM Serving with Minimal Overhead

For teams deploying LLMs on A100 or H100 servers, INT4 weight-only quantization via TorchAO reduces memory pressure and boosts token generation speed by nearly 2×—ideal for cost-sensitive, high-throughput inference.

Edge Deployment via ExecuTorch

TorchAO supports INT1–INT7 quantization for ARM CPUs. Combined with ExecuTorch, you can run quantized models like Qwen3-4B on an iPhone 15 Pro with under 3.4 GB of memory—enabling on-device AI without cloud dependency.

Fine-Tuning Quantized Models Efficiently

Rather than quantizing after fine-tuning (and risking quality loss), use TorchAO’s QAT with LoRA or full-parameter tuning. Integrations with Unsloth and Axolotl let you fine-tune quantized models 1.89× faster than standard QAT, with preserved accuracy.

Getting Started with Minimal Code

TorchAO requires only a few lines to integrate into existing PyTorch workflows.

Option 1: Direct Quantization (for custom models)

from torchao.quantization import Int4WeightOnlyConfig, quantize_ quantize_(model,Int4WeightOnlyConfig(group_size=32,int4_packing_format="tile_packed_to_4d",int4_choose_qparams_algorithm="hqq") )

Option 2: Hugging Face Integration (for Transformers models)

from transformers import AutoModelForCausalLM, TorchAoConfig

from torchao.quantization import PerRow

quantization_config = TorchAoConfig(quant_type=PerRow())

model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen3-32B",device_map="auto",quantization_config=quantization_config

)

Once quantized, the model works out-of-the-box with torch.compile(), FSDP2, and popular serving backends like vLLM—no refactoring needed.

Hardware and Maturity Considerations

TorchAO’s capabilities vary by hardware:

- H100/B200: Best for FP8 training/inference and MXFP* formats.

- A100: Ideal for INT4/INT8 quantization with BFloat16 activations.

- ARM CPUs: Supports INT1–INT7 for edge deployment.

While core workflows (FP8 training, INT4 PTQ, QAT) are stable, newer features like MXFP4 training or blockwise FP8 remain prototypes. Always consult the compatibility table and documentation before adopting experimental modes in production.

Ecosystem Integrations Reduce Adoption Risk

TorchAO isn’t a standalone tool—it’s a connective layer in the modern AI stack:

- Training: TorchTitan (pre-training), TorchTune, Axolotl, Unsloth (fine-tuning).

- Model Hubs: Pre-quantized models on Hugging Face.

- Serving: vLLM, SGLang, ExecuTorch.

- Libraries: PEFT (for LoRA), FBGEMM (optimized kernels), Diffusers.

This deep integration means you can adopt TorchAO incrementally—without overhauling your pipeline.

Summary

TorchAO solves a critical bottleneck in the AI lifecycle: the disconnect between training efficiency and inference optimization. By providing a unified, PyTorch-native framework for quantization, sparsity, and low-precision training, it enables teams to ship faster, leaner, and more accurate models—from data centers to smartphones. With proven speedups, strong ecosystem support, and minimal code changes required, TorchAO is a pragmatic choice for anyone serious about deploying optimized models at scale.