Robotic manipulation—especially with two arms working in coordination—is essential for complex real-world tasks like assembling electronics, handling kitchenware, or performing logistics operations. However, developing reliable bimanual policies remains a major challenge due to the scarcity of real-world demonstration data, the high cost of physical trials, and poor generalization from simulation to reality.

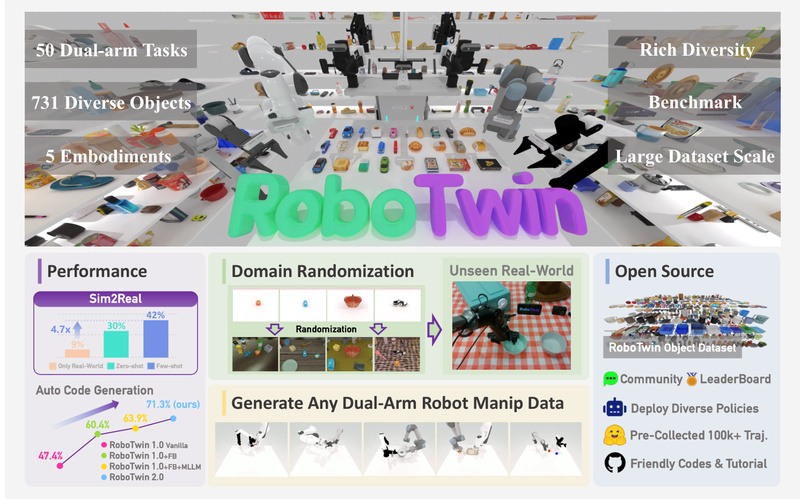

Enter RoboTwin 2.0: a next-generation, open-source simulation platform designed specifically to address these bottlenecks. Built on scalable data generation, strong domain randomization, and automated task synthesis, RoboTwin 2.0 enables researchers and engineers to train and evaluate robust dual-arm manipulation policies—even with minimal real-world data.

What sets RoboTwin apart is its end-to-end framework: from a rich object library and multimodal task generation to evaluation protocols that test visual, linguistic, and embodiment robustness. Whether you’re fine-tuning a vision-language-action (VLA) model or benchmarking cross-robot generalization, RoboTwin provides the tools, data, and infrastructure to accelerate progress in bimanual robotics.

Core Innovations That Drive Sim-to-Real Success

A Large, Annotated Object Library: RoboTwin-OD

At the heart of RoboTwin 2.0 lies RoboTwin-OD, a meticulously curated library of 731 real-world object instances spanning 147 categories. Each object comes with semantic labels and manipulation-relevant annotations (e.g., grasp points, symmetry, material properties), ensuring that simulated interactions remain physically plausible and task-relevant. This depth of asset realism is rare in existing robotic datasets and directly contributes to more transferable policies.

Automated Task Generation via Multimodal Language Models

Manually scripting hundreds of bimanual tasks is time-consuming and limits scalability. RoboTwin 2.0 introduces an expert data synthesis pipeline that leverages multimodal language models (MLLMs) to automatically generate executable task code from natural language instructions. This code is then refined through simulation-in-the-loop feedback, iteratively correcting errors until successful task execution is achieved. The result? A 10.9% improvement in code generation success rate compared to prior methods—enabling rapid expansion to 50 diverse dual-arm tasks.
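
The refinement loop described above can be sketched in a few lines of Python. This is only an illustration of the generate, simulate, and revise cycle, not RoboTwin's actual pipeline: `refine_task_code`, `SimResult`, and the two toy stand-ins are hypothetical names, and a real setup would replace them with an MLLM call and a simulator rollout.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class SimResult:
    success: bool
    error_log: str = ""


def refine_task_code(
    instruction: str,
    generate: Callable[[str, Optional[str], Optional[str]], str],
    simulate: Callable[[str], SimResult],
    max_rounds: int = 5,
) -> Optional[str]:
    """Return the first candidate program that succeeds in simulation, else None."""
    code: Optional[str] = None
    feedback: Optional[str] = None
    for _ in range(max_rounds):
        # The generator sees the instruction, the previous attempt, and its error log.
        code = generate(instruction, code, feedback)
        result = simulate(code)
        if result.success:
            return code
        feedback = result.error_log
    return None


# Toy stand-ins (assumptions) so the sketch runs without an MLLM or a simulator.
def toy_generate(instruction, previous_code, feedback):
    if feedback is None:
        return "grasp(hammer)"  # first draft misses a step
    return "grasp(hammer); strike(block)"  # revised after feedback


def toy_simulate(code):
    if "strike" in code:
        return SimResult(success=True)
    return SimResult(success=False, error_log="block was never struck")


final_code = refine_task_code(
    "beat the block with the hammer", toy_generate, toy_simulate
)
print(final_code)
```

Capping the loop with `max_rounds` keeps a task that never converges from blocking the rest of the batch; such a task simply comes back as `None`.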

Strong Domain Randomization Across Five Key Axes

To bridge the sim-to-real gap, RoboTwin 2.0 applies structured domain randomization along five critical dimensions:

- Clutter density – Varying object arrangements on the tabletop

- Lighting conditions – Simulating different intensities, angles, and shadows

- Background scenes – Changing visual context to prevent overfitting

- Tabletop height – Adjusting workspace geometry

- Language phrasing – Diversifying task instructions to improve linguistic robustness

This multi-axis randomization pushes policies to learn invariant features rather than exploit simulation-specific artifacts, which makes them more likely to hold up on real hardware.
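
To make the five axes concrete, here is a minimal sketch of per-episode sampling, with one independent draw per axis. The `SceneConfig` fields, the numeric ranges, and the instruction templates are all illustrative assumptions, not RoboTwin's real configuration schema or defaults.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneConfig:
    num_clutter_objects: int
    light_intensity: float
    background_id: int
    table_height_m: float
    instruction: str


# Every range and template below is an assumption made for illustration.
INSTRUCTION_TEMPLATES = [
    "Use the hammer to hit the block.",
    "Pick up the hammer and strike the block.",
    "Hit the block with the hammer.",
]


def sample_scene(rng: random.Random) -> SceneConfig:
    """Draw one value per randomization axis, independently of the others."""
    return SceneConfig(
        num_clutter_objects=rng.randint(0, 8),
        light_intensity=rng.uniform(0.3, 1.5),
        background_id=rng.randrange(100),
        table_height_m=rng.uniform(0.70, 0.80),
        instruction=rng.choice(INSTRUCTION_TEMPLATES),
    )


rng = random.Random(0)  # fixed seed so the sampled scenes are reproducible
scenes = [sample_scene(rng) for _ in range(1000)]
```

Sampling the axes independently means a policy rarely sees the same combination twice, which is the property that discourages it from latching onto any single visual or linguistic cue.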

Multi-Embodiment Support for Generalization Testing

RoboTwin 2.0 is instantiated across five distinct dual-arm robot embodiments, allowing researchers to test how well policies transfer across different kinematic structures, gripper types, and actuation capabilities. This is crucial for developing truly generalizable robotic systems that aren’t tied to a single hardware platform.

Solving Real-World Pain Points for Robotics Teams

RoboTwin 2.0 directly addresses four persistent challenges in robotic manipulation development:

- Data Scarcity: With over 100,000 pre-collected trajectories publicly available—and tools to generate millions more—teams no longer need large fleets of physical robots to gather training data.

- Cost Reduction: Simulated training slashes the need for expensive, time-consuming real-world trials, especially during early policy development.

- Robustness Gaps: Policies trained on RoboTwin’s randomized data show a 367% relative improvement over a 10-real-demo baseline when fine-tuned, and even zero-shot models trained purely on synthetic data achieve a 228% gain, suggesting that high-quality simulation can meaningfully substitute for real data.

- Evaluation Fragmentation: The platform provides unified benchmark protocols, ensuring fair, reproducible comparisons across algorithms and embodiments.
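
Relative percentages like the ones in the robustness bullet are easy to misread, so a short worked conversion may help. The helpers below are generic arithmetic, not part of RoboTwin, and the function names are made up for this example.

```python
def relative_improvement_pct(baseline: float, new: float) -> float:
    """Relative change of `new` over `baseline`, in percent."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (new - baseline) / baseline * 100.0


def multiplier_from_pct(pct: float) -> float:
    """Convert a relative improvement in percent to a multiple of the baseline."""
    return 1.0 + pct / 100.0


print(round(multiplier_from_pct(367), 2))  # 4.67
print(round(multiplier_from_pct(228), 2))  # 3.28
```

In other words, a 367% relative improvement means the fine-tuned policy scores about 4.67 times the baseline on the same metric, and a 228% gain means about 3.28 times. Neither figure is an absolute success rate.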

Who Should Use RoboTwin—and How

RoboTwin 2.0 is ideal for:

- Academic researchers studying bimanual coordination, sim-to-real transfer, or VLA model training

- Startup robotics engineers building manipulation stacks with limited real-world data

- Industrial R&D teams prototyping dual-arm workflows in logistics, manufacturing, or service domains

Practical Use Cases

- Training VLA Models: Use synthetic trajectories to pre-train models like OpenVLA, TinyVLA, or ACT, then fine-tune with minimal real demos.

- Testing Visual Robustness: Evaluate how policies perform under varying lighting, backgrounds, and occlusions.

- Benchmarking Language Generalization: Assess whether models understand diverse phrasings of the same task.

- Cross-Embodiment Transfer: Train on one robot type and test on another to measure how much a policy depends on a specific hardware platform.

- Participating in Challenges: Join the RoboTwin Dual-Arm Collaboration Challenge @ CVPR 2025 MEIS Workshop to compete on standardized tasks.
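
For the robustness and transfer use cases above, results usually boil down to a table of success rates per condition. The sketch below aggregates episode outcomes into a train/test embodiment matrix. The record format, the `robot_a` and `robot_b` labels, and the outcomes themselves are placeholders invented for illustration, not RoboTwin output.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Episode = Tuple[str, str, bool]  # (train_embodiment, test_embodiment, success)


def success_matrix(episodes: Iterable[Episode]) -> Dict[Tuple[str, str], float]:
    """Success rate for every (train, test) embodiment pair seen in the episodes."""
    wins = defaultdict(int)
    totals = defaultdict(int)
    for train, test, success in episodes:
        totals[(train, test)] += 1
        wins[(train, test)] += int(success)
    return {pair: wins[pair] / totals[pair] for pair in totals}


# Placeholder outcomes, invented for illustration only.
episodes = [
    ("robot_a", "robot_a", True),
    ("robot_a", "robot_a", True),
    ("robot_a", "robot_b", True),
    ("robot_a", "robot_b", False),
]
matrix = success_matrix(episodes)
# Gap between in-embodiment and cross-embodiment success for a robot_a policy.
transfer_gap = matrix[("robot_a", "robot_a")] - matrix[("robot_a", "robot_b")]
```

The same grouping works for lighting, background, or instruction-phrasing conditions by swapping the key used for each episode.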

Getting Started: A Simple Workflow

RoboTwin 2.0 is designed for ease of adoption:

- Install the platform following the official documentation (takes ~20 minutes).

- Collect data using a single command:

      bash collect_data.sh beat_block_hammer demo_randomized 0

  This automatically finds a valid random seed and replays it to generate trajectories.

- Customize tasks by modifying configuration files to suit your research needs.

- Integrate your policy: RoboTwin supports a growing list of baselines including ACT, DP3, RDT, PI0, OpenVLA-oft, and community contributions like LLaVA-VLA and DexVLA.

- Evaluate and submit results to the public leaderboard to compare against state-of-the-art methods.
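
The data collection step above mentions finding a valid seed and then replaying it. One plausible reading is a two-pass scheme: first check which seeds let the scripted expert finish the task, then re-run only those seeds while recording. The sketch below shows that idea under stated assumptions. `find_valid_seeds` and `toy_expert_succeeds` are hypothetical, and the actual logic inside `collect_data.sh` may differ.

```python
import random
from typing import Callable, List


def find_valid_seeds(
    expert_succeeds: Callable[[int], bool],
    num_episodes: int,
    max_seed: int = 10_000,
) -> List[int]:
    """Scan seeds in order and keep those where the scripted expert succeeds."""
    valid: List[int] = []
    for seed in range(max_seed):
        if expert_succeeds(seed):
            valid.append(seed)
            if len(valid) == num_episodes:
                break
    return valid


def toy_expert_succeeds(seed: int) -> bool:
    # Stand-in (assumption) for running the expert in the simulator. The same
    # seed always gives the same outcome, which is what makes replay possible.
    return random.Random(seed).random() < 0.7


seeds = find_valid_seeds(toy_expert_succeeds, num_episodes=5)
# Second pass: replay each valid seed, this time recording observations and actions.
trajectories = [{"seed": s, "steps": []} for s in seeds]
```

Separating the search from the recording keeps failed rollouts out of the dataset and avoids paying the cost of rendering and logging for seeds that would be discarded anyway.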

Current Limitations and Practical Considerations

While powerful, RoboTwin 2.0 has a few constraints users should consider:

- Simulation Dependency: Despite strong domain randomization, extremely complex real-world dynamics (e.g., fluid interactions, fine-grained deformations) may still require real-world fine-tuning.

- Hardware Scope: Only five robot embodiments are officially supported; integrating new hardware requires additional effort.

- Compute Requirements: Large-scale data generation and policy training demand GPU resources, though pre-collected datasets reduce this barrier.

These limitations are common in simulation-based robotics platforms, and RoboTwin’s design aims to reduce their impact through asset realism, randomization diversity, and modularity.

Open Resources and Community Engagement

RoboTwin 2.0 is fully open-source under the MIT license and includes:

- Complete source code on GitHub

- 100,000+ pre-collected trajectories on Hugging Face

- Detailed documentation covering installation, task design, and policy deployment

- A public leaderboard for standardized benchmarking

- Active community challenges, including the CVPR 2025 RoboTwin Dual-Arm Collaboration Challenge

By lowering barriers to entry and promoting reproducibility, RoboTwin aims to become a foundational platform for scalable, robust bimanual manipulation research.

Summary

RoboTwin 2.0 is a substantial step forward for simulation-based robotic manipulation. By combining a rich object library, automated multimodal task generation, five-axis domain randomization, and multi-embodiment support, it targets the core challenges of data scarcity, poor sim-to-real transfer, and fragmented evaluation. The reported results support this: relative to a baseline trained on 10 real demonstrations, policies fine-tuned from synthetic RoboTwin data improve by 367%, and zero-shot policies trained only on synthetic data improve by 228%. For anyone working on dual-arm robotics, in academia or industry, RoboTwin 2.0 offers an accessible, community-supported foundation to build on.